I tried to reproduce the issue, and the pipeline works fine for me.

Please check the following points while creating the pipeline.

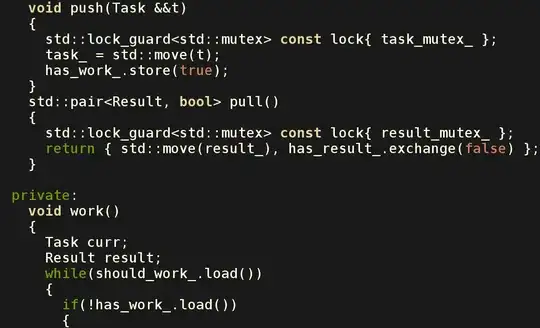

- Check that you have pasted your storage account connection string at line 6 of the main.py file.

- You need to create a Blob Storage linked service and a Batch linked service in Azure Data Factory (ADF). These linked services are required in the “Azure Batch” and “Settings” tabs when you configure the ADF pipeline. Follow the steps below to create them.

In the ADF portal, click the “Manage” icon on the left, then click “+ New” to create the Blob Storage linked service.

Search for “Azure Blob Storage”, then click “Continue”.

Fill in the required details for your storage account, test the connection, and then click “Apply”.

Similarly, create a second linked service, this time searching for “Azure Batch” (under the “Compute” tab).

Fill in the details of your Batch account, select the previously created Storage linked service under “Storage linked service name”, and then test the connection. Click “Save”.
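For reference, the two linked services end up as JSON definitions that you can inspect in the portal's code view. Below is a rough sketch of their shape, written as Python dictionaries. The linked service names and all `<...>` placeholders are hypothetical, and the property names are as I understand the ADF linked-service schema, so please compare them against what the portal shows for your own services.

```python
import json

# Hypothetical names; use whatever names you gave the linked services in the portal.
STORAGE_LS_NAME = "AzureBlobStorageLS"
BATCH_LS_NAME = "AzureBatchLS"

# Blob Storage linked service: essentially a wrapper around the connection string.
storage_linked_service = {
    "name": STORAGE_LS_NAME,
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {
            "connectionString": "<storage-account-connection-string>",
        },
    },
}

# Batch linked service: points at the Batch account and pool, and references
# the storage linked service created above.
batch_linked_service = {
    "name": BATCH_LS_NAME,
    "properties": {
        "type": "AzureBatch",
        "typeProperties": {
            "accountName": "<batch-account-name>",
            "accessKey": {"type": "SecureString", "value": "<batch-access-key>"},
            "batchUri": "<batch-account-url>",
            "poolName": "<pool-name>",
            "linkedServiceName": {
                "referenceName": STORAGE_LS_NAME,
                "type": "LinkedServiceReference",
            },
        },
    },
}

print(json.dumps(batch_linked_service, indent=2))
```

The point to notice is that the Batch linked service refers to the Storage linked service by name, so the Storage one has to exist first.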

Later, when you create the custom ADF pipeline, provide the Batch linked service name under the “Azure Batch” tab.

Under the “Settings” tab, provide the Storage linked service name and the other required information. In “Folder Path”, provide the Blob location that contains your main.py and iris.csv files.
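To make it easier to compare against your own pipeline, here is a sketch of how those two tabs map onto the Custom activity definition, again as a Python dictionary. The activity name, linked service names, and the `python main.py` command are assumptions for illustration; `missing_settings` is just a hypothetical helper that flags any of the settings from this walkthrough that were left empty.

```python
# Hypothetical Custom activity definition. The "Azure Batch" tab fills in
# linkedServiceName; the "Settings" tab fills in everything under typeProperties.
custom_activity = {
    "name": "RunPythonOnBatch",  # hypothetical activity name
    "type": "Custom",
    "linkedServiceName": {
        "referenceName": "AzureBatchLS",  # hypothetical Batch linked service name
        "type": "LinkedServiceReference",
    },
    "typeProperties": {
        "command": "python main.py",  # assumed command; adjust for your pool's OS
        "resourceLinkedService": {
            "referenceName": "AzureBlobStorageLS",  # hypothetical Storage linked service name
            "type": "LinkedServiceReference",
        },
        "folderPath": "<blob-location-with-main.py-and-iris.csv>",
    },
}


def missing_settings(activity: dict) -> list:
    """Return the settings from this walkthrough that are absent or empty."""
    props = activity.get("typeProperties", {})
    expected = {
        "linkedServiceName": activity.get("linkedServiceName"),
        "command": props.get("command"),
        "resourceLinkedService": props.get("resourceLinkedService"),
        "folderPath": props.get("folderPath"),
    }
    return [name for name, value in expected.items() if not value]


print(missing_settings(custom_activity))  # []
```

If your pipeline's code view shows one of these fields empty, that tab is the first place I would look.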

Once this is done, you can validate, debug, publish, and trigger the pipeline. The pipeline should run successfully.

Once the pipeline has run successfully, you will see the iris_setosa.csv file in your output Blob.
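If the run fails instead, the connection string pasted into main.py is worth re-checking first, since a truncated or partially copied value is an easy mistake. The snippet below is a minimal, standard-library sketch that only checks the format of a key-based connection string (it does not contact Azure). The function names and the sample value are hypothetical; a SAS-based connection string would carry `SharedAccessSignature` instead of `AccountKey`, so adjust `required` in that case.

```python
def parse_connection_string(conn_str: str) -> dict:
    """Split 'Key=Value;Key=Value' pairs into a dict, ignoring malformed segments."""
    parts = {}
    for segment in conn_str.strip().split(";"):
        # partition() splits on the first '=', so base64 keys ending in '==' stay intact.
        key, sep, value = segment.partition("=")
        if sep:
            parts[key.strip()] = value.strip()
    return parts


def missing_keys(conn_str: str, required=("AccountName", "AccountKey")) -> list:
    """Return the required keys that are absent or empty in the connection string."""
    parts = parse_connection_string(conn_str)
    return [key for key in required if not parts.get(key)]


# Made-up sample value, for illustration only.
sample = (
    "DefaultEndpointsProtocol=https;AccountName=mystorage;"
    "AccountKey=abc123==;EndpointSuffix=core.windows.net"
)
print(missing_keys(sample))  # []
print(missing_keys(""))      # ['AccountName', 'AccountKey']
```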