I've been trying to find the top 3 most frequent restaurant names under each type of restaurant.

The columns are:

- `rest_type`: the type of restaurant
- `name`: the name of the restaurant
- `url`: the column used for counting occurrences

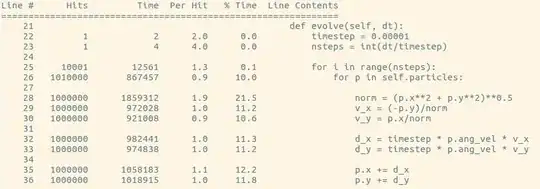

This was the code that ended up working for me after some searching:

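```python
import pandas as pd

# Count non-null values per (rest_type, name) pair
df_1 = df.groupby(['rest_type', 'name']).agg('count')
# Keep the 3 names with the highest 'url' count within each rest_type
datas = (df_1.groupby(['rest_type'], as_index=False)
             .apply(lambda x: x.sort_values(by='url', ascending=False).head(3))['url']
             .reset_index().rename(columns={'url': 'count'}))
```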
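For comparison, here is a sketch of an alternative that avoids `apply` altogether: count per (`rest_type`, `name`) pair, sort, then take the first 3 rows of each group with `groupby(...).head(3)`. The toy data is hypothetical, and `counts` and `top3` are illustrative names. Counting with `['url'].count()` is meant to match what `agg('count')` does for the `url` column (non-null values only).

```python
import pandas as pd

# Hypothetical toy data with the three columns described above
df = pd.DataFrame({
    'rest_type': ['Cafe'] * 10 + ['Bar'] * 3,
    'name': ['A', 'A', 'A', 'B', 'B', 'C', 'D', 'D', 'D', 'D', 'E', 'E', 'F'],
    'url': [f'u{i}' for i in range(13)],
})

# Count non-null 'url' values per (rest_type, name) and make it a flat frame
counts = (df.groupby(['rest_type', 'name'])['url']
            .count()
            .reset_index(name='count'))

# Sort by type, then by count descending; head(3) keeps the first 3 rows
# of each rest_type group in that sorted order
top3 = (counts.sort_values(['rest_type', 'count'], ascending=[True, False])
              .groupby('rest_type')
              .head(3)
              .reset_index(drop=True))
print(top3)
```

Because `head` only filters rows and does not prepend a group level to the index, no `level_0` column shows up in this version.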

The final output was as follows:

I have a few questions about the code above:

How is it possible to group by `rest_type` again when creating `datas`, after it was already used as a grouping key for `df_1`? Shouldn't that raise a missing-column error? The second `groupby` operation is a bit confusing to me.
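A minimal sketch on hypothetical toy data, showing where `rest_type` ends up after the first `groupby`: it is no longer a column of `df_1`, but it is still the name of an index level, and `groupby` accepts index level names as keys.

```python
import pandas as pd

# Hypothetical toy data standing in for the real restaurant dataset
df = pd.DataFrame({
    'rest_type': ['Cafe', 'Cafe', 'Cafe', 'Bar', 'Bar'],
    'name': ['A', 'A', 'B', 'C', 'C'],
    'url': ['u1', 'u2', 'u3', 'u4', 'u5'],
})

df_1 = df.groupby(['rest_type', 'name']).agg('count')

# 'rest_type' is no longer a column of df_1 ...
print(list(df_1.columns))
# ... but it is still the name of an index level
print(list(df_1.index.names))

# groupby() accepts a string naming either a column or an index level
# (pandas raises an error if a name is both), so these are equivalent
by_name = df_1.groupby('rest_type')['url'].sum()
by_level = df_1.groupby(level='rest_type')['url'].sum()
print(by_name.equals(by_level))
```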

What does the first generated column, `level_0`, signify? I tried the code with `as_index=True`, and it created both an index level and a column for `rest_type`, so I couldn't reset the index. Output below:
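A sketch on hypothetical toy data comparing three variants of the second step. My understanding of recent pandas versions (this may differ between releases) is: with `as_index=False`, `apply` prepends an unnamed outer index level that numbers the groups 0, 1, ..., and `reset_index()` turns that unnamed level into `level_0`; with the default `as_index=True`, the prepended level is the group key itself, so `rest_type` appears twice in the index. Passing `group_keys=False` (an existing `groupby` parameter) skips the extra level. `top3` is a hypothetical helper name.

```python
import pandas as pd

# Hypothetical toy data; 'Cafe' has 4 names, so head(3) actually drops one
df = pd.DataFrame({
    'rest_type': ['Cafe'] * 10 + ['Bar'] * 3,
    'name': ['A', 'A', 'A', 'B', 'B', 'C', 'D', 'D', 'D', 'D', 'E', 'E', 'F'],
    'url': [f'u{i}' for i in range(13)],
})
df_1 = df.groupby(['rest_type', 'name']).agg('count')

def top3(x):
    # Keep the 3 names with the highest 'url' count within one rest_type group
    return x.sort_values(by='url', ascending=False).head(3)

# as_index=False: an unnamed group-number level is prepended to the index,
# which reset_index() exposes as the 'level_0' column
res_false = df_1.groupby(['rest_type'], as_index=False).apply(top3)
print(list(res_false['url'].reset_index().columns))

# as_index=True (default): the group key itself is prepended, so the index
# names contain 'rest_type' twice and reset_index() cannot create both columns
res_true = df_1.groupby(['rest_type']).apply(top3)
print(list(res_true.index.names))

# group_keys=False: no level is prepended, the (rest_type, name) index is kept
datas = (df_1.groupby('rest_type', group_keys=False)
             .apply(top3)['url']
             .reset_index()
             .rename(columns={'url': 'count'}))
print(datas)
```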

Thank you