I have expanded on the excellent answer from Ann Zen.

There are two variables that you will have to tweak:

- `threshold`: contours with an area at or below this threshold are discarded
- `row_amt`: the number of rows of text in your image

The concept Ann Zen used is to divide the image into `row_amt` horizontal segments along the y axis (more precisely, the vertical span between the top of the highest contour and the bottom of the lowest one), forming one segment per row. For each segment, find every shape whose center lies in that segment. Finally, order the shapes in each segment by their x coordinate.
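The idea can be sketched without OpenCV, using only NumPy on a list of `(x, y)` centers. This is a minimal illustration that assumes the centers are already computed; `group_into_rows` and the sample points are made up for the example. It computes a band index with `floor` and `clip` instead of the mask comparison used further down, and takes the vertical bounds from the centers rather than from the bounding boxes.

```python
import numpy as np


def group_into_rows(centers, row_amt):
    """Assign each (x, y) center to one of row_amt horizontal bands, then sort each band by x."""
    pts = np.asarray(centers, dtype=float)
    min_y, max_y = pts[:, 1].min(), pts[:, 1].max()
    # band height; fall back to 1.0 if every center has the same y (avoids 0/0)
    row_h = (max_y - min_y) / row_amt or 1.0
    # band index of every center, clipped so the bottom-most center lands in the last band
    band = np.clip(np.floor((pts[:, 1] - min_y) / row_h), 0, row_amt - 1).astype(int)
    rows = []
    for i in range(row_amt):
        in_band = pts[band == i]
        # order the shapes in this band from left to right
        rows.append(in_band[in_band[:, 0].argsort()].astype(int).tolist())
    return rows


# two rows of "words", listed in scrambled order
centers = [(300, 52), (40, 50), (170, 48), (120, 151), (20, 149), (260, 150)]
print(group_into_rows(centers, row_amt=2))
# [[[40, 50], [170, 48], [300, 52]], [[20, 149], [120, 151], [260, 150]]]
```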

- Import the necessary libraries. I have a `DEBUG` flag that will show some extra features which can help during debugging:

```python
import cv2
import numpy as np
from collections import OrderedDict

DEBUG = False
```

- Define a function that takes an image and returns a processed binary image from which the contours can later be retrieved:

```python
def process_img(image):
    # grayscale
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

    # even out the lighting: divide by a dilated (local-maximum) background estimate
    se = cv2.getStructuringElement(cv2.MORPH_RECT, (8, 8))
    bg = cv2.morphologyEx(gray, cv2.MORPH_DILATE, se)
    out_gray = cv2.divide(gray, bg, scale=255)
    out_binary = cv2.threshold(out_gray, 0, 255, cv2.THRESH_OTSU)[1]

    # binary, inverted so the text is white on a black background
    (ret, thresh) = cv2.threshold(out_binary, 127, 255, cv2.THRESH_BINARY_INV)

    # opening: remove small specks of noise
    kernel = np.ones((3, 3), np.uint8)
    opening = cv2.morphologyEx(thresh, cv2.MORPH_OPEN, kernel)

    # dilation: a kernel width of 40 for segmenting words, 15 for letters
    kernel = np.ones((5, 40), np.uint8)
    img_dilation = cv2.dilate(opening, kernel, iterations=1)

    return img_dilation
```
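The first lines of `process_img` flatten uneven lighting: dilating a grayscale image replaces each pixel with the brightest value in its neighbourhood, which approximates the paper background, and dividing by that background pushes the paper towards 255 while the ink stays dark. Below is a NumPy-only sketch of that step, assuming an 8×8 window and edge padding; it only approximates `MORPH_DILATE` plus `cv2.divide(gray, bg, scale=255)`, guards against division by zero in its own way, and needs NumPy 1.20+ for `sliding_window_view`. `flatten_background` is a hypothetical name.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def flatten_background(gray, k=8):
    """Divide a grayscale image by its local-maximum background estimate."""
    # pad with edge values so every pixel gets a full k x k window
    pad = (k // 2, k - 1 - k // 2)
    padded = np.pad(gray, (pad, pad), mode="edge")
    # the brightest pixel in each window approximates the paper background
    bg = sliding_window_view(padded, (k, k)).max(axis=(-2, -1))
    # scale so background pixels end up near 255; never divide by zero
    out = gray.astype(float) / np.maximum(bg, 1) * 255
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)


# a left-to-right lighting gradient (100 to 200) with a small dark "ink" blob
gray = np.tile(np.linspace(100, 200, 40), (40, 1)).astype(np.uint8)
gray[18:20, 18:20] = 20
flat = flatten_background(gray)
# after flattening, the background is close to 255 across the whole width
# while the blob stays dark
print(gray[0, 0], gray[0, -1], flat[0, 0], flat[0, -1], flat.min())
```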

- Define a function that will return the center of a contour:

```python
def get_centeroid(cnt):
    # mean of all the points on the contour's outline
    length = len(cnt)
    sum_x = np.sum(cnt[..., 0])
    sum_y = np.sum(cnt[..., 1])
    return int(sum_x / length), int(sum_y / length)
```
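`get_centeroid` averages the points along the outline (with `CHAIN_APPROX_NONE`, every pixel of the contour), so the result depends on where those points lie rather than on the enclosed area. For compact word blobs this is close to the true area centroid, but it can drift for irregular shapes. If you want the area centroid, OpenCV's `cv2.moments` gives it as `m10 / m00`, `m01 / m00`. The sketch below computes the same quantity for a polygon with the shoelace formula so the difference can be checked without OpenCV; `polygon_centroid` is a made-up helper, not part of the answer.

```python
import numpy as np


def polygon_centroid(points):
    """Area centroid of a simple polygon given by its vertices (shoelace formula)."""
    # accept OpenCV-style (N, 1, 2) contours as well as plain (N, 2) point lists
    pts = np.asarray(points, dtype=float).reshape(-1, 2)
    x, y = pts[:, 0], pts[:, 1]
    x_next, y_next = np.roll(x, -1), np.roll(y, -1)
    cross = x * y_next - x_next * y
    area = cross.sum() / 2  # signed; the sign cancels in the divisions below
    cx = ((x + x_next) * cross).sum() / (6 * area)
    cy = ((y + y_next) * cross).sum() / (6 * area)
    return cx, cy


# an L-shaped outline made of a 4x1 bar and a 1x3 bar
l_shape = [(0, 0), (4, 0), (4, 1), (1, 1), (1, 4), (0, 4)]
print(np.mean(l_shape, axis=0))   # plain mean of the listed points: about (1.67, 1.67)
print(polygon_centroid(l_shape))  # area centroid: about (1.36, 1.36)
```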

- Define a function that returns all the contours whose area is above a threshold:

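```python
def get_contours(processed_img, threshold):
    # OpenCV 4.x returns (contours, hierarchy), OpenCV 3.x returns
    # (image, contours, hierarchy); [-2] picks the contours in both cases
    contours = cv2.findContours(processed_img, cv2.RETR_EXTERNAL,
                                cv2.CHAIN_APPROX_NONE)[-2]
    return [cnt for cnt in contours if cv2.contourArea(cnt) > threshold]
```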

- Define a function that will take in a list of contours and return the center points of the shapes found in the image:

```python
def get_centers(contours):
    return [get_centeroid(cnt) for cnt in contours]
```

- Define a function that finds the upper and lower bounds of the contours along the y axis. Based on the number of rows you provide, it splits the area between these bounds into `row_amt` rows of height `row_h`.

```python
def get_bounds(contours, img, row_amt):
    min_y = img.shape[0]
    max_y = 0

    for ctr in contours:
        (x, y, w, h) = cv2.boundingRect(ctr)
        if y < min_y:
            min_y = y
        if y + h > max_y:
            max_y = y + h

    row_h = (max_y - min_y) / row_amt

    if DEBUG:
        line_thickness = 2
        x1 = 0
        x2 = img.shape[1]
        for i in range(row_amt + 1):
            y1 = y2 = int(min_y + row_h * i)
            cv2.line(img, (x1, y1), (x2, y2), (0, 255, 0),
                     thickness=line_thickness)

    return (min_y, max_y, row_h)
```
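To make the band arithmetic concrete, here it is with made-up bounds (`min_y = 100`, `max_y = 800`, `row_amt = 7`; illustrative values, not measured from the example image). `row_h` comes out at 100 pixels, and the band edges, which are where the green `DEBUG` lines are drawn, fall every 100 pixels.

```python
import numpy as np

min_y, max_y, row_amt = 100, 800, 7  # illustrative values only
row_h = (max_y - min_y) / row_amt
# same expression get_bounds uses for the DEBUG lines: min_y + row_h * i
edges = [int(min_y + row_h * i) for i in range(row_amt + 1)]
print(row_h, edges)  # 100.0 [100, 200, 300, 400, 500, 600, 700, 800]
# np.linspace produces the same edges in one call
print(np.linspace(min_y, max_y, row_amt + 1).astype(int).tolist())
```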

- Define a generator function that takes an image array, `img`, the number of segments for the image, `row_amt`, and a `threshold` (contours with an area at or below this threshold are discarded). It yields `row_amt` `OrderedDict`s, one per row. In each `OrderedDict`, the keys are the centers in that row, sorted by x coordinate, and each value is the corresponding contour.

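```python
def get_rows(img, row_amt, threshold):
    processed_img = process_img(img)
    contours = get_contours(processed_img, threshold)
    if not contours:
        return  # nothing found: yield no rows

    centers = get_centers(contours)
    centers_to_contours = dict(zip(centers, contours))
    centers = np.array(centers)
    min_y, max_y, row_h = get_bounds(contours, img, row_amt)

    for i in range(row_amt):
        # distance of every center below the top of band i
        f = centers[:, 1] - min_y - row_h * i
        # centers inside band i (>= 0 so a center on a band edge is not dropped)
        a = centers[(f >= 0) & (f < row_h)]
        # sort the centers of this band by x
        c = a[a[:, 0].argsort()]
        od = OrderedDict()
        for (cx, cy) in c.tolist():  # tolist() gives plain Python ints
            od[(cx, cy)] = centers_to_contours[(cx, cy)]
        yield od
```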
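Since Python 3.7 a plain `dict` is guaranteed to keep insertion order, so `OrderedDict` is not strictly required here. A small sketch of building one row's mapping that way, with made-up centers and placeholder strings standing in for real contours:

```python
# hypothetical center -> contour mapping for one row; strings stand in for contours
row_items = {(300, 52): "contour C", (40, 50): "contour A", (170, 48): "contour B"}

# insert in order of increasing x; dicts preserve insertion order on Python 3.7+
row = {center: row_items[center] for center in sorted(row_items, key=lambda c: c[0])}
print(list(row.values()))  # ['contour A', 'contour B', 'contour C']
```

Keeping `OrderedDict` is still harmless and makes the ordering intent explicit.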

- Read in the image, loop over the rows, and within each row loop over the centers/contours, drawing the rectangles and numbers:

```python
img = cv2.imread('RrU0o.jpg')
if img is None:
    raise FileNotFoundError("could not read 'RrU0o.jpg'")

count = 0
for row in get_rows(img, row_amt=7, threshold=1330):
    if DEBUG and row:
        # cv2.polylines expects int32 points
        centerpoints = np.array(list(row.keys()), dtype=np.int32)
        cv2.polylines(img, [centerpoints], False, (255, 0, 255), 2)

    for ((x, y), ctr) in row.items():
        count += 1
        if DEBUG:
            cv2.circle(img, (x, y), 10, (0, 0, 255), -1)
        cv2.putText(img, f'#{count}', (x - 10, y + 5), cv2.FONT_HERSHEY_SIMPLEX, 1.0, (0, 0, 0), 2)
        (x, y, w, h) = cv2.boundingRect(ctr)
        cv2.rectangle(img, (x, y), (x + w, y + h), (90, 0, 255), 2)
```

- Finally, show the image:

```python
cv2.imshow("Final", img)
cv2.waitKey(0)
```

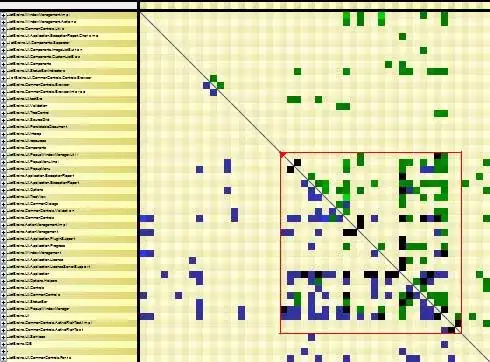

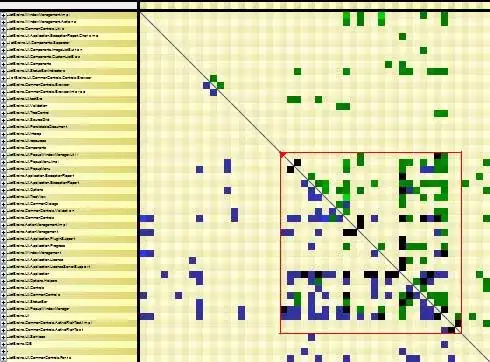

Result:

Result with DEBUG = True.

- Green horizontal lines show the segments

- Red dots show the center of the contours

- Pink line connects the centers in a segment in order

Altogether:

```python
#!/usr/bin/python
import cv2
import numpy as np
from collections import OrderedDict

DEBUG = False


def process_img(image):
    # grayscale
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

    # even out the lighting: divide by a dilated (local-maximum) background estimate
    se = cv2.getStructuringElement(cv2.MORPH_RECT, (8, 8))
    bg = cv2.morphologyEx(gray, cv2.MORPH_DILATE, se)
    out_gray = cv2.divide(gray, bg, scale=255)
    out_binary = cv2.threshold(out_gray, 0, 255, cv2.THRESH_OTSU)[1]

    # binary, inverted so the text is white on a black background
    (ret, thresh) = cv2.threshold(out_binary, 127, 255, cv2.THRESH_BINARY_INV)

    # opening: remove small specks of noise
    kernel = np.ones((3, 3), np.uint8)
    opening = cv2.morphologyEx(thresh, cv2.MORPH_OPEN, kernel)

    # dilation: a kernel width of 40 for segmenting words, 15 for letters
    kernel = np.ones((5, 40), np.uint8)
    img_dilation = cv2.dilate(opening, kernel, iterations=1)

    return img_dilation


def get_centeroid(cnt):
    # mean of all the points on the contour's outline
    length = len(cnt)
    sum_x = np.sum(cnt[..., 0])
    sum_y = np.sum(cnt[..., 1])
    return (int(sum_x / length), int(sum_y / length))


def get_contours(processed_img, threshold):
    # [-2] picks the contours in both OpenCV 3.x and 4.x
    contours = cv2.findContours(processed_img, cv2.RETR_EXTERNAL,
                                cv2.CHAIN_APPROX_NONE)[-2]
    return [cnt for cnt in contours if cv2.contourArea(cnt) > threshold]


def get_centers(contours):
    return [get_centeroid(cnt) for cnt in contours]


def get_bounds(contours, img, row_amt):
    min_y = img.shape[0]
    max_y = 0

    for ctr in contours:
        (x, y, w, h) = cv2.boundingRect(ctr)
        if y < min_y:
            min_y = y
        if y + h > max_y:
            max_y = y + h

    row_h = (max_y - min_y) / row_amt

    if DEBUG:
        line_thickness = 2
        x1 = 0
        x2 = img.shape[1]
        for i in range(row_amt + 1):
            y1 = y2 = int(min_y + row_h * i)
            cv2.line(img, (x1, y1), (x2, y2), (0, 255, 0),
                     thickness=line_thickness)

    return (min_y, max_y, row_h)


def get_rows(img, row_amt, threshold):
    processed_img = process_img(img)
    contours = get_contours(processed_img, threshold)
    if not contours:
        return  # nothing found: yield no rows

    centers = get_centers(contours)
    centers_to_contours = dict(zip(centers, contours))
    centers = np.array(centers)
    (min_y, max_y, row_h) = get_bounds(contours, img, row_amt)

    for i in range(row_amt):
        # distance of every center below the top of band i
        f = centers[:, 1] - min_y - row_h * i
        # centers inside band i (>= 0 so a center on a band edge is not dropped)
        a = centers[(f >= 0) & (f < row_h)]
        # sort the centers of this band by x
        c = a[a[:, 0].argsort()]
        od = OrderedDict()
        for (cx, cy) in c.tolist():  # tolist() gives plain Python ints
            od[(cx, cy)] = centers_to_contours[(cx, cy)]
        yield od


img = cv2.imread('RrU0o.jpg')
if img is None:
    raise FileNotFoundError("could not read 'RrU0o.jpg'")

count = 0
for row in get_rows(img, row_amt=7, threshold=1330):
    if DEBUG and row:
        # cv2.polylines expects int32 points
        centerpoints = np.array(list(row.keys()), dtype=np.int32)
        cv2.polylines(img, [centerpoints], False, (255, 0, 255), 2)

    for ((x, y), ctr) in row.items():
        count += 1
        if DEBUG:
            cv2.circle(img, (x, y), 10, (0, 0, 255), -1)
        cv2.putText(img, f'#{count}', (x - 10, y + 5), cv2.FONT_HERSHEY_SIMPLEX, 1.0, (0, 0, 0), 2)
        (x, y, w, h) = cv2.boundingRect(ctr)
        cv2.rectangle(img, (x, y), (x + w, y + h), (90, 0, 255), 2)

# in Google Colab, cv2.imshow is not available; use
# google.colab.patches.cv2_imshow(img) there instead
cv2.imshow("Final", img)
cv2.waitKey(0)
```