I am trying to use a perspective transformation in OpenCV to unshear an image. I convert the image to black and white and pass it to the model, which works fine: it displays the white mask, which is the actual output. How can I display the original object from the image instead of the mask? When I try, it throws an index error.

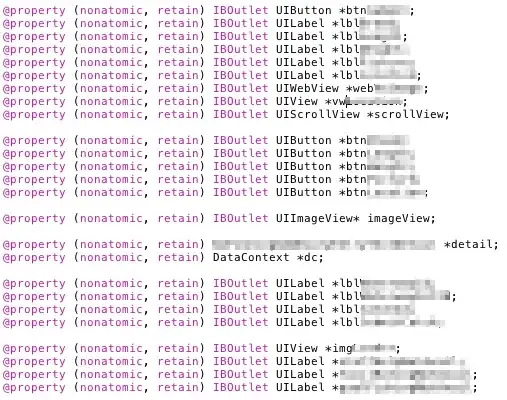

**Code:**

```python
import cv2
import numpy as np


def find_corners(im):
    # Find contours in img.
    cnts = cv2.findContours(im, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)[-2]  # [-2] indexing takes the return value before last (due to OpenCV version compatibility issues).

    # Find the contour with the maximum area (required if there is more than one contour).
    c = max(cnts, key=cv2.contourArea)

    epsilon = 0.1 * cv2.arcLength(c, True)
    box = cv2.approxPolyDP(c, epsilon, True)

    tmp_im = cv2.cvtColor(im, cv2.COLOR_GRAY2BGR)
    cv2.drawContours(tmp_im, [box], 0, (0, 255, 0), 2)
    cv2.imshow("tmp_im", tmp_im)

    box = np.squeeze(box).astype(np.float32)

    # Sort the points in the order top-left, top-right, bottom-right, bottom-left.
    # Find the center of the contour.
    M = cv2.moments(c)
    cx = M['m10'] / M['m00']
    cy = M['m01'] / M['m00']
    center_xy = np.array([cx, cy])

    cbox = box - center_xy  # Subtract the center from each corner
    ang = np.arctan2(cbox[:, 1], cbox[:, 0]) * 180 / np.pi  # Compute the angles from the center to each corner

    # Sort the corners of box by angle (the image y axis points down, so this is clockwise on screen).
    box = box[ang.argsort()]

    # Reorder points: top-left, top-right, bottom-left, bottom-right
    print('bbox', box)
    coor = np.float32([box[0], box[1], box[3], box[2]])
    return coor


input_image2 = cv2.imread("/home/hamza/Desktop/cards/card2.jpeg", cv2.IMREAD_GRAYSCALE)  # Read image as grayscale
input_image2 = cv2.threshold(input_image2, 0, 255, cv2.THRESH_OTSU)[1]  # Convert to binary image (just in case...)

# Find the corners of the card, and sort them
orig_im_coor = find_corners(input_image2)

height, width = 450, 350
new_image_coor = np.float32([[0, 0], [width, 0], [0, height], [width, height]])

P = cv2.getPerspectiveTransform(orig_im_coor, new_image_coor)
perspective = cv2.warpPerspective(input_image2, P, (width, height))

cv2.imshow("Perspective transformation", perspective)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

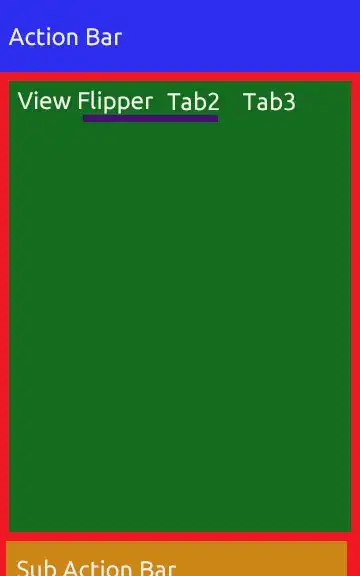

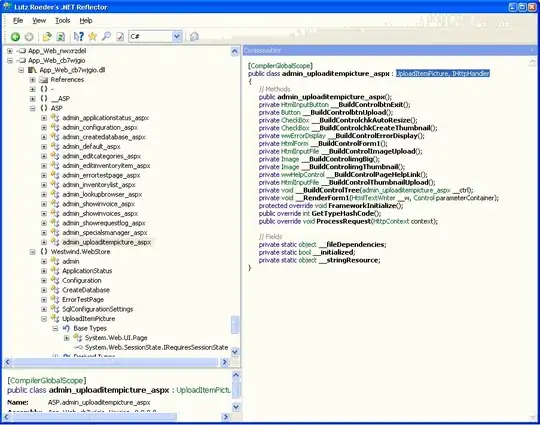

Original Image

Black and white image

Expected output: The code gives me the white mask, which is fine, but I want the region of the original image that corresponds to that mask. How can I access it?

If it is not possible with this approach, how can I do it with another approach?

Edit 1: The code now returns the unsheared output shown in the image below. I need to apply the mask, which I computed from the same input image, to that output image.

Here is the output the code returns now. The output image below is white, and so is the page background, so click on the image to see it properly: