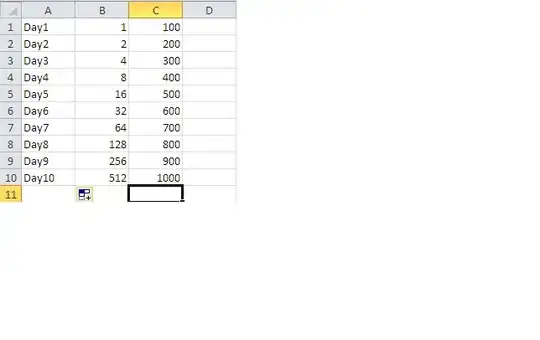

I made an algorithm to measure an object using a reference, like this:

The reference is the frame and the other (AOL) is the desired object. My code obtained this result:

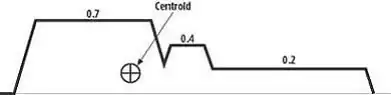

But the real AOL is 78.6 cm². The difference comes from the perspective/angle of the photograph. In my code I use deep learning to obtain the masks of the reference and of the AOL. Since I know the actual size of the reference, I compute the AOL area (cm²) with a simple calculation based on the number of pixels in each mask. I tried to correct the angle/perspective based on the reference, using the reference mask:

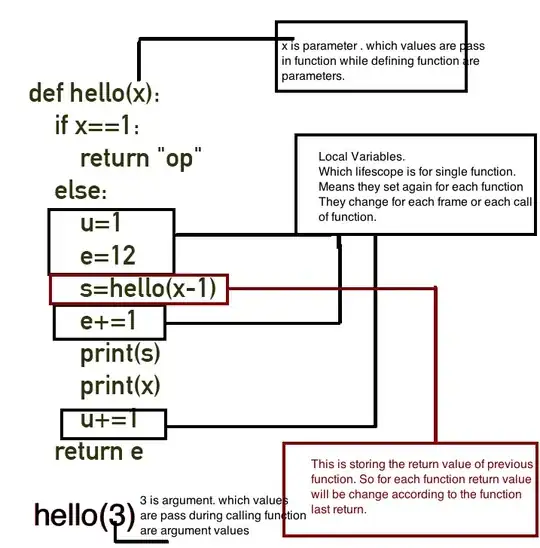
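A minimal NumPy sketch of the pixel-count calculation described above, assuming both masks come from the same image and that the physical area covered by the reference mask is known. The function name `area_from_masks` and the parameter `ref_area_cm2` are hypothetical. The scaling is only valid if every pixel covers the same physical area, which is exactly the assumption that perspective breaks.

```python
import numpy as np


def area_from_masks(obj_mask: np.ndarray, ref_mask: np.ndarray, ref_area_cm2: float) -> float:
    """Estimate the object's area by scaling the known reference area by the pixel-count ratio.

    Assumes both masks come from the same image and that each pixel covers the
    same physical area (no perspective distortion).
    """
    obj_px = np.count_nonzero(obj_mask)
    ref_px = np.count_nonzero(ref_mask)
    if ref_px == 0:
        raise ValueError("reference mask is empty")
    cm2_per_px = ref_area_cm2 / ref_px  # physical area of one pixel
    return obj_px * cm2_per_px


# Example: a reference mask of 100 x 160 px covering 160 cm^2 gives 0.01 cm^2 per pixel,
# so a 50 x 40 px object mask corresponds to 20 cm^2.
ref = np.zeros((300, 300), dtype=np.uint8)
ref[0:100, 0:160] = 1
obj = np.zeros((300, 300), dtype=np.uint8)
obj[150:200, 150:190] = 1
print(area_from_masks(obj, ref, 160.0))
```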

I tried to calculate the quadrangle vertices from the reference mask to correct the perspective. I wrote this code based on the post "Perspective correction in OpenCV using python":

```python
# import the necessary packages
from scipy.spatial import distance as dist
from imutils import perspective
from imutils import contours
import numpy as np
import imutils
import cv2
import math
import matplotlib.pyplot as plt

# load the mask image, convert it to grayscale, and blur it slightly
image = cv2.imread("./ref/20210702_114527.png")  # mask image
gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
gray = cv2.GaussianBlur(gray, (7, 7), 0)

# perform edge detection, then perform a dilation + erosion to
# close gaps in between object edges
edged = cv2.Canny(gray, 50, 100)
edged = cv2.dilate(edged, None, iterations=1)
edged = cv2.erode(edged, None, iterations=1)

# find the external contours in the edge map
cnts = cv2.findContours(edged.copy(), cv2.RETR_EXTERNAL,
                        cv2.CHAIN_APPROX_SIMPLE)
cnts = imutils.grab_contours(cnts)

# sort the contours from left to right and initialize the
# 'pixels per metric' calibration variable
(cnts, _) = contours.sort_contours(cnts)
pixelsPerMetric = None

orig = image.copy()
# take the largest contour (the reference) and fit a rotated bounding box to it
box = cv2.minAreaRect(max(cnts, key=cv2.contourArea))
box = cv2.cv.BoxPoints(box) if imutils.is_cv2() else cv2.boxPoints(box)
box = np.array(box, dtype="int")

# order the points in the contour such that they appear
# in top-left, top-right, bottom-right, and bottom-left
# order, then draw the outline of the rotated bounding box
box = perspective.order_points(box)
cv2.drawContours(orig, [box.astype("int")], -1, (0, 255, 0), 2)

# loop over the original points and draw them
for (x, y) in box:
    cv2.circle(orig, (int(x), int(y)), 5, (0, 0, 255), -1)

print('Box: ', box)
cv2.imshow('Orig', orig)
cv2.waitKey(0)  # the window is not rendered without a waitKey call

img = cv2.imread("./meat/sair/20210702_114527.jpg")  # original image
rows, cols, ch = img.shape

# pts1 = np.float32([[185, 9], [304, 80], [290, 134], [163, 64]])  # looked good on 6e.jpg

### Collect the points (the mask and the original image must have the same size)
pts1 = np.float32(box)

### Draw the vertices on the original image
for (x, y) in pts1:
    cv2.circle(img, (int(x), int(y)), 5, (0, 0, 255), -1)

ratio = 1.6  # assumed width/height ratio of the reference frame
# length of the right edge (top-right -> bottom-right)
moldH = math.hypot(pts1[2][0] - pts1[1][0], pts1[2][1] - pts1[1][1])
moldW = ratio * moldH

# destination: an axis-aligned rectangle anchored at the top-left vertex
pts2 = np.float32([[pts1[0][0], pts1[0][1]],
                   [pts1[0][0] + moldW, pts1[0][1]],
                   [pts1[0][0] + moldW, pts1[0][1] + moldH],
                   [pts1[0][0], pts1[0][1] + moldH]])

M = cv2.getPerspectiveTransform(pts1, pts2)
print('M:', M)
print('pts1:', pts1)
print('pts2:', pts2)

offsetSize = 320
# warpPerspective expects dsize as (width, height)
dsize = (int(moldW + offsetSize), int(moldH + offsetSize))
dst = cv2.warpPerspective(img, M, dsize)

# OpenCV loads images as BGR; convert to RGB before showing them with matplotlib
plt.subplot(121), plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB)), plt.title('Input')
plt.subplot(122), plt.imshow(cv2.cvtColor(dst, cv2.COLOR_BGR2RGB)), plt.title('Output')
plt.show()
```

And I got this:

There is no perspective correction. I have a lot of information, such as the vertices and the real size of the reference. Is it possible to do a mathematical correction based on the quadrangle vertices, like a regression? It doesn't have to correct the image itself, unless there is a good method to correct the perspective of the image. Or maybe a different, math-based approach? Thanks for your patience.
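One math-only approach is sketched below; it is an assumption, not something taken from the code above. With the four true corners of the reference frame in the image and the frame's real width and height, a homography from image pixels to centimetres on the frame's plane can be estimated with the direct linear transform (DLT), which is the same 3x3 model that `cv2.getPerspectiveTransform` solves for four points. Mapping the AOL outline through it and applying the shoelace formula gives an area in cm² without warping the image. The helper names and `ref_size_cm` are hypothetical; the corners must be ordered top-left, top-right, bottom-right, bottom-left, and the AOL is assumed to lie (roughly) in the same plane as the frame.

```python
import numpy as np


def homography_dlt(src, dst):
    """Estimate the 3x3 homography H with dst ~ H @ [x, y, 1] via the DLT.

    src, dst: (N, 2) arrays of corresponding points, N >= 4. With exactly four
    points the fit is exact; with more it is a least-squares fit (SVD).
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # The solution is the right singular vector with the smallest singular value
    _, _, vt = np.linalg.svd(np.array(rows))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]


def apply_homography(H, pts):
    """Map (N, 2) points through H, including the perspective division."""
    pts = np.asarray(pts, dtype=float).reshape(-1, 2)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


def polygon_area(pts):
    """Shoelace formula for a simple polygon given as ordered (N, 2) vertices."""
    x, y = pts[:, 0], pts[:, 1]
    return 0.5 * abs(np.dot(x, np.roll(y, -1)) - np.dot(y, np.roll(x, -1)))


def corrected_area_cm2(ref_corners_px, ref_size_cm, contour_px):
    """Area of an outline in cm^2, measured on the plane of the reference frame.

    ref_corners_px: frame corners in the image, ordered tl, tr, br, bl.
    ref_size_cm:    (width, height) of the frame in cm (hypothetical input).
    contour_px:     AOL outline in pixels, e.g. an OpenCV contour of shape (N, 1, 2).
    """
    w, h = ref_size_cm
    world = np.array([[0, 0], [w, 0], [w, h], [0, h]], dtype=float)
    H = homography_dlt(ref_corners_px, world)  # image px -> cm on the frame plane
    return polygon_area(apply_homography(H, contour_px))
```

The four corners have to be the frame's actual corners as they appear in the image (for example from `cv2.approxPolyDP` on the reference contour, keeping the result only if it has four points). The corners of a `cv2.minAreaRect` box always form a rectangle, so they carry no perspective information. With more than four point pairs, the SVD gives a least-squares fit, which is the "regression" flavour asked about above; for noisy points, normalizing the coordinates first (Hartley normalization) is usually recommended.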

For Christoph:

These are the resulting vertex positions:

```
pts1: [[ 9. 51.]
 [392. 56.]
 [388. 336.]
 [ 5. 331.]]
```
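The printed vertices can be checked with a short NumPy sketch (not part of the original code). It rebuilds `pts2` the same way the code in the question does and shows that both quadrilaterals are parallelograms: opposite sides are equal (about 383 px and 280 px) and the corner angle is within a tenth of a degree of 90°. This is expected, because `cv2.minAreaRect` always returns a rotated rectangle. The unique perspective transform that maps one parallelogram onto another is an affine map, so `M` can only rotate, scale, and shear the image; it cannot remove perspective distortion. The helper `is_parallelogram` is hypothetical.

```python
import numpy as np

# Vertices printed above (tl, tr, br, bl), from cv2.boxPoints + order_points
pts1 = np.array([[9, 51], [392, 56], [388, 336], [5, 331]], dtype=float)

# Rebuild pts2 exactly as the code in the question does
ratio = 1.6
moldH = np.linalg.norm(pts1[2] - pts1[1])
moldW = ratio * moldH
x0, y0 = pts1[0]
pts2 = np.array([[x0, y0], [x0 + moldW, y0],
                 [x0 + moldW, y0 + moldH], [x0, y0 + moldH]])


def is_parallelogram(q, tol=1e-6):
    """True if the ordered quad (tl, tr, br, bl) has pairwise equal opposite edges."""
    return (np.allclose(q[1] - q[0], q[2] - q[3], atol=tol)
            and np.allclose(q[3] - q[0], q[2] - q[1], atol=tol))


# Edge vectors (top, right, bottom, left), their lengths, and the angle at the top-right corner
edges = np.roll(pts1, -1, axis=0) - pts1
lengths = np.linalg.norm(edges, axis=1)
cos_tr = np.dot(-edges[0], edges[1]) / (lengths[0] * lengths[1])
angle_tr = np.degrees(np.arccos(cos_tr))

print("side lengths:", np.round(lengths, 2))
print("angle at top-right: %.2f deg" % angle_tr)
print("pts1 parallelogram:", is_parallelogram(pts1), "| pts2 parallelogram:", is_parallelogram(pts2))
```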