I have some code that collects all of the results URLs from one page of the "oddsportal" website:

```python
from bs4 import BeautifulSoup
import requests

# Send a browser-like User-Agent with the request.
headers = {
    'User-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.114 Safari/537.36'
}

source = requests.get("https://www.oddsportal.com/soccer/africa/africa-cup-of-nations/results/", headers=headers)
soup = BeautifulSoup(source.text, 'html.parser')

# The season links are inside this menu div.
main_div = soup.find("div", class_="main-menu2 main-menu-gray")
a_tag = main_div.find_all("a")

# Print the (relative) href of each season link.
for i in a_tag:
    print(i['href'])
```

which prints these results:

```
/soccer/africa/africa-cup-of-nations/results/
/soccer/africa/africa-cup-of-nations-2019/results/
/soccer/africa/africa-cup-of-nations-2017/results/
/soccer/africa/africa-cup-of-nations-2015/results/
/soccer/africa/africa-cup-of-nations-2013/results/
/soccer/africa/africa-cup-of-nations-2012/results/
/soccer/africa/africa-cup-of-nations-2010/results/
/soccer/africa/africa-cup-of-nations-2008/results/
```
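The hrefs above are relative paths, so one way to turn them into full URLs is to resolve them against the site root with the standard library's `urllib.parse.urljoin`. The sketch below hard-codes a few of the printed hrefs for illustration; inside the original loop the same idea would be `print(urljoin(source.url, i['href']))`, since `source.url` is the URL that `requests` actually fetched. `BASE_URL` is an assumed name, not something from the original code.

```python
from urllib.parse import urljoin

# Assumed site root; relative hrefs are resolved against it.
BASE_URL = "https://www.oddsportal.com/"

# A few of the relative hrefs printed by the loop above.
relative_hrefs = [
    "/soccer/africa/africa-cup-of-nations/results/",
    "/soccer/africa/africa-cup-of-nations-2019/results/",
    "/soccer/africa/africa-cup-of-nations-2017/results/",
]

# urljoin replaces the base's path with each absolute path, keeping scheme and host.
absolute_urls = [urljoin(BASE_URL, href) for href in relative_hrefs]

for url in absolute_urls:
    print(url)
```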

I would like the URLs to be returned as full, paginated URLs instead, for example:

```
https://www.oddsportal.com/soccer/africa/africa-cup-of-nations/results/
https://www.oddsportal.com/soccer/africa/africa-cup-of-nations/results/#/page/2/
https://www.oddsportal.com/soccer/africa/africa-cup-of-nations/results/#/page/3/
```

and the same for every parent results URL listed above.
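Given an absolute results URL and the number of pages it has, the desired list can be built by appending a `#/page/N/` fragment for pages 2 and up. The sketch below assumes the page count per season is already known; `paginated_urls` and `season_page_counts` are hypothetical names, and the counts are made up for illustration. Note that everything after `#` is a URL fragment, which is not sent to the server, so `requests.get` on these URLs would return the same HTML as page 1; the later pages may be loaded by JavaScript in the browser.

```python
def paginated_urls(results_url, num_pages):
    """Return page 1 (the plain URL) plus '#/page/N/' URLs for pages 2..num_pages."""
    urls = [results_url]
    for page in range(2, num_pages + 1):
        # Assumes results_url ends with '/', as all of the hrefs above do.
        urls.append(f"{results_url}#/page/{page}/")
    return urls

# Hypothetical page counts; the real number of pages per season is not known here.
season_page_counts = {
    "https://www.oddsportal.com/soccer/africa/africa-cup-of-nations/results/": 3,
    "https://www.oddsportal.com/soccer/africa/africa-cup-of-nations-2019/results/": 2,
}

all_urls = []
for results_url, num_pages in season_page_counts.items():
    all_urls.extend(paginated_urls(results_url, num_pages))

for url in all_urls:
    print(url)
```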

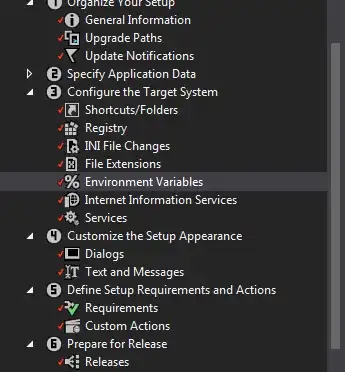

Using Inspect Element, I can see that the page links that should be appended come from the `div` with `id="pagination"`, as below:
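If the `div` with `id="pagination"` is present in the HTML, the number of pages could be read from the `#/page/N/` hrefs of its links and then used to build the URLs. The markup in `SAMPLE_HTML` below is a hypothetical stand-in, not the site's confirmed structure, and `last_page` is an illustrative helper name. Because the pagination may be rendered by JavaScript, it is worth checking whether this `div` actually appears in `source.text` before relying on this approach.

```python
import re

from bs4 import BeautifulSoup

# Hypothetical markup for div#pagination; the real structure is assumed, not confirmed.
SAMPLE_HTML = """
<div id="pagination">
  <a href="#/page/1/"><span>1</span></a>
  <a href="#/page/2/"><span>2</span></a>
  <a href="#/page/3/"><span>3</span></a>
  <a href="#/page/3/"><span>&raquo;|</span></a>
</div>
"""

# Captures N from hrefs of the form '#/page/N/'.
PAGE_RE = re.compile(r"#/page/(\d+)/")


def last_page(html):
    """Return the highest page number linked from div#pagination, or 1 if none is found."""
    soup = BeautifulSoup(html, "html.parser")
    pagination = soup.find("div", id="pagination")
    if pagination is None:
        # No pagination block: treat the results as a single page.
        return 1
    pages = []
    for a in pagination.find_all("a", href=True):
        match = PAGE_RE.search(a["href"])
        if match:
            pages.append(int(match.group(1)))
    return max(pages, default=1)


results_url = "https://www.oddsportal.com/soccer/africa/africa-cup-of-nations/results/"
num_pages = last_page(SAMPLE_HTML)

# Page 1 is the plain URL; later pages get a '#/page/N/' fragment.
urls = [results_url] + [f"{results_url}#/page/{p}/" for p in range(2, num_pages + 1)]

for url in urls:
    print(url)
```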