One possible solution involves applying the approach in this post. It involves convolving the input image with a special kernel used to identify end-points. These are the steps:

- Converting the image to grayscale

- Getting a binary image by applying Otsu's thresholding to the grayscale image

- Applying a little bit of morphology, to ensure we have continuous and closed curves

- Compute the skeleton of the image

- Convolve the skeleton with the end-points kernel

- Draw the end-points on the original image

Let's see the code:

# Imports:

import cv2

import numpy as np

# Reading an image in default mode:

inputImage = cv2.imread(path + fileName)

# Prepare a deep copy of the input for results:

inputImageCopy = inputImage.copy()

# Grayscale conversion:

grayscaleImage = cv2.cvtColor(inputImage, cv2.COLOR_BGR2GRAY)

# Threshold via Otsu:

_, binaryImage = cv2.threshold(grayscaleImage, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)

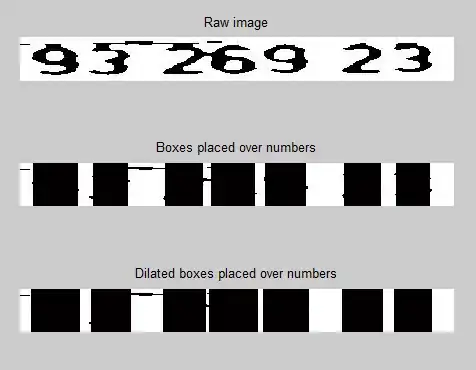

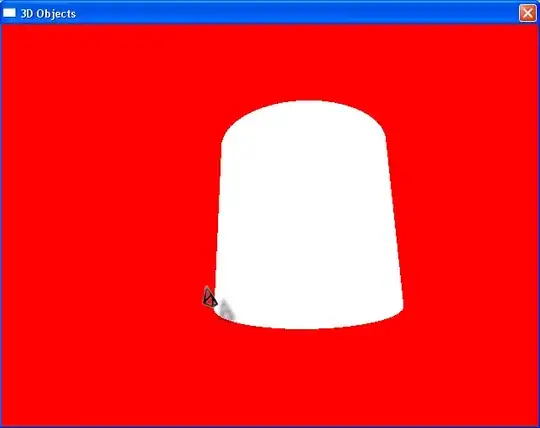

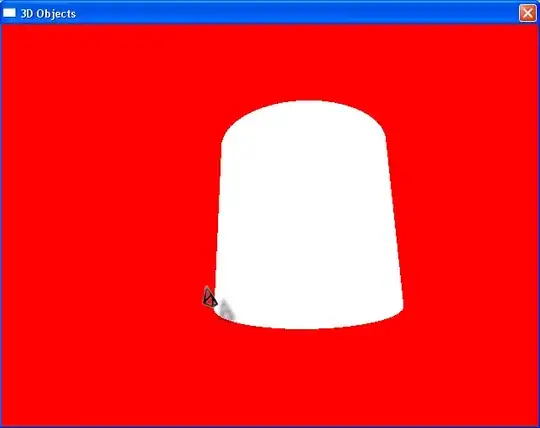

The first bit is very straightforward. Just get a binary image using Otsu's Thresholding. This is the result:

The thresholding could miss some pixels inside the curves, leading to "gaps". We don't want that, because we are trying to identify the end-points, which are essentially gaps on the curves. Let's fill possible gaps using a little bit of morphology - a closing will help fill those smaller gaps:

# Set morph operation iterations:

opIterations = 2

# Get the structuring element:

kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (5, 5))

# Perform Closing:

binaryImage = cv2.morphologyEx(binaryImage, cv2.MORPH_CLOSE, kernel, None, None, opIterations, cv2.BORDER_REFLECT101)

This is now the result:

Ok, what follows is getting the skeleton of the binary image. The skeleton is a version of the binary image where lines have been normalized to have a width of 1 pixel. This is useful because we can then convolve the image with a 3 x 3 kernel and look for specific pixel patterns - those that identify a end-point. Let's compute the skeleton using OpenCV's extended image processing module:

# Compute the skeleton:

skeleton = cv2.ximgproc.thinning(binaryImage, None, 1)

Nothing fancy, the thing is done in just one line of code. The result is this:

It is very subtle with this image, but the curves have now 1 px of width, so we can apply the convolution. The main idea of this approach is that the convolution yields a very specific value where patterns of black and white pixels are found in the input image. The value we are looking for is 110, but we need to perform some operations before the actual convolution. Refer to the original post for details. These are the operations:

# Threshold the image so that white pixels get a value of 0 and

# black pixels a value of 10:

_, binaryImage = cv2.threshold(skeleton, 128, 10, cv2.THRESH_BINARY)

# Set the end-points kernel:

h = np.array([[1, 1, 1],

[1, 10, 1],

[1, 1, 1]])

# Convolve the image with the kernel:

imgFiltered = cv2.filter2D(binaryImage, -1, h)

# Extract only the end-points pixels, those with

# an intensity value of 110:

endPointsMask = np.where(imgFiltered == 110, 255, 0)

# The above operation converted the image to 32-bit float,

# convert back to 8-bit uint

endPointsMask = endPointsMask.astype(np.uint8)

If we imshow the endPointsMask, we would get something like this:

In the above image, you can see the location of the identified end-points. Let's get the coordinates of these white pixels:

# Get the coordinates of the end-points:

(Y, X) = np.where(endPointsMask == 255)

Finally, let's draw circles on these locations:

# Draw the end-points:

for i in range(len(X)):

# Get coordinates:

x = X[i]

y = Y[i]

# Set circle color:

color = (0, 0, 255)

# Draw Circle

cv2.circle(inputImageCopy, (x, y), 3, color, -1)

cv2.imshow("Points", inputImageCopy)

cv2.waitKey(0)

This is the final result:

EDIT: Identifying which blob produces each set of points

Since you need to also know which blob/contour/curve produced each set of end-points, you can re-work the code below with some other functions to achieve just that. Here, I'll mainly rely on a previous function I wrote that is used to detect the biggest blob in an image. One of the two curves will always be bigger (i.e., have a larger area) than the other. If you extract this curve, process it, and then subtract it from the original image iteratively, you could process curve by curve, and each time you could know which curve (the current biggest one) produced the current end-points. Let's modify the code to implement these ideas:

# Imports:

import cv2

import numpy as np

# image path

path = "D://opencvImages//"

fileName = "w97nr.jpg"

# Reading an image in default mode:

inputImage = cv2.imread(path + fileName)

# Deep copy for results:

inputImageCopy = inputImage.copy()

# Grayscale conversion:

grayscaleImage = cv2.cvtColor(inputImage, cv2.COLOR_BGR2GRAY)

# Threshold via Otsu:

_, binaryImage = cv2.threshold(grayscaleImage, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)

# Set morph operation iterations:

opIterations = 2

# Get the structuring element:

kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (5, 5))

# Perform Closing:

binaryImage = cv2.morphologyEx(binaryImage, cv2.MORPH_CLOSE, kernel, None, None, opIterations, cv2.BORDER_REFLECT101)

# Compute the skeleton:

skeleton = cv2.ximgproc.thinning(binaryImage, None, 1)

Up until the skeleton computation, everything is the same. Now, we will extract the current biggest blob and process it to obtain its end-points, we will continue extracting the current biggest blob until there are no more curves to extract. So, we just modify the prior code to manage the iterative nature of this idea. Additionaly, let's store the end-points on a list. Each row of this list will denote a new curve:

# Processing flag:

processBlobs = True

# Shallow copy for processing loop:

blobsImage = skeleton

# Store points per blob here:

blobPoints = []

# Count the number of processed blobs:

blobCounter = 0

# Start processing blobs:

while processBlobs:

# Find biggest blob on image:

biggestBlob = findBiggestBlob(blobsImage)

# Prepare image for next iteration, remove

# currrently processed blob:

blobsImage = cv2.bitwise_xor(blobsImage, biggestBlob)

# Count number of white pixels:

whitePixelsCount = cv2.countNonZero(blobsImage)

# If the image is completely black (no white pixels)

# there are no more curves to process:

if whitePixelsCount == 0:

processBlobs = False

# Threshold the image so that white pixels get a value of 0 and

# black pixels a value of 10:

_, binaryImage = cv2.threshold(biggestBlob, 128, 10, cv2.THRESH_BINARY)

# Set the end-points kernel:

h = np.array([[1, 1, 1],

[1, 10, 1],

[1, 1, 1]])

# Convolve the image with the kernel:

imgFiltered = cv2.filter2D(binaryImage, -1, h)

# Extract only the end-points pixels, those with

# an intensity value of 110:

endPointsMask = np.where(imgFiltered == 110, 255, 0)

# The above operation converted the image to 32-bit float,

# convert back to 8-bit uint

endPointsMask = endPointsMask.astype(np.uint8)

# Get the coordinates of the end-points:

(Y, X) = np.where(endPointsMask == 255)

# Prepare random color:

color = (np.random.randint(low=0, high=256), np.random.randint(low=0, high=256), np.random.randint(low=0, high=256))

# Prepare id string:

string = "Blob: "+str(blobCounter)

font = cv2.FONT_HERSHEY_COMPLEX

tx = 10

ty = 10 + 10 * blobCounter

cv2.putText(inputImageCopy, string, (tx, ty), font, 0.3, color, 1)

# Store these points in list:

blobPoints.append((X,Y, blobCounter))

blobCounter = blobCounter + 1

# Draw the end-points:

for i in range(len(X)):

x = X[i]

y = Y[i]

cv2.circle(inputImageCopy, (x, y), 3, color, -1)

cv2.imshow("Points", inputImageCopy)

cv2.waitKey(0)

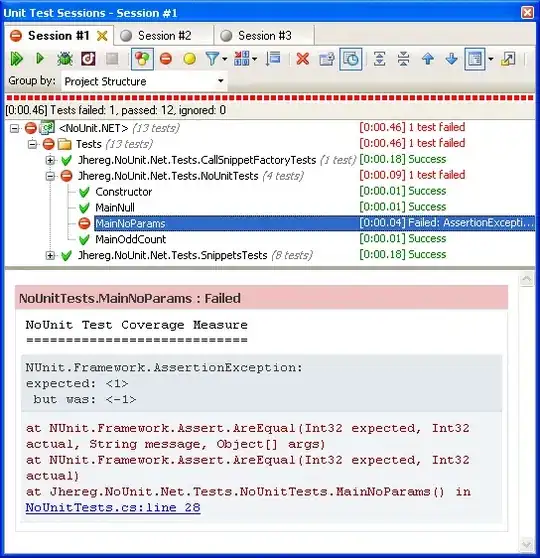

This loop extracts the biggest blob and processes it just like in the first part of the post - we convolve the image with the end-point kernel and locate the matching points. For the original input, this would be the result:

As you see, each set of points is drawn using one unique color (randomly generated). There's also the current blob "ID" (just an ascending count) drawn in text with the same color as each set of points, so you know which blob produced each set of end-points. The info is stored in the blobPoints list, we can print its values, like this:

# How many blobs where found:

blobCount = len(blobPoints)

print("Found: "+str(blobCount)+" blobs.")

# Let's check out each blob and their end-points:

for b in range(blobCount):

# Fetch data:

p1 = blobPoints[b][0]

p2 = blobPoints[b][1]

id = blobPoints[b][2]

# Print data for each blob:

print("Blob: "+str(b)+" p1: "+str(p1)+" p2: "+str(p2)+" id: "+str(id))

Which prints:

Found: 2 blobs.

Blob: 0 p1: [39 66] p2: [ 42 104] id: 0

Blob: 1 p1: [129 119] p2: [25 49] id: 1

This is the implementation of the findBiggestBlob function, which just computes the biggest blob on the image using its area. It returns an image of the biggest blob isolated, this comes from a C++ implementation I wrote of the same idea:

def findBiggestBlob(inputImage):

# Store a copy of the input image:

biggestBlob = inputImage.copy()

# Set initial values for the

# largest contour:

largestArea = 0

largestContourIndex = 0

# Find the contours on the binary image:

contours, hierarchy = cv2.findContours(inputImage, cv2.RETR_CCOMP, cv2.CHAIN_APPROX_SIMPLE)

# Get the largest contour in the contours list:

for i, cc in enumerate(contours):

# Find the area of the contour:

area = cv2.contourArea(cc)

# Store the index of the largest contour:

if area > largestArea:

largestArea = area

largestContourIndex = i

# Once we get the biggest blob, paint it black:

tempMat = inputImage.copy()

cv2.drawContours(tempMat, contours, largestContourIndex, (0, 0, 0), -1, 8, hierarchy)

# Erase smaller blobs:

biggestBlob = biggestBlob - tempMat

return biggestBlob