I'm trying to understand the relationship between `container_memory_working_set_bytes`, `process_resident_memory_bytes`, and `total_rss` (`container_memory_rss`) + `file_mapped`, so that I can better equip our system to alert on a possible OOM.

What I'm seeing contradicts my understanding, which puzzles me, given that the container/pod runs a single process: a compiled program written in Go.

Why is `container_memory_working_set_bytes` so much larger (nearly 10 times) than `process_resident_memory_bytes`?

The relationship between `container_memory_working_set_bytes` and `container_memory_rss` + `file_mapped` is also not what I expected after reading the description here:

> The total amount of anonymous and swap cache memory (it includes transparent hugepages), and it equals to the value of total_rss from memory.status file. This should not be confused with the true resident set size or the amount of physical memory used by the cgroup. rss + file_mapped will give you the resident set size of cgroup. It does not include memory that is swapped out. It does include memory from shared libraries as long as the pages from those libraries are actually in memory. It does include all stack and heap memory.
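To make the quoted definition concrete, here is a minimal Go sketch that reads cgroup v1 `memory.stat` contents and computes `total_rss + total_mapped_file` (the kernel key is `mapped_file`, not `file_mapped`). For comparison it also computes a working set as memory usage minus `total_inactive_file`, floored at zero; that this matches how cAdvisor derives `container_memory_working_set_bytes` is an assumption on my part. The function names (`parseMemoryStat`, `residentSetSize`, `workingSet`) and all sample numbers are hypothetical, not taken from the graph.

```go
package main

import (
	"bufio"
	"fmt"
	"strconv"
	"strings"
)

// parseMemoryStat parses the "key value" lines of a cgroup v1 memory.stat file.
func parseMemoryStat(contents string) (map[string]uint64, error) {
	stats := make(map[string]uint64)
	scanner := bufio.NewScanner(strings.NewReader(contents))
	for scanner.Scan() {
		fields := strings.Fields(scanner.Text())
		if len(fields) != 2 {
			continue // skip blank or malformed lines
		}
		v, err := strconv.ParseUint(fields[1], 10, 64)
		if err != nil {
			return nil, fmt.Errorf("parsing %q: %w", fields[0], err)
		}
		stats[fields[0]] = v
	}
	return stats, scanner.Err()
}

// residentSetSize returns total_rss + total_mapped_file, per the quoted description.
func residentSetSize(stats map[string]uint64) uint64 {
	return stats["total_rss"] + stats["total_mapped_file"]
}

// workingSet returns usage minus inactive file cache, floored at zero
// (assumed to mirror cAdvisor's working-set calculation on cgroup v1).
func workingSet(usage uint64, stats map[string]uint64) uint64 {
	inactive := stats["total_inactive_file"]
	if inactive >= usage {
		return 0
	}
	return usage - inactive
}

func main() {
	// Hypothetical memory.stat excerpt (bytes), not real data.
	sample := `total_cache 800
total_rss 100
total_mapped_file 20
total_inactive_file 300
total_active_file 480`
	stats, err := parseMemoryStat(sample)
	if err != nil {
		panic(err)
	}
	usage := uint64(900) // hypothetical memory.usage_in_bytes
	fmt.Println("rss + mapped_file:", residentSetSize(stats))
	fmt.Println("working set:", workingSet(usage, stats))
}
```

With these made-up numbers the working set (600) is five times `rss + mapped_file` (120), because active page cache that is not mapped into the process still counts toward the working set but not toward RSS.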

So if the cgroup's total resident set size is `rss` + `file_mapped`, how can that value be less than `container_memory_working_set_bytes` for a container running in that cgroup?

This makes me feel that something about these stats, or my understanding of them, is not correct.

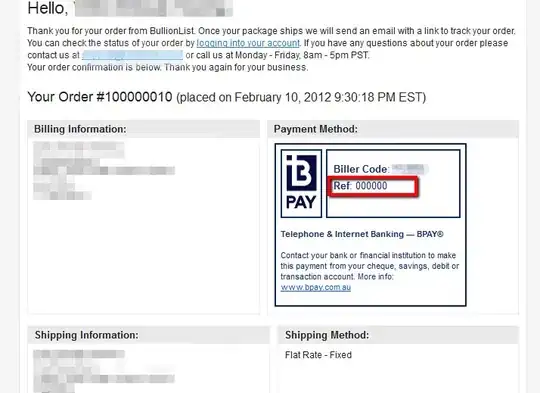

The following PromQL queries were used to build the graph above:

- `process_resident_memory_bytes{container="sftp-downloader"}`
- `container_memory_working_set_bytes{container="sftp-downloader"}`
- `go_memstats_heap_alloc_bytes{container="sftp-downloader"}`
- `container_memory_mapped_file{container="sftp-downloader"} + container_memory_rss{container="sftp-downloader"}`