I'm trying to calculate a weighted average for multiple columns in a dataframe. This is a sample of my data

| Group | Year | Month | Weight(kg) | Nitrogen | Calcium |

|---|---|---|---|---|---|

| A | 2020 | 01 | 10000 | 10 | 70 |

| A | 2020 | 01 | 15000 | 4 | 78 |

| A | 2021 | 05 | 12000 | 5 | 66 |

| A | 2021 | 05 | 10000 | 8 | 54 |

| B | 2021 | 08 | 14000 | 10 | 90 |

| C | 2021 | 08 | 50000 | 20 | 92 |

| C | 2021 | 08 | 40000 | 10 | 95 |

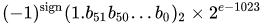

My desired result would look something like this:

What I've tried: I can get the correct weighted average values for a single column using this function: (similar to: link)

def wavg(df, value, weight):

d = df[value]

w = df[weight]

try:

return (d * w).sum() / w.sum()

except ZeroDivisionError:

return d.mean()

I can apply this function to a single column of my df:

df2 = df.groupby(["Group", "year", "month"]).apply(wavg, "Calcium", "Weight(kg").to_frame()

(Don't mind the different values, they are correct for the data in my notebook)

The obvious problem is that this function only works for a single column whilst I have a douzens of columns. I therefore tried a for loop:

column_list=[]

for column in df.columns:

column_list.append(df.groupby(["Group", "year", "month"]).apply(wavg, column, "Weight(kg").to_frame())

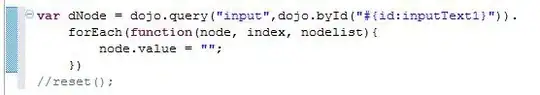

It calculates the values correctly, but the columns are placed on top of each other instead of next to eachother. They also miss a usefull column name:

How could I adapt my code to return the desired df?