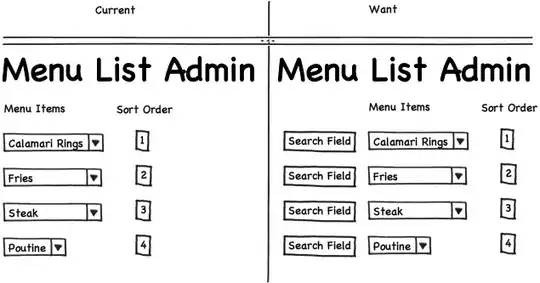

The use case of my project is to display each author's commit with the size of each commit per day. This is how I need to represent my data

For this what I did is

timed_commits = commit_data.set_index('Date')

grouped = timed_commits.groupby(by=["Author"])

resampled = grouped.resample("D").agg(

{"SHA": "size", "Insertion": "sum", "Deletion": "sum"}

) # get the total count of commits in a day with total insertion and deletion

this gave me the output as the below one

Here Author and Date are the index while SHA, Insertion and Deletion are the columns. The reason why Author and Date are index is I want to know the per day commits of each author while I want the size of each commit(through insertion) as well

For such object I could not able to format in this way or any other way(better to have field name for author value and date value) which would support to display in the table as in the image I attached for it

{

'author1': {

'2017-10-18': {'SHA': 1, 'Insertion': 1.0, 'Deletion': 3.0},

'2017-10-19': {'SHA': 2, 'Insertion': 1.0, 'Deletion': 3.0},

'2017-10-20': {'SHA': 6, 'Insertion': 1.0, 'Deletion': 3.0},

'2017-10-21': {'SHA': 9, 'Insertion': 1.0, 'Deletion': 3.0},

},

'author2': {

'2017-10-18': {'SHA': 3, 'Insertion': 8.0, 'Deletion': 3.0},

'2017-10-19': {'SHA': 19, 'Insertion': 10.0, 'Deletion': 3.0},

'2017-10-20': {'SHA': 23, 'Insertion': 1.0, 'Deletion': 3.0},

'2017-10-21': {'SHA': 44, 'Insertion': 1.0, 'Deletion': 3.0},

}

}

I played with to_dict but did not make it workable.

this is the dataframe(here commit hash i.e sha is repeated because of the number of files changed in that particular commit). This is taken from git logs.

SHA Timestamp Date Author Insertion Deletion Churn File path

1 cae635054 Sat Jun 26 14:51:23 2021 -0400 2021-06-26 18:51:23+00:00 Andrew Clark 31.0 0.0 31.0 packages/react-reconciler/src/__tests__/ReactI...

2 cae635054 Sat Jun 26 14:51:23 2021 -0400 2021-06-26 18:51:23+00:00 Andrew Clark 1.0 1.0 0.0 packages/react-test-renderer/src/ReactTestRend...

3 cae635054 Sat Jun 26 14:51:23 2021 -0400 2021-06-26 18:51:23+00:00 Andrew Clark 24.0 14.0 10.0 packages/react/src/ReactAct.js

5 e2453e200 Fri Jun 25 15:39:46 2021 -0400 2021-06-25 19:39:46+00:00 Andrew Clark 50.0 0.0 50.0 packages/react-reconciler/src/__tests__/ReactI...

7 73ffce1b6 Thu Jun 24 22:42:44 2021 -0400 2021-06-25 02:42:44+00:00 Brian Vaughn 4.0 5.0 -1.0 packages/react-devtools-shared/src/__tests__/F...

8 73ffce1b6 Thu Jun 24 22:42:44 2021 -0400 2021-06-25 02:42:44+00:00 Brian Vaughn 4.0 4.0 0.0 packages/react-devtools-shared/src/__tests__/c...

9 73ffce1b6 Thu Jun 24 22:42:44 2021 -0400 2021-06-25 02:42:44+00:00 Brian Vaughn 12.0 12.0 0.0 packages/react-devtools-shared/src/__tests__/c...

10 73ffce1b6 Thu Jun 24 22:42:44 2021 -0400 2021-06-25 02:42:44+00:00 Brian Vaughn 7.0 6.0 1.0 packages/react-devtools-shared/src/__tests__/e...

11 73ffce1b6 Thu Jun 24 22:42:44 2021 -0400 2021-06-25 02:42:44+00:00 Brian Vaughn 47.0 42.0 5.0 packages/react-devtools-shared/src/__tests__/i...

12 73ffce1b6 Thu Jun 24 22:42:44 2021 -0400 2021-06-25 02:42:44+00:00 Brian Vaughn 7.0 6.0 1.0 packages/react-devtools-shared/src/__tests__/o...