I have a 2D NumPy array containing binary values (0 and 1) plus one additional value (-999) representing "no data".

Here's a dummy example:

```python
import numpy as np

arr = np.random.randint(2, size=(100,))
# this no-data value has to be large compared to the data range:
arr[np.random.randint(1, 100, 20)] = -999
arr = arr.astype(np.float32)
# reshape returns a new array view; it does not modify arr in place
arr = arr.reshape((10, 10))
```

```
>>> arr
array([[   1,    1,    0,    1,    0,    0, -999,    1,    0,    0],
       [   0,    1,    0,    0,    1,    0,    1,    1,    1,    1],
       [   1,    1,    1,    1, -999,    0,    1, -999,    1, -999],
       [   0,    1, -999,    1,    0,    1,    1,    1,    0,    1],
       [-999, -999,    1,    1,    1,    0,    0,    0,    0,    1],
       [-999,    1,    1, -999,    0,    1,    1, -999, -999,    1],
       [   1,    1,    1,    0,    0,    1,    1, -999, -999,    0],
       [-999,    0,    1,    0, -999, -999,    1,    1,    1,    1],
       [   1,    1,    0,    1,    1,    0,    0,    0,    1,    0],
       [   1, -999, -999,    0,    1,    0,    1,    0,    1,    1]],
      dtype=float32)
```

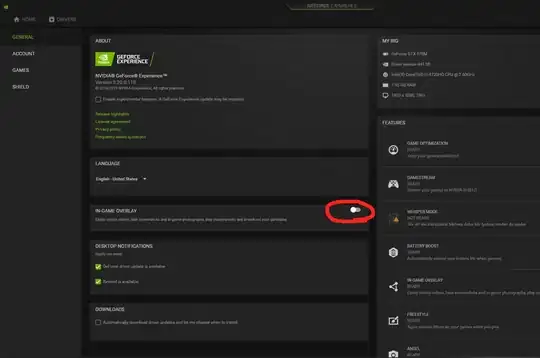

When I plot this array with `plt.imshow()`, the 0 and 1 pixels get almost the same color, because they are very close together compared with the whole data range (-999 to 1). This makes them totally indistinguishable:

```python
import matplotlib.pyplot as plt

plt.imshow(arr)
```

In yellow are the pixels with values 0 and 1; in dark purple, the -999 pixels.
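By default `imshow` scales the data linearly between their minimum and maximum (here -999 and 1) before looking up a color. The plain-Python sketch below writes that linear min-max scaling out by hand (it does not call matplotlib) to show why 0 and 1 collide:

```python
# Linear min-max scaling, mimicking imshow's default normalization
# (vmin/vmax taken from the data's minimum and maximum)
vmin, vmax = -999.0, 1.0


def scale(value):
    """Map a data value onto the [0, 1] colormap axis."""
    return (value - vmin) / (vmax - vmin)


for v in (-999.0, 0.0, 1.0):
    print(v, scale(v))
# -999.0 0.0
# 0.0 0.999
# 1.0 1.0
```

0 and 1 end up only 0.001 apart on the colormap axis. With a 256-entry colormap such as the default viridis, both should fall into the last entry, so the two classes can even end up with exactly the same color.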

I'd like to map these pixel values to the following:

```python
color_list = {-999: np.array([189, 184, 181]),  # gray tone
              0: np.array([0, 255, 0]),         # green
              1: np.array([255, 180, 180])}     # light red
```

This seems to work:

```python
data_3d = np.empty(shape=(arr.shape[0], arr.shape[1], 3), dtype=np.uint8)
for i in range(arr.shape[0]):
    for j in range(arr.shape[1]):
        # the float32 values -999.0/0.0/1.0 compare equal to the int keys
        data_3d[i, j] = color_list[arr[i, j]]

plt.imshow(data_3d)
```
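The double loop can be replaced by a single vectorized lookup. A minimal sketch, assuming the array only contains -999, 0 and 1: build a small palette array from the same colors, turn every pixel into a row index (`palette` and `to_rgb` are hypothetical names), and gather the RGB triples with fancy indexing:

```python
import numpy as np

# Hypothetical palette built from color_list:
# row 0 = no data, row 1 = value 0, row 2 = value 1
palette = np.array([[189, 184, 181],   # -999: gray tone
                    [0, 255, 0],       # 0: green
                    [255, 180, 180]],  # 1: light red
                   dtype=np.uint8)


def to_rgb(arr):
    """Vectorized replacement for the double loop (hypothetical helper).

    Any value other than 0 or 1 is treated as no data.
    """
    # One uint8 palette row per pixel; no-data pixels stay at row 0
    codes = np.zeros(arr.shape, dtype=np.uint8)
    codes[arr == 0] = 1
    codes[arr == 1] = 2
    # Fancy indexing gathers one RGB triple per pixel -> shape (rows, cols, 3)
    return palette[codes]


demo = np.array([[1, 0, -999],
                 [-999, 1, 0]], dtype=np.float32)
rgb = to_rgb(demo)
print(rgb.shape, rgb.dtype)  # (2, 3, 3) uint8
```

`plt.imshow(to_rgb(arr))` then shows the same colors as `data_3d`. Note that this removes the Python loop but still allocates the RGB output (3 bytes per pixel) plus a 1-byte code per pixel, so it addresses speed rather than memory.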

The masked version also works:

```python
import matplotlib as mpl

# Normalizing the color_dict is mandatory to avoid a ValueError:
# "RGBA values should be within 0-1 range"
color_dict = {-999: np.array([189, 184, 181]) / 255,  # gray tone
              0: np.array([0, 255, 0]) / 255,         # green
              1: np.array([255, 180, 180]) / 255}     # light red

mask = np.ma.masked_where(arr == -999, arr)

# Here the indices in square brackets are the actual values of the pixels,
# which serve as keys in color_dict:
# see https://stackoverflow.com/questions/26610389/defining-a-binary-matplotlib-colormap
customcm = mpl.colors.ListedColormap([color_dict[0], color_dict[1]])
customcm.set_bad(color_dict[-999])

plt.imshow(mask, cmap=customcm)
```
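If the mask route is kept, the extra memory can at least be limited. `np.ma.masked_where` copies the data by default (`copy=True`). A sketch assuming the no-data value is exactly -999: `np.ma.masked_equal(..., copy=False)` should wrap the existing buffer, so the only new allocation is the boolean mask (1 byte per pixel, a quarter of the float32 data):

```python
import numpy as np

demo = np.array([[1, 0, -999],
                 [-999, 1, 0]], dtype=np.float32)

# copy=False: wrap demo's existing buffer instead of duplicating it
masked = np.ma.masked_equal(demo, -999, copy=False)

print(np.shares_memory(masked.data, demo))    # True: the data are not copied
print(masked.mask.dtype, masked.mask.nbytes)  # bool 6: one byte per pixel
```

The result can be passed to `plt.imshow(masked, cmap=customcm)` in the same way as `mask`.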

But is there a way to avoid the double for loop and/or the creation of a new array (including a mask) altogether?

Ideally this would happen on the fly, using only matplotlib, because some of my arrays are quite large (up to ~2 GB) and I would like to keep memory consumption as low as possible by not building another array of the same size with up to 3 channels.
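A sketch of a no-mask alternative, assuming the data only ever contain -999, 0 and 1: a three-color `ListedColormap` combined with a `BoundaryNorm` puts each value into its own color bin, so `imshow` can draw `arr` directly. The bin edges are an assumption chosen to separate the three values, and `plot_categories` is a hypothetical helper. matplotlib may still create internal temporary arrays while rendering, so this avoids the user-side mask and RGB arrays but does not by itself guarantee a lower peak memory:

```python
import numpy as np
from matplotlib.colors import BoundaryNorm, ListedColormap

# One color per category, ordered from the lowest to the highest data value
colors = np.array([[189, 184, 181],        # -999: no data (gray tone)
                   [0, 255, 0],            # 0: green
                   [255, 180, 180]]) / 255  # 1: light red
cmap = ListedColormap(colors)

# Assumed bin edges: [-1000, -0.5) -> color 0, [-0.5, 0.5) -> color 1,
# [0.5, 1.5) -> color 2
norm = BoundaryNorm([-1000, -0.5, 0.5, 1.5], cmap.N)


def plot_categories(ax, arr):
    """Hypothetical helper: draw arr as-is, without a user-side mask or RGB copy."""
    return ax.imshow(arr, cmap=cmap, norm=norm, interpolation="nearest")


# Check the value -> color lookup without drawing anything
rgba = cmap(norm(np.array([-999.0, 0.0, 1.0])))
print(np.allclose(rgba[:, :3], colors))  # True
```

Usage would be along the lines of `fig, ax = plt.subplots()` followed by `plot_categories(ax, arr)`.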

Versions:

```
>>> numpy.__version__
'1.19.5'
>>> mpl.__version__
'3.3.4'
```