I am having a hard time understanding how to combine a rule-based decision-making approach with an agent in an agent-based model I am trying to develop.

The agent interface is very simple:

```csharp
public interface IAgent
{
    string ID { get; }

    Action Percept(IPercept percept);
}
```

For the sake of the example, let's assume that the agents represent vehicles that traverse the roads of a large warehouse to load and unload cargo. Each vehicle's route (the sequence of roads from its start point to its destination) is assigned by another agent, the Supervisor. The goal of a vehicle agent is to traverse its assigned route, unload its cargo, load new cargo, receive another route from the Supervisor and repeat the process.

The vehicles must also avoid potential collisions, for example at intersections, by giving way according to some rules (for example, the vehicle carrying the heaviest cargo has priority).
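The priority rule from the previous paragraph can be isolated as a pure function that a collision rule calls. This is a hypothetical sketch: `VehicleInfo`, `CargoWeightKg` and planar `X`/`Y` coordinates stand in for the post's `Cargo` and `Location` types, and breaking weight ties by comparing IDs is an assumption, since the post does not say how ties are resolved.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for what one vehicle knows about another:
// an ID, the cargo weight and a planar position in meters.
public sealed record VehicleInfo(string Id, double CargoWeightKg, double X, double Y);

public static class RightOfWay
{
    // Radius taken from the example rule ("less than 50 meters").
    public const double ProximityMeters = 50.0;

    public static double Distance(VehicleInfo a, VehicleInfo b)
    {
        double dx = a.X - b.X, dy = a.Y - b.Y;
        return Math.Sqrt(dx * dx + dy * dy);
    }

    // True if `self` must hold its position because a vehicle within
    // ProximityMeters carries heavier cargo. Equal weights are broken by
    // ordinal ID comparison (an assumption), so one of the two proceeds.
    public static bool MustYield(VehicleInfo self, IEnumerable<VehicleInfo> others) =>
        others
            .Where(o => o.Id != self.Id && Distance(self, o) < ProximityMeters)
            .Any(o => o.CargoWeightKg > self.CargoWeightKg
                   || (o.CargoWeightKg == self.CargoWeightKg
                       && string.CompareOrdinal(o.Id, self.Id) < 0));
}
```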

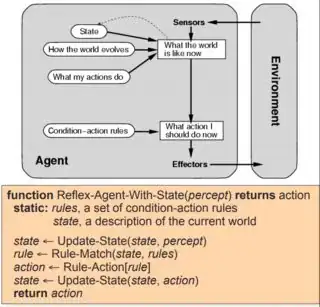

As far as I understand, this is the internal structure of the agents I want to build, so the Vehicle agent could look something like this:

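```csharp
public class Vehicle : IAgent
{
    // Required by IAgent.
    public string ID { get; }

    public VehicleStateUpdater VehicleStateUpdater { get; set; }

    public RuleSet RuleSet { get; set; }

    public VehicleState State { get; set; }

    public Action Percept(IPercept percept)
    {
        // 1. Update the internal state with the new percept.
        VehicleStateUpdater.UpdateState(State, percept);

        // 2. Select the rule that matches the updated state.
        Rule validRule = RuleSet.Match(State);

        // 3. Record the selected rule in the state.
        VehicleStateUpdater.UpdateState(State, validRule);

        // 4. Return the action associated with the rule.
        return validRule.GetAction();
    }
}
```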

For the Vehicle agent's internal state, I was considering something like this:

```csharp
public class VehicleState
{
    public Route Route { get; set; }

    public Cargo Cargo { get; set; }

    public Location CurrentLocation { get; set; }
}
```

For this example, three rules must be implemented for the Vehicle agent:

- If another vehicle is near the agent (e.g. less than 50 meters away), the one carrying the heaviest cargo has priority and the other vehicles must hold their position.

- When an agent reaches its destination, it unloads its cargo, loads new cargo and waits for the Supervisor to assign a new route.

- At any moment, the Supervisor may, for whatever reason, send a command (Hold Position or Continue), which the recipient vehicle must obey.
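The three rules can be written as an ordered list of condition-action rules in which the first rule whose condition holds wins. With this kind of conflict resolution the conditions do not have to be mutually exclusive; the order decides. This is a sketch under assumptions: `Situation` is a hypothetical flattened view of `VehicleState`, the `VehicleAction` values are invented, and the particular ordering (a Supervisor Hold first, a Supervisor Continue overriding the yield rule) is one plausible reading of the rules, not the only one.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public enum RadioCommand { None, Hold, Continue }

// Hypothetical actions; the post's Action type is not shown.
public enum VehicleAction { Move, HoldPosition, UnloadLoadAndWaitForRoute }

// Hypothetical flattened view of VehicleState that the rules read.
public sealed record Situation(
    RadioCommand ActiveCommand,
    bool AtDestination,
    bool MustYieldToNearbyVehicle);

public sealed record ConditionActionRule(
    string Name,
    Func<Situation, bool> Condition,
    VehicleAction Action);

public static class VehicleRules
{
    // List order is the priority order (an assumed ordering): the first
    // rule whose condition holds is selected, so conditions may overlap.
    public static readonly IReadOnlyList<ConditionActionRule> Ordered = new[]
    {
        new ConditionActionRule("SupervisorHold",
            s => s.ActiveCommand == RadioCommand.Hold, VehicleAction.HoldPosition),
        new ConditionActionRule("ReachedDestination",
            s => s.AtDestination, VehicleAction.UnloadLoadAndWaitForRoute),
        new ConditionActionRule("SupervisorContinue",
            s => s.ActiveCommand == RadioCommand.Continue, VehicleAction.Move),
        new ConditionActionRule("YieldToHeavierCargo",
            s => s.MustYieldToNearbyVehicle, VehicleAction.HoldPosition),
        // Catch-all so that exactly one rule always matches.
        new ConditionActionRule("Default", _ => true, VehicleAction.Move),
    };

    public static ConditionActionRule Match(Situation s) =>
        Ordered.First(rule => rule.Condition(s));
}
```

Adding a rule then means inserting one entry at the right position rather than making all conditions disjoint; the catch-all at the end keeps `First` from throwing when nothing else applies.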

The VehicleStateUpdater must take into account the agent's current state and the type of the received percept, and change the state accordingly. So, for the state to reflect that, e.g., a command was received from the Supervisor, one could modify it as follows:

```csharp
public class VehicleState
{
    public Route Route { get; set; }

    public Cargo Cargo { get; set; }

    public Location CurrentLocation { get; set; }

    // Additional property
    public RadioCommand ActiveCommand { get; set; }
}
```

Here, RadioCommand is an enumeration with the values None, Hold and Continue.
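`RadioCommand` and the part of `VehicleStateUpdater` that handles it could look like the following minimal sketch. The percept types `RadioCommandPercept` and `ArrivedAtDestinationPercept` and the `AtDestination` property are hypothetical; the point is that the updater switches on the runtime type of the percept and touches only the part of the state that this percept concerns.

```csharp
using System;

public enum RadioCommand { None, Hold, Continue }

// Hypothetical percept types; the post only names IPercept.
public interface IPercept { }
public sealed record RadioCommandPercept(RadioCommand Command) : IPercept;
public sealed class ArrivedAtDestinationPercept : IPercept { }

// Trimmed VehicleState: Route, Cargo and CurrentLocation are omitted here.
public sealed class VehicleState
{
    public RadioCommand ActiveCommand { get; set; } = RadioCommand.None;

    // Hypothetical property, not part of the state shown in the post.
    public bool AtDestination { get; set; }
}

public sealed class VehicleStateUpdater
{
    // Dispatch on the runtime type of the percept and update only the
    // part of the state that this percept concerns.
    public void UpdateState(VehicleState state, IPercept percept)
    {
        switch (percept)
        {
            case RadioCommandPercept radio:
                state.ActiveCommand = radio.Command;
                break;
            case ArrivedAtDestinationPercept _:
                state.AtDestination = true;
                break;
            default:
                throw new ArgumentException(
                    $"Unhandled percept type: {percept?.GetType().Name ?? "null"}",
                    nameof(percept));
        }
    }
}
```

The `default` branch throws so that a percept type nobody handles is noticed immediately instead of being silently ignored; whether that is preferable to ignoring it is a design choice.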

But now I must also record in the agent's state whether another vehicle is approaching, so I have to add more properties to VehicleState:

```csharp
public class VehicleState
{
    public Route Route { get; set; }

    public Cargo Cargo { get; set; }

    public Location CurrentLocation { get; set; }

    public RadioCommand ActiveCommand { get; set; }

    // Additional properties
    public bool IsAnotherVehicleApproaching { get; set; }

    public Location ApproachingVehicleLocation { get; set; }
}
```

This is where I have real trouble understanding how to proceed, and I have a feeling that I am not following the right approach. First, I am not sure how to make the VehicleState class more modular and extensible. Second, I am not sure how to implement the rule-based part that defines the decision-making process. Should the rules be mutually exclusive (so that every possible state matches at most one rule)? Is there a design approach that would let me add rules without going back to the VehicleState class and adding or modifying properties every time, just to make sure that every type of percept can be represented in the agent's internal state?
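To make the extensibility question concrete, one pattern sometimes used for it is a blackboard: the state becomes a store of typed facts, each percept handler writes the facts it knows about, and each rule reads only the fact types it needs, so a new rule can bring its own fact type without editing a central class. The `FactStore`, `VehicleFactRules` and fact records below are hypothetical, a sketch of the idea rather than an established API. The trade-off is that the compiler no longer guarantees that a fact is present, so every rule has to handle its absence.

```csharp
using System;
using System.Collections.Generic;

public enum RadioCommand { None, Hold, Continue }

// Hypothetical fact types. A new rule can introduce its own fact type
// without any change to FactStore or to the existing facts.
public sealed record SupervisorCommand(RadioCommand Command);
public sealed record OwnCargo(double WeightKg);
public sealed record NearbyVehicle(string Id, double DistanceMeters, double CargoWeightKg);

// Minimal typed blackboard: holds at most one fact per type
// (several nearby vehicles would need, e.g., a list-valued fact).
public sealed class FactStore
{
    private readonly Dictionary<Type, object> _facts = new Dictionary<Type, object>();

    public void Set<T>(T fact) => _facts[typeof(T)] = fact;

    public bool Remove<T>() => _facts.Remove(typeof(T));

    public bool TryGet<T>(out T fact)
    {
        if (_facts.TryGetValue(typeof(T), out var value))
        {
            fact = (T)value;
            return true;
        }
        fact = default!;
        return false;
    }
}

public static class VehicleFactRules
{
    // Reads only the fact types it needs; an absent fact simply means "no".
    public static bool MustHold(FactStore facts)
    {
        if (facts.TryGet<SupervisorCommand>(out var command)
            && command.Command == RadioCommand.Hold)
        {
            return true;
        }

        return facts.TryGet<NearbyVehicle>(out var other)
            && facts.TryGet<OwnCargo>(out var own)
            && other.DistanceMeters < 50
            && other.CargoWeightKg > own.WeightKg;
    }
}
```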

I have seen the examples in the *Artificial Intelligence: A Modern Approach* textbook and other sources, but they are too simple for me to grasp the concept when a more complex model has to be designed.

I would be grateful if someone could point me in the right direction regarding the implementation of the rule-based part.

I am writing in C#, but as far as I can tell, the language is not really relevant to the broader problem I am trying to solve.

UPDATE:

An example of a rule I tried to incorporate:

```csharp
public class HoldPositionCommandRule : IAgentRule<VehicleState>
{
    public int Priority { get; } = 0;

    public bool ConcludesTurn { get; } = false;

    public void Fire(IAgent agent, VehicleState state, IActionScheduler actionScheduler)
    {
        state.Navigator.IsMoving = false;

        // Use the action scheduler to schedule subsequent actions...
    }

    public bool IsValid(VehicleState state)
    {
        bool isValid = state.RadioCommandHandler.HasBeenOrderedToHoldPosition;

        return isValid;
    }
}
```

A sample of the agent decision maker that I also tried to implement.

```csharp
public void Execute(IAgentMessage message, IActionScheduler actionScheduler)
{
    _agentStateUpdater.Update(_state, message);

    Option<IAgentRule<TState>> validRule = _ruleMatcher.Match(_state);

    validRule.MatchSome(rule => rule.Fire(this, _state, actionScheduler));
}
```
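The post does not show `IAgentRule<TState>` or the rule matcher. Judging from the members of `HoldPositionCommandRule`, one possible matcher sorts the rules by `Priority` and selects every valid rule in order until one with `ConcludesTurn` set to `true`. The sketch assumes that a lower `Priority` value means higher priority, returns a list instead of `Option<T>`, and uses minimal stand-in `IAgent` and `IActionScheduler` interfaces plus a hypothetical `DelegateRule` helper; all of these are assumptions.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Minimal stand-ins; the real IActionScheduler is not shown in the post.
public interface IAgent { string ID { get; } }
public interface IActionScheduler { }

// Shape inferred from HoldPositionCommandRule (an assumption).
public interface IAgentRule<TState>
{
    int Priority { get; }
    bool ConcludesTurn { get; }
    bool IsValid(TState state);
    void Fire(IAgent agent, TState state, IActionScheduler actionScheduler);
}

// Hypothetical delegate-based rule, convenient for quick experiments.
public sealed class DelegateRule<TState> : IAgentRule<TState>
{
    private readonly Func<TState, bool> _isValid;
    private readonly Action<IAgent, TState, IActionScheduler> _fire;

    public DelegateRule(int priority, bool concludesTurn,
        Func<TState, bool> isValid, Action<IAgent, TState, IActionScheduler> fire)
    {
        Priority = priority;
        ConcludesTurn = concludesTurn;
        _isValid = isValid;
        _fire = fire;
    }

    public int Priority { get; }
    public bool ConcludesTurn { get; }
    public bool IsValid(TState state) => _isValid(state);
    public void Fire(IAgent agent, TState state, IActionScheduler actionScheduler) =>
        _fire(agent, state, actionScheduler);
}

public sealed class PriorityRuleMatcher<TState>
{
    private readonly List<IAgentRule<TState>> _rules;

    // Assumes a lower Priority value means "more important". OrderBy is a
    // stable sort, so equal priorities keep their registration order.
    public PriorityRuleMatcher(IEnumerable<IAgentRule<TState>> rules) =>
        _rules = rules.OrderBy(rule => rule.Priority).ToList();

    // Valid rules to fire this turn, in priority order, stopping after
    // the first rule that concludes the turn.
    public IReadOnlyList<IAgentRule<TState>> Match(TState state)
    {
        var selected = new List<IAgentRule<TState>>();
        foreach (var rule in _rules)
        {
            if (!rule.IsValid(state)) continue;
            selected.Add(rule);
            if (rule.ConcludesTurn) break;
        }
        return selected;
    }
}
```

With this shape, `Execute` would iterate over the returned list and call `rule.Fire(this, _state, actionScheduler)` for each rule, so non-concluding rules (for example, bookkeeping rules) can run alongside the one rule that decides the turn.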