I have the following code:

```python
import torch
from PIL import Image
from torch.utils.data import DataLoader

dataset = trainDataset()  # my own Dataset class (definition omitted)

train_loader = DataLoader(dataset, batch_size=1, shuffle=True)

device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

images = []
image_labels = []

for i, data in enumerate(train_loader, 0):
    inputs, labels = data
    inputs, labels = inputs.to(device), labels.to(device)
    inputs, labels = inputs.float(), labels.float()
    images.append(inputs)
    image_labels.append(labels)

image = images[7]
image = image.numpy()
image = image.reshape(416, 416, 3)
img = Image.fromarray(image, 'RGB')
img.show()
```

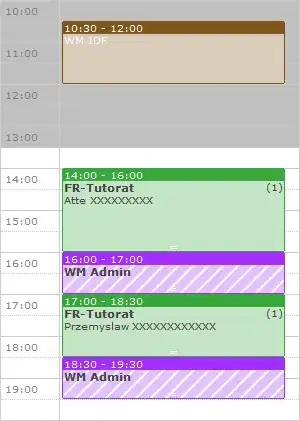

The problem is that the image does not display properly. My dataset contains images of cats and dogs, but the displayed image looks like the one above. Why is that?
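A likely cause is the tensor layout. Assuming `trainDataset` returns channel-first tensors (as torchvision transforms do), each batch from the `DataLoader` with `batch_size=1` has shape `(1, 3, 416, 416)`, i.e. (batch, channels, height, width). `reshape(416, 416, 3)` keeps the flat memory order and only changes how it is split into axes, so values from a single channel end up spread across the "RGB" slots of neighbouring pixels. Moving the channel axis (`permute` in PyTorch, `transpose` in NumPy) reorders the data instead. This minimal NumPy sketch uses a hypothetical 2×2 image to show the difference:

```python
import numpy as np

# Hypothetical 1x3x2x2 batch in (N, C, H, W) layout.
# Channel c stores c * 10 + pixel index, so the origin of each value is easy to see.
batch = np.array([[[[0, 1], [2, 3]],        # R channel
                   [[10, 11], [12, 13]],    # G channel
                   [[20, 21], [22, 23]]]])  # B channel

# Reshape only reinterprets the flat C-order buffer as (H, W, C).
reshaped = batch.reshape(2, 2, 3)

# Drop the batch axis, then move channels last (equivalent to torch's .permute(1, 2, 0)).
transposed = batch[0].transpose(1, 2, 0)

# After reshape, the first "pixel" holds three R values from different pixels: 0, 1, 2.
print(reshaped[0, 0].tolist())
# After transpose, the first pixel holds its own R, G, B values: 0, 10, 20.
print(transposed[0, 0].tolist())
```

Both arrays have shape `(2, 2, 3)`, which is why the reshape raises no error even though the pixels are scrambled.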

EDIT 1

Following @flawr's explanation, I now have the following:

```python
image = images[7]
image = image[0, ...].permute([1, 2, 0])
image = image.numpy()
img = Image.fromarray(image, 'RGB')
img.show()
```

The image now looks as shown below. I am not sure whether this is a NumPy issue or a problem with how the image is represented and displayed. Note also that the displayed image is different on every run, although it always looks roughly like the one below.
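On the representation question: mode `'RGB'` describes 8 bits per channel, while `inputs.float()` produces `float32` values (often in the range 0 to 1). Assuming Pillow reads the array's raw bytes for the explicitly given mode (the exact behaviour differs between Pillow versions, and recent releases deprecate the `mode` argument of `Image.fromarray`), each 4-byte float is read as four separate 8-bit samples, which looks like noise. This small NumPy sketch, with hypothetical pixel values, compares reinterpreting the bytes with actually scaling the values:

```python
import numpy as np

# Hypothetical float32 pixel values in [0, 1], as produced by .float() on normalized data.
pixels = np.array([0.0, 0.5, 1.0], dtype=np.float32)

# Reinterpret the same memory as unsigned bytes, roughly what an 8-bit RGB reader sees.
raw_bytes = pixels.view(np.uint8)

# Scaling to 0..255 first and then casting gives real 8-bit intensities.
scaled = (pixels * 255).astype(np.uint8)

print(raw_bytes.size)   # four bytes per float32 value
print(scaled.tolist())
```

The run-to-run variation is consistent with `shuffle=True` in the `DataLoader`: the sample stored at `images[7]` is a different image on each run.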

EDIT 2

I think the remaining issue is how the image is represented. Following this solution, I now have:

```python
import numpy as np

image = images[7]
image = image[0, ...].permute([1, 2, 0])
image = image.numpy()
image = (image * 255).astype(np.uint8)  # float values in [0, 1] -> 8-bit integers
img = Image.fromarray(image, 'RGB')
img.show()
```

This produces the expected image :-)
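`(image * 255).astype(np.uint8)` works when the values are already in [0, 1]. If the dataset applies normalization (for example mean/std normalization), values can fall outside that range, and casting out-of-range floats to `uint8` does not saturate, so such pixels can come out wrong. A slightly more defensive variant clips and rounds first. The helper name `to_pil_array` is hypothetical, and it assumes a `(1, C, H, W)` float array that is already on the CPU (for a tensor on `cuda:0`, call `.cpu()` before `.numpy()`):

```python
import numpy as np


def to_pil_array(batch: np.ndarray) -> np.ndarray:
    """Convert a hypothetical (1, C, H, W) float array in [0, 1] to a contiguous (H, W, C) uint8 array."""
    image = batch[0].transpose(1, 2, 0)   # (C, H, W) -> (H, W, C), like .permute(1, 2, 0)
    image = np.clip(image, 0.0, 1.0)      # clamp so out-of-range values cannot wrap around
    image = np.rint(image * 255.0)        # round to the nearest integer instead of truncating
    return np.ascontiguousarray(image, dtype=np.uint8)


# Usage with a random stand-in for images[7] (shape (1, 3, 416, 416)), including out-of-range values.
rng = np.random.default_rng(0)
fake_batch = rng.uniform(-0.2, 1.2, size=(1, 3, 416, 416)).astype(np.float32)
arr = to_pil_array(fake_batch)
print(arr.shape, arr.dtype)
```

The result can be passed to `Image.fromarray(arr)`; for a `uint8` array of shape `(H, W, 3)`, Pillow infers the RGB mode, so the explicit mode argument is not needed.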