Just to be clear, I am not interested in adding a constant packet loss rate to a link (as described in this Stack Overflow question). I want to observe packets being dropped naturally in the network due to congestion.

The aim of my project is to observe packet drop and delay in a network (preferably an SDN) while varying the qdisc buffer size on the router node. I have a basic topology of three hosts, h1, h2 and h3, connected to a router r. I am following this tutorial, but running the experiment in a custom environment. My code is shown below:

```python
import sys
import shelve
import os
import re
import time

import numpy as np
import matplotlib.pyplot as plt

from mininet.term import makeTerm
from mininet.net import Mininet
from mininet.node import Node, OVSKernelSwitch, Controller, RemoteController
from mininet.cli import CLI
from mininet.link import TCLink
from mininet.topo import Topo
from mininet.log import setLogLevel, info

DELAY = '110ms'  # r--h3 link
BBR = False


class LinuxRouter(Node):
    "A Node with IP forwarding enabled."

    def config(self, **params):
        super(LinuxRouter, self).config(**params)
        # Enable forwarding on the router
        info('enabling forwarding on ', self)
        self.cmd('sysctl net.ipv4.ip_forward=1')

    def terminate(self):
        self.cmd('sysctl net.ipv4.ip_forward=0')
        super(LinuxRouter, self).terminate()


class RTopo(Topo):
    def build(self, **_opts):
        defaultIP = '10.0.1.1/24'  # IP address for r-eth1
        r = self.addNode('r', cls=LinuxRouter)  # , ip=defaultIP )
        h1 = self.addHost('h1', ip='10.0.1.10/24', defaultRoute='via 10.0.1.1')
        h2 = self.addHost('h2', ip='10.0.2.10/24', defaultRoute='via 10.0.2.1')
        h3 = self.addHost('h3', ip='10.0.3.10/24', defaultRoute='via 10.0.3.1')
        self.addLink(h1, r, intfName1='h1-eth', intfName2='r-eth1', bw=80,
                     params2={'ip': '10.0.1.1/24'})
        self.addLink(h2, r, intfName1='h2-eth', intfName2='r-eth2', bw=80,
                     params2={'ip': '10.0.2.1/24'})
        # A queue=... argument is ignored by TCLink (its queue-length option is
        # max_queue_size); the tbf qdisc installed in main() sets the buffer.
        self.addLink(h3, r, intfName1='h3-eth', intfName2='r-eth3',
                     params2={'ip': '10.0.3.1/24'},
                     delay=DELAY)


def main():
    rtopo = RTopo()
    net = Mininet(topo=rtopo,
                  link=TCLink,
                  # switch=OVSKernelSwitch,
                  # controller=RemoteController,
                  autoSetMacs=True)  # --mac
    net.start()

    r = net['r']
    r.cmd('ip route list')
    # r's IPv4 addresses are set here, not above.
    r.cmd('ifconfig r-eth1 10.0.1.1/24')
    r.cmd('ifconfig r-eth2 10.0.2.1/24')
    r.cmd('ifconfig r-eth3 10.0.3.1/24')
    r.cmd('sysctl net.ipv4.ip_forward=1')

    h1 = net['h1']
    h2 = net['h2']
    h3 = net['h3']

    # UDP iperf server on h3
    h3.cmdPrint("iperf -s -u -i 1 &")
    # Remove the root qdisc that TCLink installed on r-eth3
    r.cmdPrint("tc qdisc del dev r-eth3 root")

    bsizes = []
    bsizes.extend(["1000mb", "10mb", "5mb", "1mb", "200kb"])
    bsizes.extend(["100kb", "50kb", "10kb", "5kb", "1kb", "100b"])
    pdrops = []
    delays = []
    init = 1
    # ping may print a fractional loss such as "3.33333% packet loss"
    pdrop_re = re.compile(r'([\d.]+)% packet loss')
    delay_re = re.compile(
        r'rtt min/avg/max/mdev = ([\d.]+)/([\d.]+)/([\d.]+)/([\d.]+) ms')
    bsizes.reverse()

    for bsize in bsizes:
        # 'add' the tbf qdisc the first time, 'replace' it afterwards
        if init:
            init = 0
            routercmd = "sudo tc qdisc add dev r-eth3 root tbf rate 18mbit limit {} burst 10kb".format(bsize)
        else:
            routercmd = "sudo tc qdisc replace dev r-eth3 root tbf rate 18mbit limit {} burst 10kb".format(bsize)
        r.cmdPrint(routercmd)

        # 20 Mbit/s of UDP from h1 towards h3 (runs in the background)
        h1.cmd("iperf -c 10.0.3.10 -u -b 20mb -t 30 -i 1 >>a1.txt &")
        # 30 pings from h2 to h3 (blocks until ping finishes)
        h2.cmd("ping 10.0.3.10 -c 30 >> a2.txt")
        print("Sleeping 30 seconds")
        time.sleep(30)

        # Below is the code to analyse delay and packet drop data
        with open("a2.txt", 'r') as f1:
            s = f1.read()

        l1 = pdrop_re.findall(s)
        # findall() with one group returns strings, so l1[-1] is the whole value
        pdrop = l1[-1]
        pdrops.append(float(pdrop))
        print("Packet Drop = {}%".format(pdrop))

        l2 = delay_re.findall(s)
        # fields are min/avg/max/mdev: index 2 is the max RTT, index 1 the average
        delay = l2[-1][2]
        delays.append(float(delay))
        print("Delay = {} ms".format(delay))

    bsizes = np.array(bsizes)
    delays = np.array(delays)
    pdrops = np.array(pdrops)

    plt.figure(0)
    plt.plot(bsizes, delays)
    plt.title("Delay")
    plt.savefig("delay.png")
    plt.show()

    plt.figure(1)
    plt.plot(bsizes, pdrops, 'r')
    plt.title("Packet Drop %")
    plt.savefig("pdrop.png")
    plt.show()

    for h in [r, h1, h2, h3]:
        h.cmd('/usr/sbin/sshd')
    CLI(net)
    net.stop()


if __name__ == '__main__':
    setLogLevel('info')
    main()
```
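Because `bsizes` holds strings such as `"200kb"`, matplotlib plots them as evenly spaced category labels rather than on a numeric axis. A minimal sketch that converts the buffer-size strings to bytes so they could be placed on a log axis; the helper `tc_size_to_bytes` is hypothetical, and the multipliers are an assumption based on iproute2's 1024-based size units (worth checking against the tc(8) man page for your version):

```python
import re

# ASSUMED tc size units (1024-based): bare number or "b" = bytes,
# "kb"/"mb"/"gb" = KiB/MiB/GiB, "kbit"/"mbit"/"gbit" = the same sizes in bits.
_TC_UNITS = {
    "": 1, "b": 1,
    "kb": 1024, "kbit": 1024 / 8,
    "mb": 1024 ** 2, "mbit": 1024 ** 2 / 8,
    "gb": 1024 ** 3, "gbit": 1024 ** 3 / 8,
}


def tc_size_to_bytes(size):
    """Convert a tc size string such as '200kb' or '100b' to a byte count."""
    m = re.fullmatch(r"\s*(\d+(?:\.\d+)?)\s*([a-zA-Z]*)\s*", size)
    if m is None:
        raise ValueError("not a tc size: {!r}".format(size))
    number, unit = m.groups()
    try:
        return int(float(number) * _TC_UNITS[unit.lower()])
    except KeyError:
        raise ValueError("unknown tc size unit: {!r}".format(unit)) from None


if __name__ == "__main__":
    for s in ["100b", "1kb", "200kb", "1000mb"]:
        print(s, tc_size_to_bytes(s))
```

With numeric x values, `plt.semilogx([tc_size_to_bytes(b) for b in bsizes], delays)` would space the eleven buffer sizes by magnitude instead of by list position.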

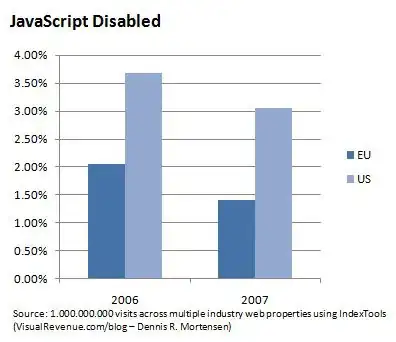

However, when I run the program, only the delay behaves as expected, increasing with the queue/buffer size. The packet drop stays constant (apart from the initial 3% packet drop, which occurs regardless of the queue size used). I am flummoxed by this: in theory, as the buffer size decreases there is less room to hold queued packets, so the chance of a packet being dropped should increase, as described in the tutorial. The graphs are shown below:

Graph depicting an increase in delay:

Graph depicting a stagnant packet drop:
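The expectation above can be made concrete with a toy drop-tail FIFO model. This is only a sketch and makes no claim about Mininet or about how tbf is implemented internally: a constant-rate source sends fixed-size packets into a buffer that drains at a fixed rate, and a packet is dropped if it does not fit. The 20 Mbit/s and 18 Mbit/s rates mirror the script; the function name `droptail_loss` and the 1470-byte packet size are assumptions.

```python
def droptail_loss(arrival_bps, service_bps, buffer_bytes, duration_s, pkt_bytes=1470):
    """Fraction of packets dropped by a toy drop-tail FIFO.

    A constant-rate source emits pkt_bytes-sized packets at arrival_bps and the
    queue drains at service_bps. An arriving packet that does not fit in the
    remaining buffer space (buffer_bytes) is dropped.
    """
    interval = pkt_bytes * 8 / arrival_bps      # seconds between arrivals
    drained = service_bps * interval / 8        # bytes served per interval
    n = int(duration_s / interval)              # packets sent in total
    if n == 0:
        raise ValueError("duration too short to send a packet")
    backlog = 0.0
    dropped = 0
    for _ in range(n):
        backlog = max(0.0, backlog - drained)   # serve since the last arrival
        if backlog + pkt_bytes <= buffer_bytes:
            backlog += pkt_bytes                # packet fits: enqueue it
        else:
            dropped += 1                        # buffer full: tail drop
    return dropped / n


if __name__ == "__main__":
    # 20 Mbit/s into an 18 Mbit/s bottleneck for 30 s, as in the script
    for label, size in [("10kb", 10 * 1024), ("1mb", 1024 ** 2),
                        ("5mb", 5 * 1024 ** 2), ("10mb", 10 * 1024 ** 2)]:
        loss = droptail_loss(20e6, 18e6, size, 30)
        print("{:>5}: {:.1%} dropped".format(label, loss))
```

In this model the loss approaches (20 - 18)/20 = 10% once the buffer is full, so a smaller buffer starts dropping sooner; a buffer larger than the 30-second backlog (2 Mbit/s × 30 s = 60 Mbit, i.e. 7.5 MB) never drops anything.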

I would like an explanation for this counterintuitive behaviour, and a way to observe packet drop in my example. Could it be related to Mininet (or SDNs in general) prioritising ICMP over UDP packets, so that the pings are not dropped? Or could it have something to do with the controller (I am using the default OpenFlow controller)?
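One possible way to see drops at the bottleneck directly, independent of ping, is to read the counters of the tbf qdisc on r-eth3. A minimal sketch that parses the statistics line printed by `tc -s qdisc show dev r-eth3`; the helper name `qdisc_stats` is hypothetical, and the regular expression assumes the usual iproute2 format shown in the comment:

```python
import re

# Matches the per-qdisc statistics line of `tc -s qdisc show`, e.g.
#  Sent 123456 bytes 789 pkt (dropped 12, overlimits 34 requeues 0)
_QDISC_STATS_RE = re.compile(
    r"Sent (\d+) bytes (\d+) pkt \(dropped (\d+), overlimits (\d+)")


def qdisc_stats(tc_output):
    """Return one (sent_pkts, dropped_pkts, overlimits) tuple per qdisc, in output order."""
    return [(int(m.group(2)), int(m.group(3)), int(m.group(4)))
            for m in _QDISC_STATS_RE.finditer(tc_output)]


if __name__ == "__main__":
    sample = " Sent 123456 bytes 789 pkt (dropped 12, overlimits 34 requeues 0)\n"
    print(qdisc_stats(sample))
```

Inside the loop, `qdisc_stats(r.cmd('tc -s qdisc show dev r-eth3'))` could be read right after the `tc qdisc add/replace` command and again after the traffic finishes; the difference in the dropped field is the number of packets the router discarded for that buffer size.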