Can you think of a faster algorithm for this problem? Or improve the code?

Problem:

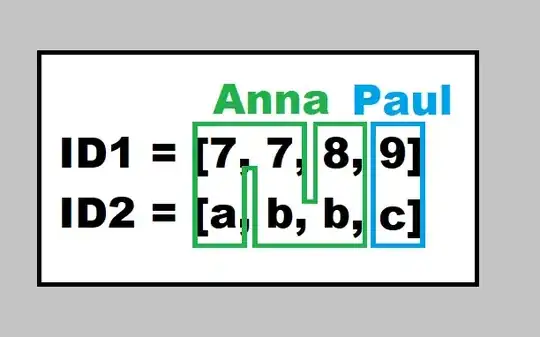

I have two customer IDs:

- ID1 (e.g. phone number)

- ID2 (e.g. email address)

A user sometimes change their ID1 and sometimes ID2. How can I find unique users?

Example:

ID1 = [7, 7, 8, 9]

ID2 = [a, b, b, c]

Desired result:

ID3 = [Anna, Anna, Anna, Paul]

The real world scenario has ca. 600 000 items per list.

There is already an SQL idea here: How can I match Employee IDs from 2 columns and group them into an array?

And I got help from a friend which had this idea with TypeScript: https://stackblitz.com/edit/typescript-leet-rewkmh?file=index.ts

A second friend of mine helped me with some pseudo-code, and I was able to create this:

Fastest (not working anymore) code so far:

ID1 = [7, 7, 8, 9]

ID2 = ["a", "b", "b", "c"]

def timeit_function(ID1, ID2):

def find_user_addresses():

phone_i = []

email_i = []

tmp1 = [ID1[0]]

tmp2 = []

tmp_index = []

while len(tmp1) != 0 or len(tmp2) != 0:

while len(tmp1) != 0:

tmp_index = []

for index, value in enumerate(ID1):

if value == tmp1[0]:

tmp2.append(ID2[index])

tmp_index.insert(-1, index)

for i in tmp_index:

del ID1[i]

del ID2[i]

tmp1 = list(dict.fromkeys(tmp1))

phone_i.append(tmp1.pop(0))

while len(tmp2) != 0:

tmp_index = []

for index, value in enumerate(ID2):

if value == tmp2[0]:

tmp1.append(ID1[index])

tmp_index.insert(0, index)

for i in tmp_index:

del ID1[i]

del ID2[i]

tmp2 = list(dict.fromkeys(tmp2))

email_i.append(tmp2.pop(0))

return phone_i, email_i

users = {}

i = 0

while len(ID1) != 0:

phone_i, email_i = find_user_addresses()

users[i] = [phone_i, email_i]

i += 1

return users

Output:

{0: [[7, 8], ['a', 'b']], 1: [[9], ['c']]}

Meaning: {User_0: [[phone1, phone2], [email1, email2]], User_1: [phone3, email3]}

Ranking

| rank | Username | %timeit | Unique users | Correct output? |

|---|---|---|---|---|

| 1. | Zachary Vance | 32 ms ± 1.9 ms per loop (mean ± std. dev. of 7 runs, 10 loops each) | 1408 | yes |

| 2. | igrinis | 5.54 s ± 81.7 ms per loop (mean ± std. dev. of 7 runs, 1 loop each) | 1408 | yes |

| (3.) | dkapitan | 8 s ± 106 ms per loop (mean ± std. dev. of 7 runs, 1 loop each) | 3606 | no |

| (4.) | thenarfer | 2.34 µs ± 3.25 µs per loop (mean ± std. dev. of 7 runs, 1 loop each) | 1494 | no |

The code was run with the two lists here (takes some time to load).