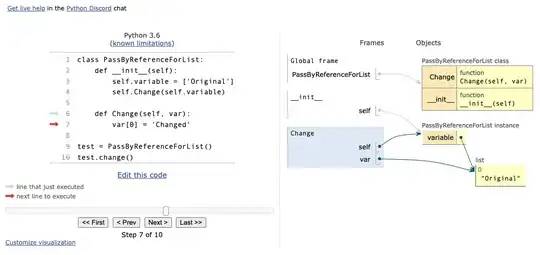

I built a deep-learning regression model with Keras in Python. Using 5-fold cross-validation (k=5), train_loss averaged about 0.10 across the five folds and validation_loss averaged about 0.12.
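As a rough illustration of the fold bookkeeping, here is a minimal sketch that uses NumPy only. A least-squares linear model stands in for the Keras network; this is an assumption made purely to keep the example small. The function names `kfold_losses` and `summarize` are hypothetical. Along with the mean train and validation losses, it reports how much the train/validation gap varies from fold to fold. If the 0.02 gap is smaller than that spread, it may not be distinguishable from ordinary variation between folds.

```python
import numpy as np


def kfold_losses(X, y, k=5, seed=0):
    """Return a list of (train_mse, val_mse), one pair per fold.

    The least-squares model is a hypothetical stand-in for the Keras network;
    only the cross-validation bookkeeping is the point here.
    """
    rng = np.random.default_rng(seed)
    indices = rng.permutation(len(X))
    folds = np.array_split(indices, k)  # k disjoint, roughly equal folds
    results = []
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        # Add a bias column and fit on the training folds only.
        X_tr = np.c_[X[train_idx], np.ones(len(train_idx))]
        X_va = np.c_[X[val_idx], np.ones(len(val_idx))]
        w, *_ = np.linalg.lstsq(X_tr, y[train_idx], rcond=None)
        train_mse = float(np.mean((X_tr @ w - y[train_idx]) ** 2))
        val_mse = float(np.mean((X_va @ w - y[val_idx]) ** 2))
        results.append((train_mse, val_mse))
    return results


def summarize(results):
    """Average the per-fold losses and measure how much the gap varies."""
    train, val = np.array(results).T
    gaps = val - train
    return {
        "mean_train": float(train.mean()),
        "mean_val": float(val.mean()),
        "mean_gap": float(gaps.mean()),
        "gap_std": float(gaps.std(ddof=1)),  # fold-to-fold spread of the gap
    }


if __name__ == "__main__":
    # Synthetic data for illustration only; not the data from the question.
    rng = np.random.default_rng(1)
    X = rng.normal(size=(200, 3))
    y = X @ np.array([1.0, -2.0, 0.5]) + rng.normal(scale=0.3, size=200)
    print(summarize(kfold_losses(X, y, k=5)))
```

With a real Keras model, the same loop would call `fit` on the training folds and `evaluate` on the held-out fold instead of `lstsq`.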

I assumed it was natural for validation_loss to be somewhat larger, because of outliers in the data (or values that sit right at the upper or lower limits).

However, I don't know how large the gap between validation_loss and train_loss has to be before it counts as overfitting.

Could you tell me whether there are criteria for deciding if a model is overfitting, what else I should check, or whether my thinking is fundamentally off?
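One check beyond the averaged gap is the shape of each fold's loss curves. Overfitting usually shows up as validation loss reaching a minimum and then rising again while training loss keeps falling. The following is a minimal sketch, assuming per-epoch histories such as Keras's `history.history["loss"]` and `history.history["val_loss"]`. The function name `diagnose_curves` and the 5% `tolerance` are hypothetical choices, not a standard criterion.

```python
def diagnose_curves(train_loss, val_loss, tolerance=0.05):
    """Heuristic overfitting check on per-epoch loss histories.

    tolerance is a hypothetical relative threshold for treating a rise in
    validation loss (after its minimum) as meaningful; it is an assumption.
    """
    train_loss, val_loss = list(train_loss), list(val_loss)
    if len(train_loss) != len(val_loss) or len(val_loss) == 0:
        raise ValueError("histories must be non-empty and the same length")
    # Epoch with the lowest validation loss (first one on ties).
    best = min(range(len(val_loss)), key=val_loss.__getitem__)
    # Relative rise of the final validation loss above its minimum.
    rise = (val_loss[-1] - val_loss[best]) / max(val_loss[best], 1e-12)
    # Did training loss keep improving after the validation minimum?
    train_still_falling = train_loss[-1] < train_loss[best]
    return {
        "best_epoch": best,
        "val_rise_since_best": rise,
        "train_still_falling": train_still_falling,
        "overfitting_suspected": rise > tolerance and train_still_falling,
    }
```

If validation loss is still flat or falling at the last epoch, a constant gap of this size is not by itself evidence of overfitting. Keras's `EarlyStopping` callback with `monitor="val_loss"` applies a similar idea during training.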

Here are example loss curves (2 of the 5 folds are shown).