I have recently deployed a Django backend application to AWS Lambda using Zappa.

After the lambda function has not been invoked for some time, the first request to be made takes from 10 to 15 seconds to be processed. At first I thought it would be because of the cold start but even for a cold start this time is unacceptable. Then, reading through Zappa's documentation I saw that it enables by default the keep_warm feature that sends a dummy request to the lambda function every 4 minutes to keep it warm; so this excessive delay in the response to the first request to the lambda is not due to a cold start.

Then, I started using tools such as AWS X-Ray and Cloudwatch Insights to try to find the explanation for the delay. Here is what I found out:

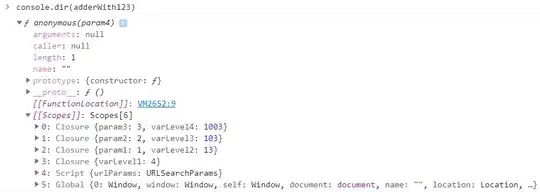

The invokation that takes a very long time to be processed is the following:

Crossed out in red are the names of the environment variables the application uses. They are all defined and assigned a value directly in the AWS Console. What I don't understand is, first of all, why it takes so long, and secondly, why it says the environment variables are casted as None. The application works perfectly (apart from the massive delay in the first request) so the environment variables are correctly set somewhere.

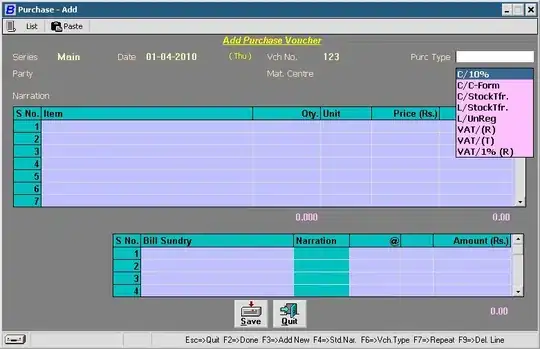

This request is made every two hours religiously and the first time someone invokes the lambda function in some time, as seen in the following chart:

The dots in the x axis correspond to Zappa's dummy requests to keep the server warm. The elevated dots correspond to the invocation shown in the previous image. Finally, the spike corresponds to a user invocation. The time it took to process is the sum of the time it takes to process the long invocation (the one shown in the first image) and the time it takes to process the longest http request the client makes to the server. This request was the following:

It was a regular login request that should be resolved much faster. Other requests that are probably more demanding than this one were resolved in less than 100ms.

So, to sum up:

- There is one lambda invocation that takes more than 10 seconds to be resolved. This corresponds to the first image shown. It is done every 2 hours and when a user makes a request to the server after it has been idle for some time.

- Some requests take more than 2 seconds to be resolved and I have no idea as to why this could be.

- Apart from these previous function invocations, all other requests are resolved in a reasonable time frame.

Any ideas as to why these invocations could be taking so much time is very much appreciated as I have spent quite some time trying to figure it out on my own and I have ran out of ideas. Thank you in advance!

Edit 1 (28/07/21): to further support my suspicion that this delay is not due to a cold start here is the "Segments Timeline" of the function in Cloudwatch/Application monitoring/Traces:

If it were a cold start, the delay should appear in the "Initialization" segment and not in the "Invocation" one.

Edit 2 (30/07/21): I forgot to mention that I had previously deployed the application using Elastic Beanstalk and didn't face this problem whatsoever so my code's performance is probably not the problem here.

Edit 3 (30/07/21): I found this thread in an AWS forum from 2016 regarding this exact issue. An AWS engineer mentioned that this behaviour is not by any means expected for a Lambda function outside of a VPC (like mine). Nevertheless, no answer was provided that explained the cause of the 10-15 seconds delay.

Edit 4 (03/08/21): I tried doubling the function's assigned memory (from 512 MB to 1024 MB) but it did not help.