I have a one column dataframe I ingested from a text file. So from this:

oneColDF = (spark.read

.format("text")

.load(file_path))

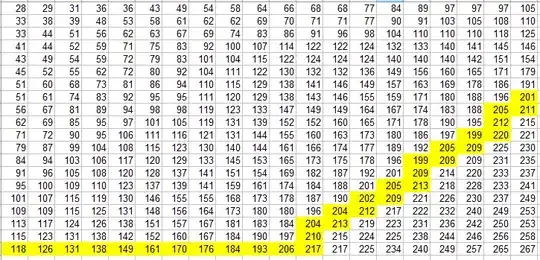

display(oneColDF)

, leading to this df:

with a hundred or so rows. There is no dependable delimiter in this format (for instance, a space won't work because some fields have spaces within), however, the columns are fixed-width, so i know column name and width for each field (all fields are strings). I know this because I was provided with dictionary:

fixed_width_column_defs = {

"submitted_at": (1, 15),

"order_id": (16, 40),

"customer_id": (56, 40),

"sales_rep_id": (96, 40),

"sales_rep_ssn": (136, 15),

"sales_rep_first_name": (151, 15),

"sales_rep_last_name": (166, 15),

"sales_rep_address": (181, 40),

"sales_rep_city": (221, 20),

"sales_rep_state": (241, 2),

"sales_rep_zip": (243, 5),

"shipping_address_attention": (248, 30),

"shipping_address_address": (278, 40),

"shipping_address_city": (318, 20),

"shipping_address_state": (338, 2),

"shipping_address_zip": (340, 5),

"product_id": (345, 40),

"product_quantity": (385, 5),

"product_sold_price": (390, 20)

}

So I can add empty columns like this:

multiColDF= oneColDF.withColumn('submitted_at',

lit(None).cast(StringType())).withColumn('order_id', lit(None).cast(StringType())).withColumn('customer_id', lit(None).cast(StringType())).withColumn('sales_rep_id', lit(None).cast(StringType())).withColumn('sales_rep_ssn', lit(None).cast(StringType())).withColumn('sales_rep_first_name', lit(None).cast(StringType())).withColumn('sales_rep_last_name', lit(None).cast(StringType())).withColumn('sales_rep_address', lit(None).cast(StringType())).withColumn('sales_rep_city', lit(None).cast(StringType())).withColumn('sales_rep_state', lit(None).cast(StringType())).withColumn('sales_rep_zip', lit(None).cast(StringType())).withColumn('shipping_address_attention', lit(None).cast(StringType())).withColumn('shipping_address_address', lit(None).cast(StringType())).withColumn('shipping_address_city', lit(None).cast(StringType())).withColumn('shipping_address_state', lit(None).cast(StringType())).withColumn('shipping_address_zip', lit(None).cast(StringType())).withColumn('product_id', lit(None).cast(StringType())).withColumn('product_quantity', lit(None).cast(StringType())).withColumn('product_sold_price', lit(None).cast(StringType()))

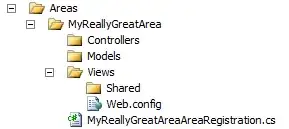

, so now the dataframe has all the columns:

So I am trying to figure out how to loop through the dataframe to update all the columns with the appropriate data from the value column.

for row in multiColDF.rdd.collect():

addTheDatafromValColToEachCol()

I am stuck at this point. I would be grateful for any ideas, either building on what i have done or perhaps a simpler solution. Thank you.