I was using Document OCR API to extract text from a pdf file, but part of it is not accurate. I found that the reason may be due to the existence of some Chinese characters.

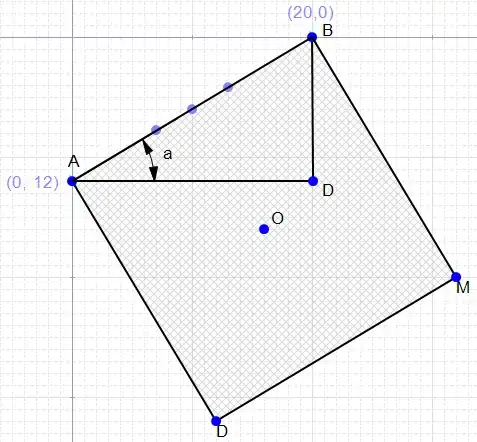

The following is a made-up example in which I cropped part of the region that the extracted text is wrong and add some Chinese characters to reproduce the problem.

When I use the website version, I cannot get the Chinese characters but the remaining characters are correct.

When I use Python to extract the text, I can get the Chinese characters correctly but part of the remaining characters are wrong.

The actual string that I got.

Are the versions of Document AI in the website and API different? How can I get all the characters correctly?

Update:

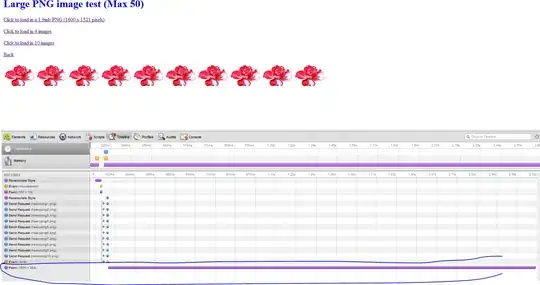

When I print the detected_languages (don't know why for lines = page.lines, the detected_languages for both lines are empty list, need to change to page.blocks or page.paragraphs first) after printing the text, I get the following output.

Code:

from google.cloud import documentai_v1beta3 as documentai

project_id= 'secret-medium-xxxxxx'

location = 'us' # Format is 'us' or 'eu'

processor_id = 'abcdefg123456' # Create processor in Cloud Console

opts = {}

if location == "eu":

opts = {"api_endpoint": "eu-documentai.googleapis.com"}

client = documentai.DocumentProcessorServiceClient(client_options=opts)

def get_text(doc_element: dict, document: dict):

"""

Document AI identifies form fields by their offsets

in document text. This function converts offsets

to text snippets.

"""

response = ""

# If a text segment spans several lines, it will

# be stored in different text segments.

for segment in doc_element.text_anchor.text_segments:

start_index = (

int(segment.start_index)

if segment in doc_element.text_anchor.text_segments

else 0

)

end_index = int(segment.end_index)

response += document.text[start_index:end_index]

return response

def get_lines_of_text(file_path: str, location: str = location, processor_id: str = processor_id, project_id: str = project_id):

# You must set the api_endpoint if you use a location other than 'us', e.g.:

# opts = {}

# if location == "eu":

# opts = {"api_endpoint": "eu-documentai.googleapis.com"}

# The full resource name of the processor, e.g.:

# projects/project-id/locations/location/processor/processor-id

# You must create new processors in the Cloud Console first

name = f"projects/{project_id}/locations/{location}/processors/{processor_id}"

# Read the file into memory

with open(file_path, "rb") as image:

image_content = image.read()

document = {"content": image_content, "mime_type": "application/pdf"}

# Configure the process request

request = {"name": name, "raw_document": document}

result = client.process_document(request=request)

document = result.document

document_pages = document.pages

response_text = []

# For a full list of Document object attributes, please reference this page: https://googleapis.dev/python/documentai/latest/_modules/google/cloud/documentai_v1beta3/types/document.html#Document

# Read the text recognition output from the processor

print("The document contains the following paragraphs:")

for page in document_pages:

lines = page.blocks

for line in lines:

block_text = get_text(line.layout, document)

confidence = line.layout.confidence

response_text.append((block_text[:-1] if block_text[-1:] == '\n' else block_text, confidence))

print(f"Text: {block_text}")

print("Detected Language", line.detected_languages)

return response_text

if __name__ == '__main__':

print(get_lines_of_text('/pdf path'))

It seems the language code is wrong, will this affect the result?