Problem: the WAV file loads and is processed by TarsosDSP's AudioDispatcher, but no sound plays.

First, the permissions:

```java
public void checkPermissions() {
    if (ContextCompat.checkSelfPermission(requireContext(), Manifest.permission.RECORD_AUDIO)
            != PackageManager.PERMISSION_GRANTED) {
        // Permission not granted yet: if the system suggests it, explain why it is needed.
        if (ActivityCompat.shouldShowRequestPermissionRationale(requireActivity(), Manifest.permission.RECORD_AUDIO)) {
            Toast.makeText(requireContext(), "Please grant permissions to record audio", Toast.LENGTH_LONG).show();
        }

        // Give the user the option to opt in to the permission anyway.
        ActivityCompat.requestPermissions(requireActivity(),
                new String[]{Manifest.permission.RECORD_AUDIO}, MY_PERMISSIONS_RECORD_AUDIO);

        // requestPermissions() is asynchronous, so this call runs before the user has answered the dialog.
        launchProfile();
    } else {
        // Permission already granted: proceed.
        launchProfile();
    }
}
```

Then the launchProfile() function:

```java
public void launchProfile() {
    AudioMethods.test(requireContext().getApplicationContext());

    // Other fragments that actually work with the audio file load after this,
    // but I want to get this working before anything else runs.
}
```

Then the AudioMethods.test function:

```java
public static void test(Context context) {
    String fileName = "audio-samples/samplefile.wav";

    try {
        releaseStaticDispatcher(dispatcher);

        TarsosDSPAudioFormat tarsosDSPAudioFormat = new TarsosDSPAudioFormat(
                TarsosDSPAudioFormat.Encoding.PCM_SIGNED,
                22050,  // sample rate in Hz
                16,     // sample size in bits; based on the screenshot from Audacity, should this be 32?
                1,      // channels (mono)
                2,      // frame size in bytes
                22050,  // frame rate in frames per second
                false); // big-endian? Standard RIFF WAV PCM data is little-endian on every device

        AssetManager assetManager = context.getAssets();
        AssetFileDescriptor fileDescriptor = assetManager.openFd(fileName);
        InputStream stream = fileDescriptor.createInputStream();

        // 1024-sample buffers, each overlapping the previous one by 512 samples.
        dispatcher = new AudioDispatcher(new UniversalAudioInputStream(stream, tarsosDSPAudioFormat), 1024, 512);

        // Not playing sound for some reason...
        final AudioProcessor playerProcessor =
                new AndroidAudioPlayer(tarsosDSPAudioFormat, 22050, AudioManager.STREAM_MUSIC);
        dispatcher.addAudioProcessor(playerProcessor);

        // AudioDispatcher is a Runnable: run it only on a background thread so that
        // processing the file does not block the calling (UI) thread.
        Thread audioThread = new Thread(dispatcher, "Test Audio Thread");
        audioThread.start();
    } catch (Exception e) {
        e.printStackTrace();
    }
}
```
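The frame size and frame rate passed to TarsosDSPAudioFormat are tied to the other arguments: a PCM frame holds one sample per channel, so frameSize = channels × sampleSizeInBits / 8, and for PCM the frame rate equals the sample rate. A small plain-Java sketch of that arithmetic (PcmFrameMath is a hypothetical name, not a TarsosDSP class) shows that switching 16 to 32 bits would also mean changing the frame size from 2 to 4:

```java
// Hypothetical helper, not a TarsosDSP class: derives the PCM frame size from the format.
final class PcmFrameMath {
    // Bytes per PCM frame: one sample per channel, sampleSizeInBits / 8 bytes each.
    static int frameSizeInBytes(int sampleSizeInBits, int channels) {
        if (sampleSizeInBits <= 0 || sampleSizeInBits % 8 != 0 || channels <= 0) {
            throw new IllegalArgumentException("expected whole-byte samples and at least one channel");
        }
        return channels * (sampleSizeInBits / 8);
    }

    public static void main(String[] args) {
        System.out.println(frameSizeInBytes(16, 1));         // 2 (the values used above)
        System.out.println(frameSizeInBytes(32, 1));         // 4 (if the file were 32-bit mono)
        System.out.println(22050 * frameSizeInBytes(16, 1)); // 44100 bytes per second
    }
}
```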

Console output (no errors, only this warning and a debug line):

```
W/AudioTrack: Use of stream types is deprecated for operations other than volume control
See the documentation of AudioTrack() for what to use instead with android.media.AudioAttributes to qualify your playback use case
D/AudioTrack: stop(38): called with 12288 frames delivered
```
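For scale, the D/AudioTrack line can be converted to playback time: with mono audio one frame is one sample, so 12288 frames at 22050 Hz is 12288 / 22050, roughly 0.56 s. The sketch below does that arithmetic and also counts dispatcher steps, assuming each step of an AudioDispatcher created with a 1024-sample buffer and a 512-sample overlap adds 1024 - 512 = 512 new samples. FrameMath is a hypothetical name, not part of TarsosDSP or Android.

```java
import java.util.Locale;

// Hypothetical helper for reading the AudioTrack log; not part of TarsosDSP or Android.
final class FrameMath {
    // Playback time, in seconds, represented by a number of frames at a given sample rate.
    static double framesToSeconds(long frames, int sampleRate) {
        return (double) frames / sampleRate;
    }

    // Dispatcher steps needed to produce `frames` new samples, assuming each step
    // advances by (bufferSize - overlap) samples. Rounds up for a partial last step.
    static long stepsForFrames(long frames, int bufferSize, int overlap) {
        long step = bufferSize - overlap;
        if (step <= 0) {
            throw new IllegalArgumentException("overlap must be smaller than the buffer size");
        }
        return (frames + step - 1) / step;
    }

    public static void main(String[] args) {
        long delivered = 12288; // from "stop(38): called with 12288 frames delivered"
        System.out.println(String.format(Locale.ROOT, "%.3f s", framesToSeconds(delivered, 22050))); // 0.557 s
        System.out.println(stepsForFrames(delivered, 1024, 512)); // 24
    }
}
```

Comparing those 0.56 s with the length Audacity reports for the file would show whether the whole file reached the AudioTrack before stop() was called.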

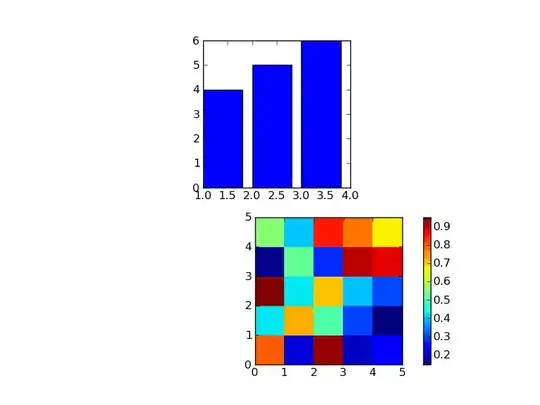

Because the AudioTrack is delivering frames, and there aren't any runtime errors, I'm assuming I'm just missing something dumb by either not having sufficient permissions or I've missed something in setting up my AndroidAudioPlayer. I got the 22050 number by opening the file in Audacity and looking at the stats there:
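To double-check what Audacity shows, the sample rate, channel count, bit depth and encoding can also be read directly from the WAV header (format code 1 is integer PCM, 3 is IEEE float). A minimal plain-Java sketch, not tied to TarsosDSP: the WavHeader class and its methods are hypothetical helpers, and it assumes a standard little-endian RIFF file, skipping any chunks that appear before "fmt ".

```java
import java.io.ByteArrayInputStream;
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not part of TarsosDSP or Android: reads the "fmt " chunk of a WAV file.
final class WavHeader {
    final int audioFormat;   // 1 = integer PCM, 3 = IEEE float, 0xFFFE = WAVE_FORMAT_EXTENSIBLE
    final int channels;
    final int sampleRate;
    final int bitsPerSample;

    private WavHeader(int audioFormat, int channels, int sampleRate, int bitsPerSample) {
        this.audioFormat = audioFormat;
        this.channels = channels;
        this.sampleRate = sampleRate;
        this.bitsPerSample = bitsPerSample;
    }

    // Reads the RIFF/WAVE header and returns the fields of the first "fmt " chunk,
    // skipping any chunks (such as LIST or JUNK) that come before it.
    static WavHeader read(InputStream in) throws IOException {
        DataInputStream data = new DataInputStream(in);
        byte[] riff = new byte[12];
        data.readFully(riff);
        if (!ascii(riff, 0).equals("RIFF") || !ascii(riff, 8).equals("WAVE")) {
            throw new IOException("not a RIFF/WAVE file");
        }
        byte[] chunkHeader = new byte[8];
        while (true) {
            data.readFully(chunkHeader); // EOFException if there is no "fmt " chunk
            String id = ascii(chunkHeader, 0);
            // RIFF integers are little-endian; the chunk size is an unsigned 32-bit value.
            long size = ByteBuffer.wrap(chunkHeader).order(ByteOrder.LITTLE_ENDIAN).getInt(4) & 0xFFFFFFFFL;
            if (id.equals("fmt ")) {
                if (size < 16) throw new IOException("fmt chunk too short");
                byte[] fmt = new byte[16];
                data.readFully(fmt);
                ByteBuffer b = ByteBuffer.wrap(fmt).order(ByteOrder.LITTLE_ENDIAN);
                return new WavHeader(b.getShort(0) & 0xFFFF, b.getShort(2) & 0xFFFF,
                        b.getInt(4), b.getShort(14) & 0xFFFF);
            }
            skipFully(data, size + (size & 1)); // chunks are padded to an even length
        }
    }

    private static String ascii(byte[] bytes, int offset) {
        return new String(bytes, offset, 4, StandardCharsets.US_ASCII);
    }

    private static void skipFully(DataInputStream in, long n) throws IOException {
        byte[] scratch = new byte[4096];
        while (n > 0) {
            int len = (int) Math.min(scratch.length, n);
            in.readFully(scratch, 0, len);
            n -= len;
        }
    }

    // Builds a canonical 44-byte header, only to exercise read() without a real file.
    static byte[] canonicalHeader(int audioFormat, int channels, int sampleRate, int bits, int dataBytes) {
        int blockAlign = channels * bits / 8;
        ByteBuffer b = ByteBuffer.allocate(44).order(ByteOrder.LITTLE_ENDIAN);
        b.put("RIFF".getBytes(StandardCharsets.US_ASCII)).putInt(36 + dataBytes);
        b.put("WAVE".getBytes(StandardCharsets.US_ASCII));
        b.put("fmt ".getBytes(StandardCharsets.US_ASCII)).putInt(16);
        b.putShort((short) audioFormat).putShort((short) channels);
        b.putInt(sampleRate).putInt(sampleRate * blockAlign);
        b.putShort((short) blockAlign).putShort((short) bits);
        b.put("data".getBytes(StandardCharsets.US_ASCII)).putInt(dataBytes);
        return b.array();
    }

    public static void main(String[] args) throws IOException {
        WavHeader h = read(new ByteArrayInputStream(canonicalHeader(1, 1, 22050, 16, 0)));
        System.out.println("format=" + h.audioFormat + " channels=" + h.channels
                + " rate=" + h.sampleRate + " bits=" + h.bitsPerSample);
        // prints "format=1 channels=1 rate=22050 bits=16"
    }
}
```

On Android, the stream from context.getAssets().open(fileName) could presumably be passed to read() the same way. If it reported format 3 or 32 bits, the file would not match the 16-bit signed PCM format declared in TarsosDSPAudioFormat.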

Any help is appreciated! Thanks :)