Anti-closing preamble: I have read the question "difference between penalty and loss parameters in Sklearn LinearSVC library", but I did not find the answer there specific enough. Therefore, I'm reformulating the question:

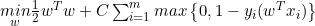

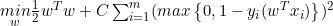

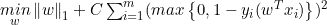

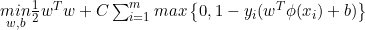

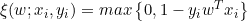

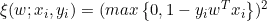

I am familiar with SVM theory and I'm experimenting with the `LinearSVC` class in Python. However, the documentation is not quite clear about the meaning of the `penalty` and `loss` parameters. I reckon that `loss` refers to the penalty for points violating the margin (the slack variables, usually denoted by the Greek letter ξ or ζ in the objective function), while `penalty` refers to the norm of the vector that determines the class boundary, usually denoted by w. Can anyone confirm or deny this?
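To make the interpretation above concrete, here is a minimal pure-Python sketch of the primal objective it implies: a regularizer on w selected by `penalty`, plus C times the sum of per-sample margin losses selected by `loss`. The helper names (`hinge`, `squared_hinge`, `primal_objective`) are hypothetical. The sketch assumes labels in {-1, +1}, an unregularized intercept b as in the textbook formulation, and the ½ factor on the L2 term but none on the L1 term. LIBLINEAR, which `LinearSVC` wraps, handles the intercept differently (via `intercept_scaling`), so these numbers are not expected to match scikit-learn exactly.

```python
def hinge(margin):
    # Hinge loss: zero once the point is on the correct side of the margin (margin >= 1)
    return max(0.0, 1.0 - margin)


def squared_hinge(margin):
    # Squared hinge loss (the default `loss` of LinearSVC)
    return max(0.0, 1.0 - margin) ** 2


LOSSES = {"hinge": hinge, "squared_hinge": squared_hinge}


def primal_objective(w, b, X, y, C=1.0, penalty="l2", loss="squared_hinge"):
    """Regularizer on w (chosen by `penalty`) + C * sum of margin losses (chosen by `loss`).

    Hypothetical helper: assumes y_i in {-1, +1} and an unregularized intercept b.
    """
    if penalty == "l2":
        reg = 0.5 * sum(wj * wj for wj in w)  # 1/2 ||w||_2^2
    elif penalty == "l1":
        reg = sum(abs(wj) for wj in w)  # ||w||_1, as in LASSO
    else:
        raise ValueError(f"unknown penalty: {penalty!r}")
    loss_fn = LOSSES[loss]
    data_term = 0.0
    for xi, yi in zip(X, y):
        # Functional margin y_i * (w . x_i + b); a value below 1 is a margin violation
        margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
        data_term += loss_fn(margin)
    return reg + C * data_term


# Toy data: only the third point violates the margin (its margin is 0.25)
X = [[1.0, 2.0], [-1.0, -1.5], [0.25, 0.25]]
y = [1, -1, 1]
w, b = [0.5, 0.5], 0.0

for penalty, loss in [("l2", "hinge"), ("l2", "squared_hinge"), ("l1", "squared_hinge")]:
    print(penalty, loss, primal_objective(w, b, X, y, penalty=penalty, loss=loss))
# l2 hinge 1.0
# l2 squared_hinge 0.8125
# l1 squared_hinge 1.5625
```

Under this reading, `loss` only changes how the slack of each violating point is charged, while `penalty` only changes how the size of w is measured.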

If my guess is right, then `penalty='l1'` would lead to minimisation of the L1 norm of the vector w, as in LASSO regression. How does this relate to the maximum-margin idea of the SVM? Can anyone point me to a publication on this question? In the original paper describing LIBLINEAR, I could not find any reference to an L1 penalty.
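If the guess is right, an L1 penalty should drive some coefficients of w to exactly zero, as LASSO does. A minimal way to check this empirically, assuming scikit-learn and NumPy are installed, is to fit both penalties on synthetic data where only a few features are informative and count the non-zero coefficients. The dataset parameters and `C=0.05` are arbitrary choices for illustration. Note that `LinearSVC` accepts `penalty='l1'` only together with `loss='squared_hinge'` and `dual=False`.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.svm import LinearSVC

# 20 features, of which only 3 carry signal (arbitrary illustrative setup)
X, y = make_classification(
    n_samples=200, n_features=20, n_informative=3, n_redundant=0, random_state=0
)

# L2 penalty: coefficients are shrunk but essentially never exactly zero
l2 = LinearSVC(penalty="l2", loss="squared_hinge", dual=False, C=0.05, max_iter=10000).fit(X, y)

# L1 penalty: only supported with squared hinge loss in the primal (dual=False)
l1 = LinearSVC(penalty="l1", loss="squared_hinge", dual=False, C=0.05, max_iter=10000).fit(X, y)

nnz_l2 = int(np.count_nonzero(l2.coef_))
nnz_l1 = int(np.count_nonzero(l1.coef_))
print(f"non-zero coefficients, penalty='l2': {nnz_l2} of {l2.coef_.size}")
print(f"non-zero coefficients, penalty='l1': {nnz_l1} of {l1.coef_.size}")
```

The exact counts depend on the data and on C; the point of the sketch is only the qualitative contrast between the two penalties.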

Also, if my guess is right, why doesn't `LinearSVC` support the combination of `penalty='l2'` and `loss='hinge'` (the standard combination in `SVC`) when `dual=False`? When I try it, I get the following error:

```
ValueError: Unsupported set of arguments
```
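One way to see exactly which combinations the installed version accepts is to try all eight and record which ones raise `ValueError`. This is a minimal sketch assuming scikit-learn is installed; the toy data and `max_iter` are arbitrary, and convergence warnings are silenced because only acceptance of the arguments matters here.

```python
import itertools
import warnings

from sklearn.datasets import make_classification
from sklearn.exceptions import ConvergenceWarning
from sklearn.svm import LinearSVC

X, y = make_classification(n_samples=100, n_features=5, random_state=0)

results = {}
for penalty, loss, dual in itertools.product(["l1", "l2"], ["hinge", "squared_hinge"], [True, False]):
    clf = LinearSVC(penalty=penalty, loss=loss, dual=dual, max_iter=5000)
    try:
        with warnings.catch_warnings():
            # Only interested in whether the arguments are accepted, not in convergence
            warnings.simplefilter("ignore", ConvergenceWarning)
            clf.fit(X, y)
        results[(penalty, loss, dual)] = "ok"
    except ValueError:
        results[(penalty, loss, dual)] = "ValueError"

for combo, outcome in sorted(results.items()):
    print(combo, outcome)
```

On recent scikit-learn versions this should report four accepted combinations: `l2`/`hinge` with `dual=True` only, `l2`/`squared_hinge` with either value of `dual`, and `l1`/`squared_hinge` with `dual=False` only. This appears to mirror the solver types LIBLINEAR offers, which include a dual solver for L2-regularized hinge loss but no primal one.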
