I am going to split this question in 3 parts

First, I've been given this problem, and I don't know where to start, if you have been solving related problem, would you give me some hints and keywords to help me do some more research?

I have done some research on my own

So here is some 2D chest CT scans (sorry due to reputation rule i can't implement images directly)

All photos are in the same angle. So I think I can simply read each photo to a vector of pixels, do some thresh holding to make all black and black-ish pixels going to be a non-colored pixel. Next, I'll create a vector called vector_of_photo of those vectors. Then the index of each vector in vector_of_photo are now the Z-index. Now I can render a 3d photo from those vectors of pixels right?

In the second place, I got trouble understand raycasting algorithm,

I think the idea here is, when I already got a box of pixel then everytime I rotate the box, it cast straight-lines from that angle of the camera to the box, each line found a has-colored pixel going to stop casting and render that pixel (or more specific, copy the pixel to the exactly location on the plane).

Did I understand it correctly?

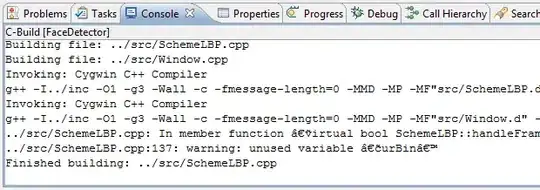

At last, the OPENGL/c++ part is just the option I think I'm going to use to solve this problem. And I'm not pretty sure it is a good idea or not, so give me some more hint about the programming language, library or module I should take a look at.