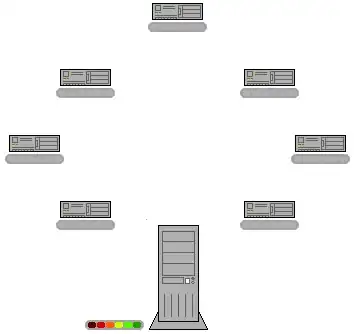

To my understanding, Spark works like this:

- Standard variables are serialized by the driver together with the lambda (or rather, the closure) and sent to the executors with every task that uses them.

- Broadcast variables are sent by the driver to the executors only once, the first time they are used.
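
To make the first point concrete, here is a minimal sketch without Spark. It assumes the closure is shipped using plain Java serialization; `ClosureSizeSketch`, `SerializableFunction`, `closureOver` and `serializedSize` are hypothetical names made up for this illustration, not part of any Spark API. It only shows that a captured map travels inside the serialized lambda:

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;
import java.util.HashMap;
import java.util.function.Function;

public class ClosureSizeSketch {

    // Hypothetical helper: a Function that is also Serializable, so that
    // lambdas assigned to it can be written with Java serialization.
    public interface SerializableFunction<T, R> extends Function<T, R>, Serializable {}

    // Serializes an object with plain Java serialization and returns the byte count.
    public static int serializedSize(Object o) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(o);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.size();
    }

    // Builds a lambda that captures a dictionary with the given number of entries.
    public static SerializableFunction<String, String> closureOver(int entries) {
        HashMap<String, String> dictionary = new HashMap<>();
        for (int i = 0; i < entries; i++) {
            dictionary.put("key" + i, "value" + i);
        }
        // The lambda captures 'dictionary', so the map is serialized along with it.
        return word -> dictionary.get(word);
    }

    public static void main(String[] args) {
        System.out.println("closure over 2 entries:     " + serializedSize(closureOver(2)) + " bytes");
        System.out.println("closure over 10000 entries: " + serializedSize(closureOver(10_000)) + " bytes");
    }
}
```

The serialized size grows with the captured dictionary, which is why I assume the cost of a standard variable is paid every time the closure is shipped.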

Is there any advantage to using a broadcast variable instead of a standard variable when we know it is used only once, so that there would be only one transfer even with a standard variable?

Example (Java):

    import java.util.HashMap;
    import java.util.Map;

    import org.apache.spark.SparkConf;
    import org.apache.spark.api.java.JavaSparkContext;
    import org.apache.spark.broadcast.Broadcast;

    public class SparkDriver {

        public static void main(String[] args) {
            String inputPath = args[0];
            String outputPath = args[1];

            // Small lookup table created on the driver
            Map<String, String> dictionary = new HashMap<>();
            dictionary.put("J", "Java");
            dictionary.put("S", "Spark");

            SparkConf conf = new SparkConf()
                    .setAppName("Try BV")
                    .setMaster("local");

            try (JavaSparkContext context = new JavaSparkContext(conf)) {
                // Wrap the dictionary in a broadcast variable
                final Broadcast<Map<String, String>> dictionaryBroadcast = context.broadcast(dictionary);

                context.textFile(inputPath)
                        .map(line -> { // just one transformation using BV
                            Map<String, String> d = dictionaryBroadcast.value();
                            String[] words = line.split(" ");
                            StringBuilder sb = new StringBuilder();
                            // Words missing from the dictionary are appended as "null"
                            for (String w : words)
                                sb.append(d.get(w)).append(" ");
                            return sb.toString();
                        })
                        .saveAsTextFile(outputPath); // just one action!
            }
        }
    }