Here is another answer, this one using scipy.spatial.KDTree. It is particularly efficient in the case where there aren't too many overlaps between the bounding boxes, and their "radius" distribution isn't too "wild" (radius.max() / np.median(radius) not too large, e.g. between 1 and 2).

It works both for p-norm 1 (Manhattan) or 2 (Euclidean), although in practice p=2 is faster, because on average each point see fewer candidates (the sum area of the circles is less than that of the diamonds).

Why is it fast? KD-trees are well suited for this kind of problems. They partition the space by splitting it at each node along a dimension and a mid-point. Once they are built, querying them is fast because of the divide-and-conquer approach they offer.

The key function is the following:

def find(kd_boxes, kd_points, bb, r, p):

# bb: m bounding boxes, in the form [x0,x1,y0,y1]

# find one bb for each point (the first one), or m if none

xy = kd_points.data

found = np.full(len(xy), len(bb), dtype=int)

cand = pad_jagged(kd_points.query_ball_tree(kd_boxes, r * 1.001, p=p))

remaining = None

for c in range(cand.shape[1]):

remaining = np.nonzero(cand[:, c] >= 0)[0] if remaining is None else remaining[np.nonzero(cand[remaining, 1] >= 0)[0]]

if remaining.size == 0:

break

i = remaining

j = cand[i, c]

ok = (bb[j, 0::2] <= xy[i]).all(1) & (xy[i] <= bb[j, 1::2]).all(1)

k = np.nonzero(ok)[0]

found[i[k]] = j[k]

remaining = remaining[~ok]

return found

Before calling this function, we compute a "radius" for each bounding box that is half of the p-norm of the diagonal. We then use the overall max radius r as a maximum distance for the KDTree queries. The KDTree query (kd_points.query_ball_tree()) efficiently sifters through all the bounding box centers, and finds in one call all the ones that are within the radius. This is a superset of the actual matches, but is fast and reduces substantially the search space. After that, it is a matter of filtering the points that actually are in the bounding box, and keeping track of the (first) matching bounding box for each point.

As an optimization (not implemented here), we could consider the the sizes of the bounding boxes (the radius array). If judged too disparate, then the bounding boxes could be split in two groups (e.g. around the np.median(radius)), and the same search could be done for each half (and again, recursively, if necessary).

For the OP example, after a bit of preparation to get centers, bounding boxes, and radius in a form easier to use (all Numpy arrays), that query returns:

# preparation

xy = df1[['longitude', 'latitude']].values

bb = df2[['minlong', 'maxlong', 'minlat', 'maxlat']].values

centers = bb @ np.array([[1,1,0,0], [0,0,1,1]]).T / 2

radius = np.linalg.norm(bb @ np.array([[-1,1,0,0], [0,0,-1,1]]).T, axis=1) / 2

kd_boxes = KDTree(centers)

kd_points = KDTree(xy)

# query

>>> kd_points.query_ball_tree(kd_boxes, radius.max() * 1.001)

[[0], [2], [2], [], [], [], [1], [], [0], [0]]

A similar result (in this example, identical) can be obtained with Manhattan norm instead:

radius = bb @ np.array([-1,1,-1,1]) / 2

>>> kd_points.query_ball_tree(kd_boxes, radius.max() * 1.001, p=1)

[[0], [2], [2], [], [], [], [1], [], [0], [0]]

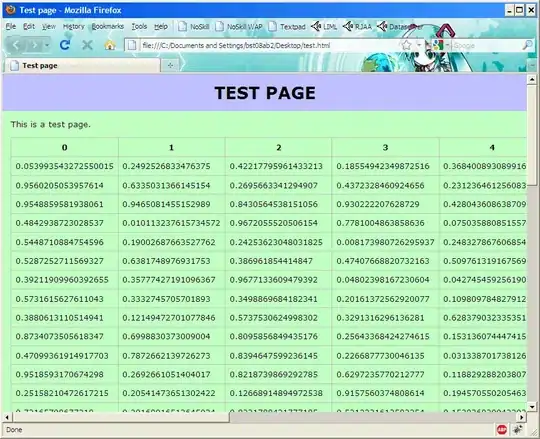

Both these solutions are plotted in the following figure, where all candidate bounding boxes are highlighted, along with the actual area within distance r of their center:

Note how, in both cases, point #2 is wrongly included in bbox #2. This first step, using KD-Trees, is just a filtering one. The next step is to check that, for each point, the candidates actually contain the point. With that filtering (the expression for the ok array), the solution is:

Let's see what happens if we have more points and bounding boxes, possibly with overlaps:

def gen_example(n, m, seed=None):

# let's make a more complex problem, with overlaps

if seed is not None:

np.random.seed(seed)

df1 = pd.DataFrame({

'latitude': np.random.uniform(0, 1 + 1 / np.sqrt(m), size=n),

'longitude': np.random.uniform(0, 1 + 1 / np.sqrt(m), size=n),

})

df2 = pd.DataFrame({

'minlat': np.random.uniform(size=m),

'maxlat': np.random.uniform(.5, 1, size=m) / np.sqrt(m),

'minlong': np.random.uniform(size=m),

'maxlong': np.random.uniform(.5, 1, size=m) / np.sqrt(m),

'STRING': [f'b_{i}' for i in range(m)],

})

df2['maxlat'] += df2['minlat']

df2['maxlong'] += df2['minlong']

return df1, df2

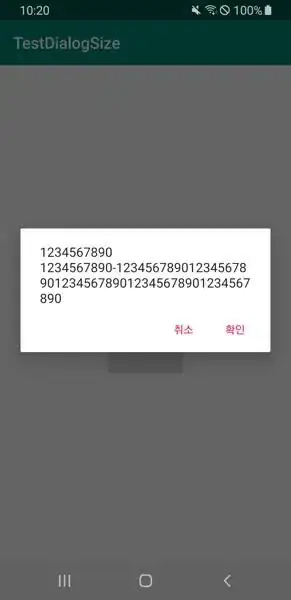

For df1, df2 = gen_example(32, 12, 0), the corresponding pictures are:

and the candidates (the result of the query, here for p=2) are:

>>> kd_cand = kd_points.query_ball_tree(kd_boxes, r * 1.001, p=2)

[[], [], [9], [], [], [10], [], [], [], [], [], [9], [10], [], [11], [11], [], [2, 6], [8], [8], [], [4], [7, 9], [4], [3, 5], [2, 4], [0], [], [7, 9], [7, 9], [0, 3, 5], [4]]

Since this is a jagged array, we turn it into a rectangular array with fill_value=-1:

def pad_jagged(a, fill_value=-1):

lens = np.array([len(r) for r in a])

v = np.array([e for r in a for e in r])

w = np.max(lens)

return np.insert(

v,

np.repeat(np.cumsum(lens), w - lens),

fill_value

).reshape(-1, w)

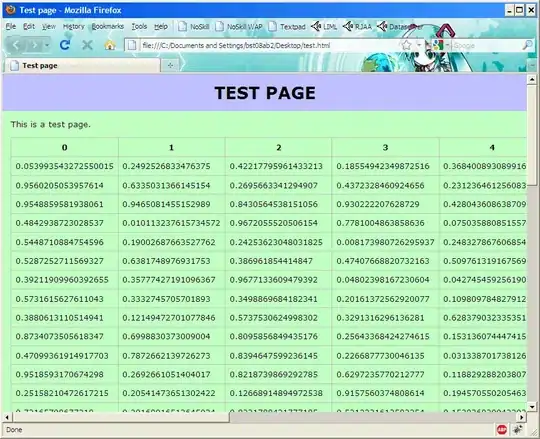

This gives, for the padded array above:

>>> pad_jagged(kd_cand)

[[-1 -1 -1]

[ 8 -1 -1]

[ 9 -1 -1]

...

[ 7 9 -1]

[ 0 3 5]

[ 4 -1 -1]]

Now, we iterate through the columns of this array, but in a greedy way that removes any successful matches from previous iterations (that's the reason for remaining).

Other functions to handle preprocessing etc. are:

def find_regions(xy, bb, p=2):

# xy: numpy array (n, 2)

# bb: numpy array (m, 4): the four columns are xmin, xmax, ymin, ymax

# for each point in xy, return the index of a region that contains it, or -1

centers = bb @ np.array([[1,1,0,0], [0,0,1,1]]).T / 2

assert p in {1,2}, "only 1- or 2-norm supported"

radius = bb @ np.array([-1,1,-1,1]) / 2 if p == 1 else np.linalg.norm(bb @ np.array([[-1,1,0,0], [0,0,-1,1]]).T, axis=1) / 2

kd_boxes = KDTree(centers)

kd_points = KDTree(xy)

return find(kd_boxes, kd_points, bb, radius.max(), p=p)

def find_region_label(df1, df2, nomatch=None, p=2):

found = find_regions(

df1[['longitude', 'latitude']].values,

df2[['minlong', 'maxlong', 'minlat', 'maxlat']].values,

p=p,

)

lbl = np.r_[df2['STRING'].values, [nomatch]]

return lbl[found]

On the OP example:

>>> df1.assign(LABEL=find_region_label(df1, df2))

latitude longitude LABEL

0 1.3 2.7 AAA

1 3.5 3.6 CCC

2 2.8 3.0 None

3 9.7 1.9 None

4 6.2 5.7 None

5 1.7 3.4 None

6 3.5 1.4 BBB

7 2.7 6.6 None

8 1.7 2.7 AAA

9 1.3 1.3 AAA

Speed test

And now for a speed test where both number of points n and number of bounding boxes m is larger:

df1, df2 = gen_example(100_000, 10_000, 0)

Speed comparison with @norok2's numba solution (slightly adapted to follow the OP's column names):

a = %timeit -o find_region_label(df1, df2)

# 222 ms ± 904 µs per loop (mean ± std. dev. of 7 runs, 1 loop each)

b = %timeit -o locate_in_regions_nb(df1, df2)

# 5.38 s ± 40.6 ms per loop (mean ± std. dev. of 7 runs, 1 loop each)

>>> b.average / a.average

24.255

That's a 24x speedup on this data.

Verify that we get the same solution:

sa = find_region_label(df1, df2)

sb = locate_in_regions_nb(df1, df2)

>>> (sa == sb).all()

True