I am trying to forecast a time series in Python by using auto_arima and adding Fourier terms as exogenous features. The data come from kaggle's Store item demand forecasting challenge. It consists of a long format time series for 10 stores and 50 items resulting in 500 time series stacked on top of each other. The specificity of this time series is that it has daily data with weekly and annual seasonalities.

In order to capture these two levels of seasonality I first used TBATS as recommended by Rob J Hyndman in Forecasting with daily data which worked pretty well actually.

I also followed this medium article posted by the creator of TBATS python library who compared it with SARIMAX + Fourier terms (also recommended by Hyndman).

But now, when I tried to use the second approach with pmdarima's auto_arima and Fourier terms as exogenous features, I get unexpected results.

In the following code, I only used the train.csv file that I split into train and test data (last year used for forecasting) and set the maximum order of Fourier terms K = 2.

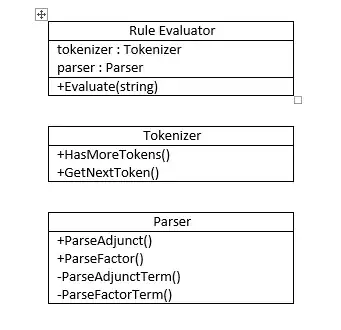

My problem is that I obtain a smoothed forecast (see Image below) that do not seem to capture the weekly seasonality which is different from the result at the end of this article. Is there something wrong with my code ?

Complete code :

# imports

import pandas as pd

from pmdarima.preprocessing import FourierFeaturizer

from pmdarima import auto_arima

import matplotlib.pyplot as plt

# Upload the data that consist in a long format time series of multiple TS stacked on top of each other

# There are 10 (stores) * 50 (items) = 500 time series

train_data = pd.read_csv('train.csv', index_col='date', parse_dates=True)

# Select only one time series for store 1 and item 1 for the purpose of the example

train_data = train_data.query('store == 1 and item == 1').sales

# Prepare the fourier terms to add as exogenous features to auto_arima

# Annual seasonality covered by fourier terms

four_terms = FourierFeaturizer(365.25, 2)

y_prime, exog = four_terms.fit_transform(train_data)

exog['date'] = y_prime.index # is exactly the same as manual calculation in the above cells

exog = exog.set_index(exog['date'])

exog.index.freq = 'D'

exog = exog.drop(columns=['date'])

# Split the time series as well as exogenous features data into train and test splits

y_to_train = y_prime.iloc[:(len(y_prime)-365)]

y_to_test = y_prime.iloc[(len(y_prime)-365):] # last year for testing

exog_to_train = exog.iloc[:(len(exog)-365)]

exog_to_test = exog.iloc[(len(exog)-365):]

# Fit model

# Weekly seasonality covered by SARIMAX

arima_exog_model = auto_arima(y=y_to_train, exogenous=exog_to_train, seasonal=True, m=7)

# Forecast

y_arima_exog_forecast = arima_exog_model.predict(n_periods=365, exogenous=exog_to_test)

y_arima_exog_forecast = pd.DataFrame(y_arima_exog_forecast , index = pd.date_range(start='2017-01-01', end= '2017-12-31'))

# Plots

plt.plot(y_to_test, label='Actual data')

plt.plot(y_arima_exog_forecast, label='Forecast')

plt.legend()

Thanks in advance for your answers !