I have created a reproducible example based on your question and have used Pandas built-in groupby(), resample() and arithmetic functions to obtain the desired output.

import pandas as pd

# Generate columns as per example

CustomerID = [152] * 7 + [159] * 6 + [162] * 3

ProductID = ['ABC'] * len(CustomerID)

TankID = ['xyz'] * len(CustomerID)

ConsumptionDateTime = (

pd.to_datetime(['2018-09-11 13:30:00',

'2018-09-11 14:30:00',

'2018-09-11 15:30:00',

'2018-09-11 21:30:00',

'2018-09-12 00:30:00',

'2018-09-12 18:30:00',

'2018-09-11 19:30:00'])

)

ConsumptionDateTime = (

ConsumptionDateTime

.append(

pd.date_range(start='2018-09-11 20:30:00',

end='2018-09-12 04:30:00',

freq='H')

)

)

ConsumptionEndTime = (

pd.to_datetime(['2018-09-11 14:30:00',

'2018-09-11 15:30:00',

'2018-09-11 21:30:00',

'2018-09-11 23:30:00',

'2018-09-12 18:30:00',

'2018-09-12 19:30:00',

'2018-09-11 22:30:00'])

)

ConsumptionEndTime = (

ConsumptionEndTime

.append(

ConsumptionDateTime[7:] + pd.Timedelta(1, unit='H')

)

)

ConsumptionRate = [0, 0, 25, 4, 29, 1, 0, 0, 4, 7, 5, 99, 41, 4, 0, 0]

# Create Pandas DataFrame

data = pd.DataFrame({'CustomerID': CustomerID,

'ProductID': ProductID,

'TankID': TankID,

'ConsumptionDateTime': ConsumptionDateTime,

'ConsumptionEndTime': ConsumptionEndTime,

'ConsumptionRate': ConsumptionRate})

# Set DateTimeIndex to enable resampling

data.set_index('ConsumptionDateTime', drop=False, inplace=True)

# Group data

grouped_data = data.groupby(['CustomerID', 'ProductID', 'TankID']).resample('D')

# Apply calculations and concatenate results in a single output

final_data = pd.concat([grouped_data['ConsumptionEndTime'].min().dt.date,

grouped_data['ConsumptionRate'].sum()],

axis=1)

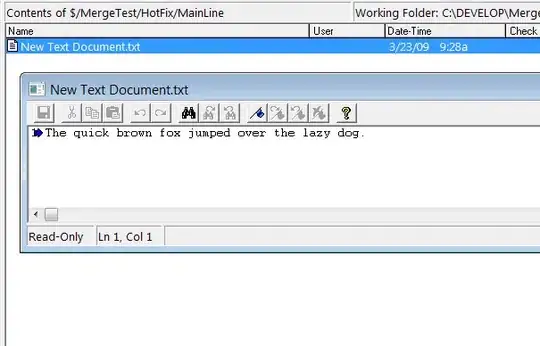

Note that I have set the ConsumptionDateTime as the DataFrame index before resampling the data from ~hourly to daily.

The resulting DataFrame looks like the following:

ConsumptionEndTime ConsumptionRate

CustomerID ProductID TankID ConsumptionDateTime

152 ABC xyz 2018-09-11 2018-09-11 29

2018-09-12 2018-09-12 30

159 ABC xyz 2018-09-11 2018-09-11 16

2018-09-12 2018-09-12 140

162 ABC xyz 2018-09-12 2018-09-12 4

I would like to sum the values of only Consumption rate column on a daily basis i.e convert hourly data to daily data.

I would like to sum the values of only Consumption rate column on a daily basis i.e convert hourly data to daily data. How to do this using Python?

How to do this using Python?