My team and I maintain a lot of small services that work together to get the job done. We have built some internal tooling and solutions that work for us, but we are always trying to improve.

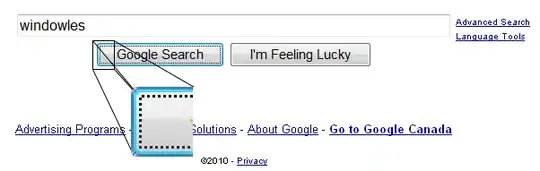

We have created a docker setup where we do something like this:

That is, we have a private network in place where the services can call each other by name and that works.

In this setup, if service-a calls service-b at http://localhost:6002, it doesn't work, because inside a container localhost refers to the container itself.

We have a scenario where we want to work on one module and run the rest in Docker. For example, we could run service-a directly from our IDE and leave service-b and service-c in Docker. The last service (service-c) we would then reference as localhost:6003 from the host network.

This works fine!
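
To make the two addressing modes concrete, here is a minimal sketch of how a service could pick the address of the service it calls. It is only an illustration, not our actual code: the `RUN_MODE` environment variable and the `service_url` helper are hypothetical names, and it assumes each container publishes the same port on the host that it listens on inside the private network.

```python
import os

# Ports taken from the examples above; the mapping itself is illustrative.
SERVICE_PORTS = {"service-b": 6002, "service-c": 6003}


def service_url(name, env=None):
    """Return the base URL for a service, depending on where the caller runs."""
    env = os.environ if env is None else env
    port = SERVICE_PORTS[name]
    # RUN_MODE is a hypothetical variable: "docker" when the caller runs inside
    # the private network, anything else (or unset) when started from the IDE.
    if env.get("RUN_MODE") == "docker":
        # Inside the Docker network, services are reachable by name.
        return f"http://{name}:{port}"
    # On the host, only the published port on localhost is reachable.
    return f"http://localhost:{port}"
```

With this, a containerized caller would get `http://service-b:6002`, while the same code started from the IDE would get `http://localhost:6002`.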

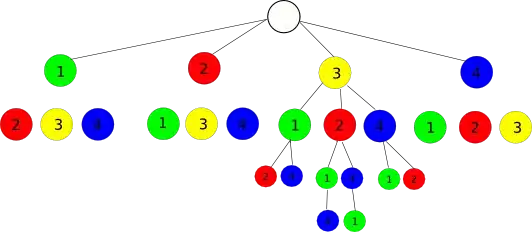

However, things can get out of hand the further down the chain we go. The example only has 3 services, but our longest chain is about 6 services. If I want to work on the second-to-last one, I have to start all the services that come before it in order to simulate a complete chain. (In most cases we work through APIs, which renders the point moot, but bear with me.) Something like this:

The QUESTION

Is there a way to support a situation like this one, where some of the services run as Docker containers and one service runs from my IDE, for example?

Or would I need to put all of them on the host network so that they can all call each other through localhost?

I appreciate any help!