With OpenCV, when I save my processed image in .png format, I get different colors than when I display it on screen.

(The code is at the end of the post.)

Here's what I get by displaying the image on screen with cv2.imshow('after', after) (that is what I want):

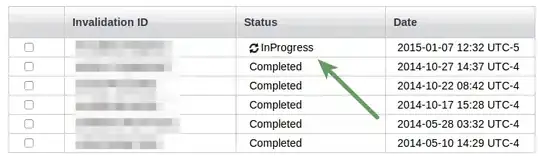

Here's what I get by saving the same image in .png with cv2.imwrite("debug.png", after) (that is not what I want):

The boxes and centers seem to be transparent: when I open the .png in VS Code, they are blackish like the VS Code background (see picture above), but when I open it in the Windows Photos app, they are white (see picture below).

Here's my code, which is kind of a fusion between this post and this post. It draws green boxes around the differences between two images and draws the center of each difference:

    from time import sleep

    import cv2
    import mss
    import numpy as np
    from skimage.metrics import structural_similarity


    def find_diff(before, after):
        # Convert the mss screenshots to NumPy arrays
        before = np.array(before)
        after = np.array(after)

        # Convert images to grayscale
        before_gray = cv2.cvtColor(before, cv2.COLOR_BGR2GRAY)
        after_gray = cv2.cvtColor(after, cv2.COLOR_BGR2GRAY)

        # Compute SSIM between two images
        (score, diff) = structural_similarity(before_gray, after_gray, full=True)
        diff = (diff * 255).astype("uint8")

        # Threshold the difference image, followed by finding contours to
        # obtain the regions of the two input images that differ
        thresh = cv2.threshold(diff, 0, 255, cv2.THRESH_BINARY_INV | cv2.THRESH_OTSU)[1]
        contours = cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
        # findContours returns 2 or 3 values depending on the OpenCV version
        contours = contours[0] if len(contours) == 2 else contours[1]

        centers = []
        for c in contours:
            area = cv2.contourArea(c)
            if area > 40:
                # Find centroid
                M = cv2.moments(c)
                cX = int(M["m10"] / M["m00"])
                cY = int(M["m01"] / M["m00"])
                centers.append((cX, cY))
                cv2.circle(after, (cX, cY), 2, (320, 159, 22), -1)

                # Draw boxes on diffs
                x, y, w, h = cv2.boundingRect(c)
                cv2.rectangle(after, (x, y), (x + w, y + h), (36, 255, 12), 2)

        cv2.imwrite("debug.png", after)
        cv2.imshow('after', after)
        cv2.waitKey(0)
        return centers


    before = mss.mss().grab((466, 325, 1461, 783))
    sleep(3)
    after = mss.mss().grab((466, 325, 1461, 783))
    relative_diffs = find_diff(before, after)

I can't figure out which step goes wrong. I do pass blue and green colors when calling cv2.circle() and cv2.rectangle().