You can do this with the HTTP plugin, but the endpoint will only receive the file content as plain text inside the HTTP request body.
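Because the endpoint sees the file only as raw text in the request body, any structure has to be recovered on the receiving side. The following is a minimal Python sketch, assuming the sink forwards the CSV lines as-is (depending on how the sink's message format is configured, you may first need to unwrap a JSON payload). `parse_csv_body` is a hypothetical helper, not part of Data Fusion.

```python
import csv
import io
from typing import List


def parse_csv_body(body: bytes, encoding: str = "utf-8") -> List[List[str]]:
    """Split a raw HTTP request body into CSV rows.

    Assumption: the body holds plain CSV text, one record per line.
    """
    text = body.decode(encoding)
    # csv.reader handles quoted fields, embedded commas and \r\n line endings;
    # blank lines come back as empty lists, so they are filtered out.
    return [row for row in csv.reader(io.StringIO(text)) if row]


if __name__ == "__main__":
    sample = b"id,name\n1,alice\n2,bob\n"  # hypothetical sample body
    for row in parse_csv_body(sample):
        print(row)
```

Inside a Tornado handler you could pass `self.request.body` to this function instead of printing the raw bytes.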

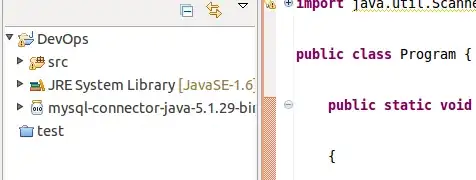

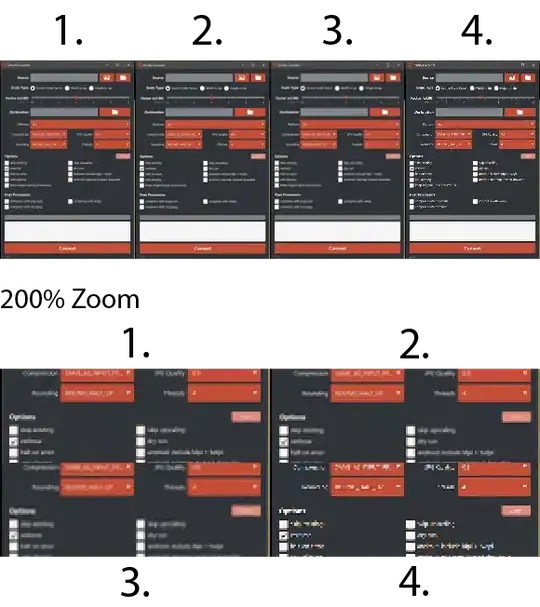

In Cloud Data Fusion, create a pipeline (you have already done this, but you can try a newer version):

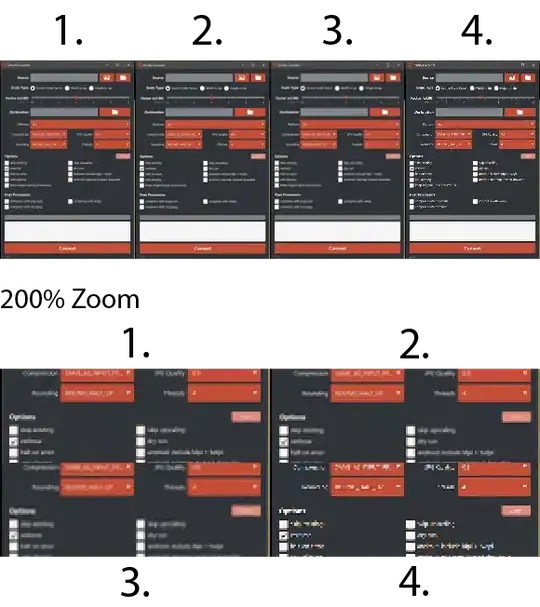

GCS Configuration:

For the endpoint, I created a VM in Google Compute Engine.

HTTP Plugin Configuration:

Before running the sink, install an HTTP service on the VM, for example the Tornado web server:

```
$ sudo apt install python3 python3-pip
$ pip3 install tornado
```

Create a script like the one below to observe the incoming HTTP requests:

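```python
#!/usr/bin/env python
# echo.py: a minimal Tornado server that prints every POST request it receives
import pprint

import tornado.ioloop
import tornado.web


class MyDumpHandler(tornado.web.RequestHandler):
    def post(self):
        # Print the request metadata (method, URI, headers, remote IP, ...)
        pprint.pprint(self.request)
        # Print the raw body, which carries the data sent by the HTTP sink
        pprint.pprint(self.request.body)


if __name__ == "__main__":
    # Route every path to the handler and listen on port 8080
    tornado.web.Application([(r"/.*", MyDumpHandler)]).listen(8080)
    tornado.ioloop.IOLoop.current().start()
```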

Save the script as `echo.py` and run it with `python echo.py` or `python3 echo.py`, depending on which Python version is installed on the VM running the web server.
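Before wiring up the pipeline, it can help to check that the echo server prints what you expect. Below is a small stdlib-only Python client sketch that POSTs a few text lines to the server, roughly imitating what the sink delivers. The `ENDPOINT` value, the sample rows and the `build_post` helper are hypothetical; replace the URL with your VM's address and port.

```python
import urllib.request

# Hypothetical endpoint: replace with your VM's external IP and port.
ENDPOINT = "http://localhost:8080/"
SAMPLE = b"id,name\n1,alice\n2,bob\n"  # hypothetical test rows


def build_post(url: str, body: bytes) -> urllib.request.Request:
    """Build a POST request carrying `body` as plain text."""
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "text/plain; charset=utf-8"},
        method="POST",
    )


if __name__ == "__main__":
    try:
        with urllib.request.urlopen(build_post(ENDPOINT, SAMPLE), timeout=5) as resp:
            # echo.py prints the request on the server side; here we only see the status.
            print("status:", resp.status)
    except OSError as exc:  # URLError and HTTPError are subclasses of OSError
        print("could not reach", ENDPOINT, "-", exc)
```

If the server is running, the body should appear in the `echo.py` console output as a bytes string.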

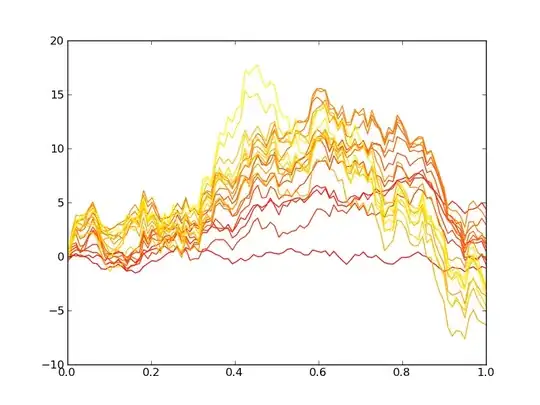

Below is the response:

The CSV file contains only 2 rows for testing purposes: