The error 403 Provided scope(s) are not authorized shows that the service account you're using for the copy isn't authorized to write an object to the kukroid-gcp bucket.

Based on your screenshot, you are using the Compute Engine default service account, and by default it does not have write access to the bucket. To check which scopes your service account has, you can use curl from the VM to query the GCE metadata server:

curl -H 'Metadata-Flavor: Google' "http://metadata.google.internal/computeMetadata/v1/instance/service-accounts/default/scopes"

You can add the output of that command to your post as additional information. If none of the listed scopes gives you write access to the storage bucket, you will need to add the necessary scopes. The Google Cloud documentation explains how access scopes work and lists all available scopes.
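To read the scope list quickly, a small helper along these lines may be useful. It is only a sketch: the function name `has_storage_write_scope` is made up for illustration, and it assumes that the `cloud-platform`, `devstorage.read_write` and `devstorage.full_control` scopes are the ones that allow writing objects. The sample input stands in for the curl output, since the metadata server is only reachable from inside the VM.

```shell
# Hypothetical helper: reads newline-separated scope URLs on stdin and
# exits 0 if any of them allows writing to Cloud Storage.
has_storage_write_scope() {
  grep -Eq '/auth/(cloud-platform|devstorage\.(read_write|full_control))$'
}

# Sample input standing in for the metadata server response.
# On the VM, pipe the curl command shown above into the function instead.
printf '%s\n' \
  'https://www.googleapis.com/auth/devstorage.read_only' \
  'https://www.googleapis.com/auth/logging.write' \
  | has_storage_write_scope && echo "write scope present" || echo "no write scope"
# prints "no write scope"
```

If the check reports no write scope, the VM can read from the bucket at most, which would match the 403 you are seeing on upload.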

Another option is to create a new service account instead of using the default one. Here is the step-by-step process, which does not require deleting the existing VM:

- Stop the VM instance

- In the console, go to IAM & Admin > Service Accounts > Add service account

- Create the new service account with the Cloud Storage Admin role

- Create a private key for this service account

- After creating the new service account, go to the VM, click on its name, then click Edit

- In edit mode, scroll down to the service account section and select the new service account

- Start your instance, then try to copy the file again
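If you prefer the command line, the same steps can be sketched with gcloud roughly as follows. The project, zone, instance and service account names are hypothetical placeholders, and `--scopes=cloud-platform` is my assumption (it leaves the actual restriction to the IAM role). The script only prints the commands unless you set `DRY_RUN=0`. The private key step is left out because a service account attached to a VM gets its credentials from the metadata server.

```shell
#!/bin/sh
# Hypothetical placeholders: replace with your own values.
PROJECT="my-project"
ZONE="us-central1-a"
INSTANCE="my-vm"
SA_NAME="bucket-writer"
SA_EMAIL="${SA_NAME}@${PROJECT}.iam.gserviceaccount.com"

# Prints each command by default; set DRY_RUN=0 to actually run them.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

swap_service_account() {
  # Stop the VM; its service account can only be changed while it is stopped.
  run gcloud compute instances stop "$INSTANCE" --zone="$ZONE"
  # Create the new service account.
  run gcloud iam service-accounts create "$SA_NAME" --project="$PROJECT"
  # Grant it the Storage Admin role on the project.
  run gcloud projects add-iam-policy-binding "$PROJECT" \
    --member="serviceAccount:${SA_EMAIL}" --role="roles/storage.admin"
  # Attach the new service account to the VM (scope choice is an assumption).
  run gcloud compute instances set-service-account "$INSTANCE" --zone="$ZONE" \
    --service-account="$SA_EMAIL" --scopes="cloud-platform"
  # Start the VM again.
  run gcloud compute instances start "$INSTANCE" --zone="$ZONE"
}

swap_service_account
```

Review the printed commands first, then rerun with `DRY_RUN=0` once the placeholder values match your project.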

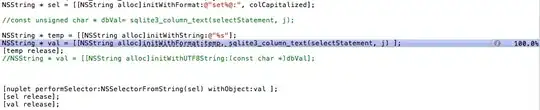

My Bucket Details

Can anyone suggest what I am missing and how to fix this?