I'm trying to build a regression neural network. My problem is that the accuracy metric stays at 0.0027 from the very beginning of training. I have tried changing the number of hidden layers and neurons, the learning rate, the optimizer, the loss function, the activation function of the hidden layers, and the batch size, but nothing seems to work. I even tried training without scaling the output data (and without an output activation function): accuracy was then essentially zero (it showed 0.0000e-4 or something like that), and the loss did decrease but got stuck at 56. So I suspect it has something to do with how the values are scaled, but I don't know how to tackle this.

To build the regression network, I created my own fictional dataset. It relates group (blue or pink), age, and IQ to the result of a nonexistent exam.

After creating the dataset, I built the neural network using TensorFlow. This is my code:

    import pandas as pd
    import numpy as np
    from sklearn.preprocessing import StandardScaler
    from tensorflow import keras

    def preprocess_dataframe(dataframe):
        # Encode the group column: blue -> 0, anything else (pink) -> 1
        for index, row in dataframe.iterrows():
            if row['group'] == 'blue':
                dataframe.at[index, 'group'] = 0
            else:
                dataframe.at[index, 'group'] = 1

        data = dataframe.to_numpy()
        # Column 3 ('result') is the target; the remaining columns are features
        data_X = np.delete(data, 3, 1)
        data_y = data[:, 3]
        data_y = np.asarray(data_y).astype(np.float32)
        # Scale the target by dividing by 100
        data_y = data_y / 100

        # 7000 / 1500 / 1500 split into train / validation / test
        X_train, X_val, X_test = data_X[:7000], data_X[7000:8500], data_X[8500:]
        y_train, y_val, y_test = data_y[:7000], data_y[7000:8500], data_y[8500:]

        # Fit the scaler on the training features only
        scaler = StandardScaler()
        X_train_scaled = scaler.fit_transform(X_train)
        X_val_scaled = scaler.transform(X_val)
        X_test_scaled = scaler.transform(X_test)

        return X_train_scaled, X_val_scaled, X_test_scaled, y_train, y_val, y_test

    df = pd.read_csv('data.csv')
    X_train, X_val, X_test, y_train, y_val, y_test = preprocess_dataframe(df)

    model = keras.models.Sequential([
        keras.layers.InputLayer(input_shape=[3]),
        keras.layers.Dense(30, activation='relu'),
        keras.layers.Dense(1, activation='sigmoid')
    ])

    model.compile(
        loss='mse',
        optimizer=keras.optimizers.SGD(learning_rate=0.01),
        metrics=['accuracy']
    )

    history = model.fit(
        X_train,
        y_train,
        epochs=1000,
        validation_data=(X_val, y_val)
    )

    model.evaluate(X_test, y_test)

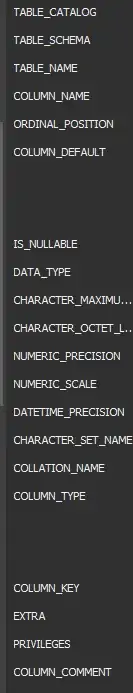
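One thing worth checking is whether the flat accuracy comes from the metric itself rather than from training. Below is a minimal NumPy sketch. It assumes that Keras resolves `metrics=['accuracy']` to a binary-accuracy-style check for a single-output model: threshold the prediction at 0.5, then test for exact equality with the target. The function `binary_style_accuracy` and the random `results` array are hypothetical stand-ins, not the real `data.csv`.

```python
import numpy as np

def binary_style_accuracy(y_true, y_pred, threshold=0.5):
    """Fraction of samples whose thresholded prediction equals the target exactly."""
    y_pred_class = (y_pred > threshold).astype(np.float32)
    return float(np.mean(y_true == y_pred_class))

rng = np.random.default_rng(0)

# Hypothetical exam results: integers in 0..100, divided by 100 as in the question.
results = rng.integers(0, 101, size=10_000)
y_true = (results / 100).astype(np.float32)

# Pretend the model is perfect: predictions equal the targets.
y_pred = y_true.copy()

acc = binary_style_accuracy(y_true, y_pred)
mse = float(np.mean((y_true - y_pred) ** 2))

# Share of targets that are exactly 0.0 or 1.0 (result 0 or 100).
exact_01 = float(np.mean((results == 0) | (results == 100)))

print(f"mse={mse}, accuracy={acc}, share of 0/100 results={exact_01}")
```

If that assumption holds, even a perfect regressor scores an "accuracy" equal to the share of targets that are exactly 0 or 1. A constant value such as 0.0027 would then say nothing about fit quality, and a regression metric such as mean absolute error (`metrics=['mae']`) would be more informative.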

The loaded dataframe looks like this:

          group  age          iq  result
    0      blue   77  114.600366      47
    1      blue   88  105.094111      52
    2      pink   18  143.150909      39
    3      blue   76   94.557100      34
    4      pink   21  149.144244      40
    ...     ...  ...         ...     ...
    9995   blue   68  179.515230      77
    9996   blue   28  194.699500      74
    9997   pink   20  166.325281      50
    9998   blue   49   89.103387      28
    9999   pink   36  185.689921      73
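
For anyone who wants to reproduce the preprocessing without `data.csv`, here is a minimal sketch built from only the first five rows shown above. The variable name `sample` is made up for illustration. The vectorized comparison is intended as an equivalent of the `iterrows` loop, not the code I actually ran.

```python
import numpy as np
import pandas as pd

# First five rows copied from the printout above; the rest of data.csv is not reproduced.
sample = pd.DataFrame({
    "group": ["blue", "blue", "pink", "blue", "pink"],
    "age": [77, 88, 18, 76, 21],
    "iq": [114.600366, 105.094111, 143.150909, 94.557100, 149.144244],
    "result": [47, 52, 39, 34, 40],
})

# Same encoding as the iterrows loop: blue -> 0, anything else -> 1.
sample["group"] = (sample["group"] != "blue").astype(int)

# Features and target, with the target divided by 100 as in preprocess_dataframe.
X = sample[["group", "age", "iq"]].to_numpy(dtype=np.float32)
y = sample["result"].to_numpy(dtype=np.float32) / 100

print(X.shape)
print(y)
```

The targets produced this way are continuous values such as 0.47 and 0.52, not class labels.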