```python
from scipy.optimize import curve_fit

def func(x, a, b):
    # Straight line: a is the slope, b is the intercept
    return a * x + b

x = [0, 1, 2, 3]
y = [160, 317, 3302, 16002]
yerr = [0.0791, 0.0562, 0.0174, 0.0079]  # uncertainty of each y value

curve_fit(func, x, y, sigma=yerr)
```

I am trying to do a weighted least-squares linear regression with the code above, using SciPy's curve_fit function. From this I need to get the slope and the error of the fit. I think I figured out how to get the slope using the code below, but I don't know how to extract the error of the fit.

```python
# Code to get the slope (correct me if I'm wrong!!)
# curve_fit returns a tuple (popt, pcov), so unpack it first
popt, pcov = curve_fit(func, x, y, sigma=yerr)

slope = popt[0]
```

Thank you!

======================================

Edit: I have been doing some research and I think I might have figured everything out for myself. I will do my best to explain it!

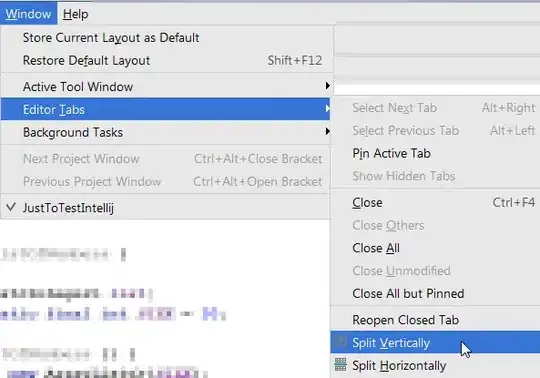

As shown in the image above, I pass this function to curve_fit, with a and b as my parameters for the slope and intercept, respectively.

curve_fit returns a tuple of two arrays: popt, a 1D array, and pcov, a 2D array.
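A minimal sketch of that return value, reusing the data from the question (converted to NumPy arrays, which is my own choice for convenience). Unpacking the tuple gives popt with one entry per fitted parameter and pcov as a square matrix with one row and column per parameter.

```python
import numpy as np
from scipy.optimize import curve_fit

def func(x, a, b):
    # Straight line: a is the slope, b is the intercept
    return a * x + b

x = np.array([0, 1, 2, 3], dtype=float)
y = np.array([160, 317, 3302, 16002], dtype=float)
yerr = np.array([0.0791, 0.0562, 0.0174, 0.0079])

# curve_fit returns a tuple (popt, pcov), so unpack it into two names
popt, pcov = curve_fit(func, x, y, sigma=yerr)

print(popt.shape)  # → (2,)   one entry per parameter (a, b)
print(pcov.shape)  # → (2, 2) one row/column per parameter
```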

popt contains the optimized values of my parameters, so in this case popt[0] is the slope (green) and popt[1] is the intercept (red).
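To see what "optimized" means here: with sigma given, curve_fit looks for the a and b that minimize the weighted sum of squared residuals, sum(((y - func(x, a, b)) / yerr)**2). For a straight line that minimum also has a closed form (the weighted normal equations with weights 1/yerr**2), so the sketch below solves it directly with NumPy as an independent cross-check. This is not how curve_fit computes its result internally (it runs an iterative optimizer), and the data are again the question's values as NumPy arrays.

```python
import numpy as np
from scipy.optimize import curve_fit

def func(x, a, b):
    # Straight line: a is the slope, b is the intercept
    return a * x + b

x = np.array([0, 1, 2, 3], dtype=float)
y = np.array([160, 317, 3302, 16002], dtype=float)
yerr = np.array([0.0791, 0.0562, 0.0174, 0.0079])

popt, pcov = curve_fit(func, x, y, sigma=yerr)
slope, intercept = popt  # popt[0] is a (slope), popt[1] is b (intercept)

# Weighted least squares by hand: minimize sum(w * (y - (a*x + b))**2)
# with w = 1 / yerr**2, by solving (X^T W X) beta = X^T W y
X = np.column_stack([x, np.ones_like(x)])  # column order matches (a, b)
W = np.diag(1.0 / yerr**2)
beta = np.linalg.solve(X.T @ W @ X, X.T @ W @ y)

print("curve_fit:       ", slope, intercept)
print("normal equations:", beta[0], beta[1])
print("agree:", np.allclose(popt, beta, rtol=1e-6))
```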

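Next, pcov is the estimated covariance matrix of the parameters. What I'm mainly looking for here are the values on the diagonal, as these are the variances of the parameters. Again I color coded these: the variance of the slope is pcov[0,0] (green) and the variance of the intercept is pcov[1,1] (red). The error (one standard deviation) of each parameter is the square root of its variance, so the slope error is sqrt(pcov[0,0]) and the intercept error is sqrt(pcov[1,1]).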
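A minimal sketch of pulling the errors out of pcov, again with the question's data. One detail worth knowing: by default (absolute_sigma=False) curve_fit treats sigma only as relative weights and rescales pcov so that the reduced chi-square of the fit equals 1. If the yerr values are actual one-standard-deviation uncertainties (an assumption about this data, not something the post states), passing absolute_sigma=True keeps pcov unscaled. The fitted parameters are the same either way; only pcov changes.

```python
import numpy as np
from scipy.optimize import curve_fit

def func(x, a, b):
    # Straight line: a is the slope, b is the intercept
    return a * x + b

x = np.array([0, 1, 2, 3], dtype=float)
y = np.array([160, 317, 3302, 16002], dtype=float)
yerr = np.array([0.0791, 0.0562, 0.0174, 0.0079])

# Default: pcov is rescaled by the reduced chi-square of the fit
popt, pcov = curve_fit(func, x, y, sigma=yerr)
# Diagonal of pcov = variances; square root = one-sigma standard errors
perr = np.sqrt(np.diag(pcov))
slope_err, intercept_err = perr

# Assumption: yerr are absolute standard deviations, so do not rescale pcov
popt_abs, pcov_abs = curve_fit(func, x, y, sigma=yerr, absolute_sigma=True)
perr_abs = np.sqrt(np.diag(pcov_abs))

print("slope = %.4g +/- %.2g (default, scaled pcov)" % (popt[0], perr[0]))
print("slope = %.4g +/- %.2g (absolute_sigma=True)" % (popt_abs[0], perr_abs[0]))
```

With these data a straight line leaves residuals far larger than yerr, so the two error estimates can differ a lot; which one is appropriate depends on what yerr actually represents.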

From this I was able to get the slope of my line and its error. I hope this explanation can be helpful to someone else!!