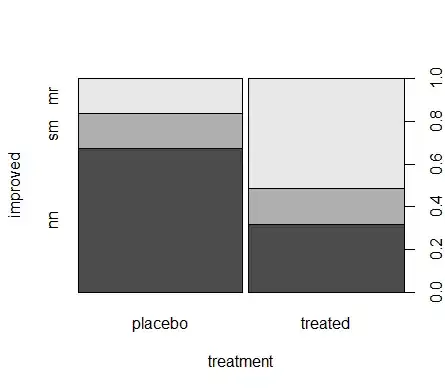

I realize there is an `allow_pickle=False` option in NumPy's `load` method, but I didn't feel comfortable unpickling or otherwise using data from the internet, so I used the small image. After removing the coordinate system and other plot decorations, I had the image shown above.

I define two helper functions. One rotates the image and is taken from another Stack Overflow thread (see the link in the code below). The other returns a mask that is one where a pixel matches a specified color and zero otherwise.

    import functools

    import cv2
    import matplotlib.pyplot as plt
    import numpy as np
    import sympy

    # `arr` is the RGBA image array loaded earlier (loading step not shown).
    # Color of the pixel at row 150, column 50, used as the boundary color.
    color = arr[150, 50]

    def similar_to_boundary_color(arr, color=tuple(color)):
        # True where all four RGBA channels match `color`.
        mask = functools.reduce(np.logical_and, [np.isclose(arr[:, :, i], color[i]) for i in range(4)])
        return mask

    # https://stackoverflow.com/a/9042907/2640045
    def rotate_image(image, angle):
        # Rotate about the image center by `angle` degrees, keeping the size.
        image_center = tuple(np.array(image.shape[1::-1]) / 2)
        rot_mat = cv2.getRotationMatrix2D(image_center, angle, 1.0)
        result = cv2.warpAffine(image, rot_mat, image.shape[1::-1], flags=cv2.INTER_LINEAR)
        return result
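
To illustrate what the color mask computes, here is a minimal sketch on a toy RGBA array. The name `color_mask` and the toy image are my own for illustration; it uses `np.all` over the channel axis, which should be equivalent to the `functools.reduce` version above for four-channel input.

```python
import numpy as np

def color_mask(arr, color):
    """Boolean mask that is True where every channel matches `color`."""
    # np.isclose broadcasts `color` (length 4) over the last axis of `arr`.
    return np.all(np.isclose(arr, color), axis=-1)

# Toy 2x3 RGBA image: white everywhere except one red pixel.
img = np.ones((2, 3, 4))
img[0, 1] = (1.0, 0.0, 0.0, 1.0)

mask = color_mask(img, (1.0, 1.0, 1.0, 1.0))
print(mask)  # False only at position [0, 1]
```

Negating the mask with `~mask` then selects the pixels that are not the given color, which is how it is used in the steps that follow.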

Next I calculate the angle to rotate by. I do that by finding the lowest non-boundary pixel in columns 50 and 300. I picked those columns since they are far enough from the boundary not to be affected by missing corners and the like.

    i, j = np.where(~similar_to_boundary_color(arr))
    slope = (max(i[j == 50]) - max(i[j == 300])) / (50 - 300)
    angle = np.arctan(slope)
    arr = rotate_image(arr, np.rad2deg(angle))
    plt.imshow(arr)
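
As a sanity check of the slope-to-angle step, here is a small sketch on a synthetic image whose lower edge is tilted with a known slope of 0.1. The image size, the fill color, and the variable names are assumptions made for this illustration only; no rotation is performed, so it needs only NumPy.

```python
import numpy as np

h, w = 200, 400
rows = np.arange(h)[:, None]
cols = np.arange(w)[None, :]

# A tilted band on a white background; its lower edge is row = 120 + 0.1 * col.
tilt = 0.1
inside = (rows >= 40 + tilt * cols) & (rows <= 120 + tilt * cols)
img = np.ones((h, w, 4))
img[inside] = (0.2, 0.4, 0.6, 1.0)

# Non-background pixels, then the lowest one in columns 50 and 300.
background = np.all(np.isclose(img, (1.0, 1.0, 1.0, 1.0)), axis=-1)
i, j = np.where(~background)
slope = (max(i[j == 50]) - max(i[j == 300])) / (50 - 300)
angle_deg = np.rad2deg(np.arctan(slope))

print(slope, angle_deg)  # slope is 0.1, roughly 5.71 degrees
```

The recovered slope matches the tilt that was built in, so passing `angle_deg` to a rotation routine would level the lower edge, up to the sign convention of that routine.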


One way to do the cropping is the following. Compute the midpoint in height and width. Then take a strip around each midline, say 20 pixels wide in one direction and reaching from the edge to the midpoint in the other. Within each strip, the largest (or smallest) index at which the pixel is white or background-colored is a reasonable place to cut.

    # Pixels that are either boundary-colored or fully transparent (0, 0, 0, 0).
    i, j = np.where(similar_to_boundary_color(arr) | similar_to_boundary_color(arr, (0, 0, 0, 0)))
    imid, jmid = np.array(arr.shape)[:2] / 2

    # Closest such pixel above/below and left/right of the center,
    # searched in 20-pixel-wide strips around the midlines.
    imin = max(i[(i < imid) & (jmid - 10 < j) & (j < jmid + 10)])
    imax = min(i[(i > imid) & (jmid - 10 < j) & (j < jmid + 10)])
    jmax = min(j[(j > jmid) & (imid - 10 < i) & (i < imid + 10)])
    jmin = max(j[(j < jmid) & (imid - 10 < i) & (i < imid + 10)])

    arr = arr[imin:imax, jmin:jmax]
    plt.imshow(arr)
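
The cropping logic can be checked on a toy image where the answer is known. This is only a sketch: the image size, the rectangle position, and the plain white background are assumptions for illustration, and it ignores the transparent corners that a rotation would introduce.

```python
import numpy as np

# White 120x200 RGBA image with a colored rectangle in rows 30..89, cols 40..149.
img = np.ones((120, 200, 4))
img[30:90, 40:150] = (0.2, 0.4, 0.6, 1.0)

# Indices of background pixels.
background = np.all(np.isclose(img, (1.0, 1.0, 1.0, 1.0)), axis=-1)
i, j = np.where(background)
imid, jmid = np.array(img.shape[:2]) / 2

# Nearest background pixel on each side of the center, within 20-pixel strips.
imin = max(i[(i < imid) & (jmid - 10 < j) & (j < jmid + 10)])
imax = min(i[(i > imid) & (jmid - 10 < j) & (j < jmid + 10)])
jmin = max(j[(j < jmid) & (imid - 10 < i) & (i < imid + 10)])
jmax = min(j[(j > jmid) & (imid - 10 < i) & (i < imid + 10)])

cropped = img[imin:imax, jmin:jmax]
print(imin, imax, jmin, jmax)  # 29 90 39 150
print(cropped.shape)           # (61, 111, 4)
```

Note that `imin` and `jmin` are themselves background indices, so the crop keeps one background row at the top and one background column on the left. Slicing with `imin + 1` and `jmin + 1` would exclude them if that matters.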

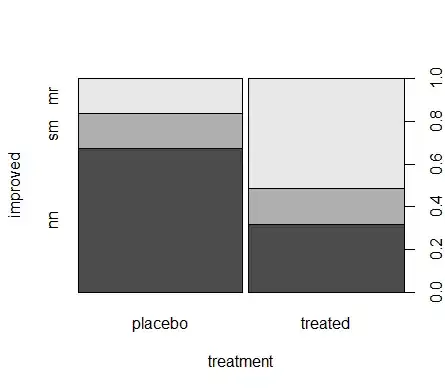

and the result is: