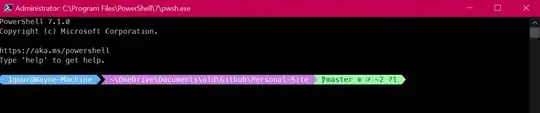

UPDATE: I have updated the function to use a much wider validity window so the token can't expire before it is used. I'm also including a screenshot of my account settings, which I updated to enable public access, along with the current console results. The result is the same: the image won't open on the page.

```javascript
async function sasKey(mediaLoc) {
  try {
    console.log('starting sasKey in bloboperations mediaLoc:', mediaLoc)
    console.log('process.env.BLOB_CONTAINER is: ', process.env.BLOB_CONTAINER)

    var storage = require("@azure/storage-blob")

    const accountname = process.env.BLOB_ACCT_NAME;
    console.log('accountname is: ', accountname)
    const key = process.env.BLOB_KEY;

    const cerds = new storage.StorageSharedKeyCredential(accountname, key);
    const blobServiceClient = new storage.BlobServiceClient(`https://${accountname}.blob.core.windows.net`, cerds);

    const containerName = process.env.BLOB_CONTAINER;
    const client = blobServiceClient.getContainerClient(containerName)
    const blobName = mediaLoc;
    const blobClient = client.getBlobClient(blobName);

    // Validity window: starts 20 minutes (1200000 ms) ago, expires 1 hour (3600000 ms) from now
    const checkDate = new Date();
    const startDate = new Date(checkDate.valueOf() - 1200000);
    const endDate = new Date(checkDate.valueOf() + 3600000);
    console.log('checkDate, startDate, endDate: ', checkDate, startDate, endDate)

    // The SAS is signed locally with the shared key; no request is sent to Azure here
    const blobSAS = storage.generateBlobSASQueryParameters({
      containerName,
      blobName,
      permissions: storage.BlobSASPermissions.parse("racwd"),
      startsOn: startDate,
      expiresOn: endDate
    }, cerds).toString();
    console.log('blobSAS is: ', blobSAS)

    // const sasUrl= blobClient.url+"?"+encodeURIComponent(blobSAS);

    // URL assembled by hand; the host here is only the account name (no ".blob.core.windows.net")
    const sasUrl = 'https://' + accountname + '/' + containerName + '/' + blobName + '?' + blobSAS
    console.log('sasURL is: ', sasUrl);

    return sasUrl
  } catch (error) {
    console.log(error);
  }
}
```

I am trying to get a valid SAS URI for a blob in my Azure Storage container from a Node.js function, using the `@azure/storage-blob` library. I get a URL back, but when I open it the browser says the request is not authorized. I've quadruple-checked that my account name and key are correct, and the same credentials work for uploading media to the container.
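For reference, a blob SAS URL has the shape `https://<account>.blob.core.windows.net/<container>/<blob>?<sas-token>`, where the token is the query string returned by `generateBlobSASQueryParameters(...).toString()`, whose values are already percent-encoded. Below is a minimal sketch of assembling that URL by hand with only Node's standard library. `buildBlobSasUrl`, the account name `myaccount`, and the token value are hypothetical placeholders, and the sketch assumes blob names use `/` only as a virtual-directory separator.

```javascript
/**
 * Hypothetical helper: builds a blob SAS URL from its parts.
 * sasToken is assumed to be the string returned by
 * generateBlobSASQueryParameters(...).toString(), so it is appended as-is.
 */
function buildBlobSasUrl(accountName, containerName, blobName, sasToken) {
  // The blob endpoint host includes ".blob.core.windows.net", not just the account name.
  const host = `https://${accountName}.blob.core.windows.net`;
  // Encode each path segment, but keep "/" so virtual-directory names still work.
  const path = [containerName, ...blobName.split("/")]
    .map(encodeURIComponent)
    .join("/");
  // Append the SAS query without re-encoding it: its "&" and "=" must stay literal.
  return `${host}/${path}?${sasToken}`;
}

const url = buildBlobSasUrl(
  "myaccount", // hypothetical account name
  "prod",
  "5x6gyfbc5eo31fdf38f7fdc51ea1632857020560.png",
  "sv=2020-06-12&sr=b&sp=r&sig=abc%3D" // placeholder token, not a real signature
);
console.log(url);
// https://myaccount.blob.core.windows.net/prod/5x6gyfbc5eo31fdf38f7fdc51ea1632857020560.png?sv=2020-06-12&sr=b&sp=r&sig=abc%3D
```

With the SDK, `blobClient.url` should already hold the host-and-path part, so appending `"?" + blobSAS` without any further encoding is expected to give an equivalent URL.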

I'm not sure how to even troubleshoot this, since no error messages come back to the Node API. The same code returns a URI that works for another (dev) container. However, that container currently allows public access, so it makes sense that the blob is reachable there regardless of the SAS. Any suggestions on how I can troubleshoot this?
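Because `generateBlobSASQueryParameters` signs the token locally with the shared key, nothing is sent to Azure until the URL is actually requested, which is why the Node side sees no error. One way to narrow things down is to check the URL's shape before using it. This is a minimal sketch assuming a blob-level SAS like the one in the question; `inspectSasUrl` is a hypothetical helper, and an empty result only means the URL is well-formed, not that the signature is valid (only Azure can check that).

```javascript
// Query parameters a blob service SAS produced by the SDK normally carries.
const EXPECTED_SAS_PARAMS = ["sv", "se", "sr", "sp", "sig"];

/**
 * Hypothetical helper: returns human-readable problems found in a SAS URL.
 * It checks the host, the presence of the SAS parameters, and the time window.
 */
function inspectSasUrl(sasUrl, now = new Date()) {
  const problems = [];
  const url = new URL(sasUrl);

  // The blob endpoint should be <account>.blob.core.windows.net.
  if (!url.hostname.endsWith(".blob.core.windows.net")) {
    problems.push(`unexpected host: ${url.hostname}`);
  }

  // Each SAS field must arrive as its own query parameter.
  for (const name of EXPECTED_SAS_PARAMS) {
    if (!url.searchParams.has(name)) problems.push(`missing "${name}" parameter`);
  }

  // Compare the signed start/expiry times with the given clock.
  const se = url.searchParams.get("se");
  if (se && Date.parse(se) <= now.getTime()) {
    problems.push(`token expired at ${se}`);
  }
  const st = url.searchParams.get("st");
  if (st && Date.parse(st) > now.getTime()) {
    problems.push(`token not valid until ${st}`);
  }
  return problems;
}

const checkedAt = new Date("2021-09-28T19:23:41Z");

// A well-formed URL (hypothetical account and signature).
console.log(inspectSasUrl(
  "https://myaccount.blob.core.windows.net/prod/a.png" +
    "?sv=2020-06-12&se=2021-09-29T19%3A23%3A41Z&sr=b&sp=r&sig=abc",
  checkedAt
)); // []

// The same parameters with "&" and "=" percent-encoded.
console.log(inspectSasUrl(
  "https://myaccount.blob.core.windows.net/prod/a.png" +
    "?sv%3D2020-06-12%26se%3D2021-09-29T19%253A23%253A41Z%26sr%3Db%26sp%3Dr%26sig%3Dabc",
  checkedAt
).length); // 5
```

Applied to a URL built like the update's `sasUrl` line, which uses only the account name as the host, the first check would report an unexpected host.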

The error logged in the browser console when accessing the generated blob URL:

```text
5x6gyfbc5eo31fdf38f7fdc51ea1632857020560.png:1 GET https://**********.blob.core.windows.net/prod/5x6gyfbc5eo31fdf38f7fdc51ea1632857020560.png?sv%3D2020-06-12%26st%3D2021-09-28T19%253A23%253A41Z%26se%3D2021-09-28T19%253A25%253A07Z%26sr%3Db%26sp%3Dracwd%26sig%3Du6Naiikn%252B825koPikqRGmiOoKMJZ5L3mfcR%252FTCT3Uyk%253D 409 (Public access is not permitted on this storage account.)
```
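The logged URL can be decoded to see what was actually sent. In its query string, `%3D` and `%26` stand for `=` and `&`, so the SAS fields arrive as one encoded string rather than as separate parameters. Without recognizable SAS parameters the request presumably looks anonymous to the storage service, which would be consistent with the "Public access is not permitted" response. Here is a minimal sketch using Node's built-in `URLSearchParams` on the query string copied from the log:

```javascript
// Query string copied from the logged request URL (everything after "?").
const loggedQuery =
  "sv%3D2020-06-12%26st%3D2021-09-28T19%253A23%253A41Z%26se%3D2021-09-28T19%253A25%253A07Z" +
  "%26sr%3Db%26sp%3Dracwd%26sig%3Du6Naiikn%252B825koPikqRGmiOoKMJZ5L3mfcR%252FTCT3Uyk%253D";

// Parsed as sent, the encoded "&" and "=" separate nothing,
// so the whole string becomes a single parameter name and there is no "sig" key.
const asSent = new URLSearchParams(loggedQuery);
console.log([...asSent.keys()].length); // 1
console.log(asSent.has("sig")); // false

// Decoding once recovers the query string that toString() originally produced.
const recovered = new URLSearchParams(decodeURIComponent(loggedQuery));
console.log(recovered.get("sp")); // racwd

// Length of the signed validity window: se - st, in seconds.
const windowSeconds =
  (Date.parse(recovered.get("se")) - Date.parse(recovered.get("st"))) / 1000;
console.log(windowSeconds); // 86
```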

The original code that generates the URI:

```javascript
async function sasKey(mediaLoc) {
  try {
    var storage = require("@azure/storage-blob")

    const accountname = process.env.BLOB_ACCT_NAME;
    const key = process.env.BLOB_KEY;

    const cerds = new storage.StorageSharedKeyCredential(accountname, key);
    const blobServiceClient = new storage.BlobServiceClient(`https://${accountname}.blob.core.windows.net`, cerds);

    const containerName = process.env.BLOB_CONTAINER;
    const client = blobServiceClient.getContainerClient(containerName)
    const blobName = mediaLoc;
    const blobClient = client.getBlobClient(blobName);

    const blobSAS = storage.generateBlobSASQueryParameters({
      containerName,
      blobName,
      permissions: storage.BlobSASPermissions.parse("racwd"),
      startsOn: new Date(),
      // 86400 is added to a millisecond timestamp, so this is about 86 seconds from now
      expiresOn: new Date(new Date().valueOf() + 86400)
    }, cerds).toString();

    // encodeURIComponent also escapes the "&" and "=" separators inside the SAS query string
    const sasUrl = blobClient.url + "?" + encodeURIComponent(blobSAS);

    console.log('blobOperations.js returns blobSAS URL as: ', sasUrl);
    console.log('blobSAS is: ', blobSAS)

    return sasUrl
  } catch (error) {
    console.log(error);
  }
}
```
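In this version, `new Date().valueOf()` is in milliseconds, so adding `86400` moves the expiry about 86 seconds ahead rather than one day, which matches the `st`/`se` values in the logged URL. Below is a minimal sketch of computing the window with named units. `sasWindow`, its parameters, and the 15-minute clock-skew allowance are assumptions for illustration, not part of the SDK; the resulting dates would be passed as `startsOn`/`expiresOn`.

```javascript
// Date#getTime() and the Date constructor both work in milliseconds,
// so named constants make the unit conversions explicit.
const MS_PER_MINUTE = 60 * 1000;
const MS_PER_DAY = 24 * 60 * MS_PER_MINUTE;

/**
 * Hypothetical helper: computes startsOn/expiresOn for a SAS token.
 * startsOn is back-dated by skewMinutes to tolerate clock differences
 * between this server and Azure Storage; expiresOn is lifetimeMs after now.
 */
function sasWindow(now = new Date(), skewMinutes = 15, lifetimeMs = MS_PER_DAY) {
  return {
    startsOn: new Date(now.getTime() - skewMinutes * MS_PER_MINUTE),
    expiresOn: new Date(now.getTime() + lifetimeMs),
  };
}

const now = new Date("2021-09-28T19:23:41Z");

// What the original expression does: 86400 is treated as milliseconds.
console.log(new Date(now.valueOf() + 86400).toISOString()); // 2021-09-28T19:25:07.400Z

// A one-day window with a 15-minute skew allowance.
const { startsOn, expiresOn } = sasWindow(now);
console.log(startsOn.toISOString()); // 2021-09-28T19:08:41.000Z
console.log(expiresOn.toISOString()); // 2021-09-29T19:23:41.000Z
```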