I'm looking for an efficient way to perform the following task in Python, using multithreading to reduce the execution time as much as possible.

The system has an array of dictionaries used for storing types and versions and a separate list of codes.

    [
      {
        "id": 166,
        "name": "CCV6-TARGET",
        "versions": {
          "installed": {
            "id": 983,
            "version": "8.0",
            "results": []
          },
          "installing": {
            "id": 1369,
            "version": "10.0",
            "results": []
          }
        }
      },
      {
        "id": 165,
        "name": "CCV7-TARGET",
        "versions": {
          "installed": {
            "id": 984,
            "version": "7.0",
            "results": []
          },
          "installing": {
            "id": 1370,
            "version": "9.0",
            "results": []
          }
        }
      }
    ]

Codes are stored as a matrix instead:

    codes = [
        ["a-1", "a-2", "a-3", "a-N"],
        ["b-1", "b-2", "b-3", "b-N"],
        ["c-1", "c-2", "c-3", "c-N"],
        ["d-1", "d-2", "d-3", "d-N"],
    ]

During a request, each row is reduced into a single combined string and passed as a parameter called codes.

I need to loop through each type and version, and for each version, send N HTTP requests (one per row) to an API server to find out which code is in the current version.

For example, we send four requests for the first version, another four for the second version, and so on for each version:

    https://api.server?version_id=983&codes=a-1 or a-2 or a-3 or a-N
    https://api.server?version_id=983&codes=b-1 or b-2 or b-3 or b-N
    https://api.server?version_id=983&codes=c-1 or c-2 or c-3 or c-N
    https://api.server?version_id=983&codes=d-1 or d-2 or d-3 or d-N
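
To make the request format concrete, here is a minimal sketch of how the URLs could be built. The helper name `build_urls` is hypothetical, and it assumes the server accepts a URL-encoded query string, so the spaces in `codes` are sent as `+`:

```python
from urllib.parse import urlencode

API_URL = "https://api.server"  # base URL taken from the examples above


def build_urls(version_id, codes):
    """Return one request URL per row of the codes matrix (hypothetical helper)."""
    urls = []
    for row in codes:
        # Each row is reduced to a single "x or y or z" string.
        query = urlencode({"version_id": version_id, "codes": " or ".join(row)})
        urls.append(f"{API_URL}?{query}")
    return urls
```

Calling `build_urls(983, codes)` would give four URLs, one per row, for the version with id 983.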

The results of each query should be saved into the corresponding results array of each dedicated version.
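
As a baseline for what I want to end up with, here is a rough sketch that uses a single flat thread pool from `concurrent.futures`. The names `query_api` and `fill_results` are hypothetical, and `query_api` is only a placeholder that echoes its input so the sketch runs offline; the real version would perform the HTTP request:

```python
from concurrent.futures import ThreadPoolExecutor


def query_api(version_id, row):
    """Hypothetical placeholder for the real HTTP call; it only echoes its input."""
    return {"version_id": version_id, "codes": " or ".join(row)}


def fill_results(types, codes, fetch=query_api, max_workers=8):
    """Run one request per (version, row) pair and store each response
    in the "results" list of the version it belongs to."""
    # Collect every version dict across all types.
    versions = [v for t in types for v in t["versions"].values()]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Submit all requests up front so they run concurrently.
        futures = [
            (version, pool.submit(fetch, version["id"], row))
            for version in versions
            for row in codes
        ]
        # Results are appended from the main thread, in submission order,
        # so no locking is needed around the shared dictionaries.
        for version, future in futures:
            version["results"].append(future.result())
    return types
```

The value of `max_workers` is an arbitrary assumption; a suitable number would depend on how many concurrent requests the API server tolerates.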

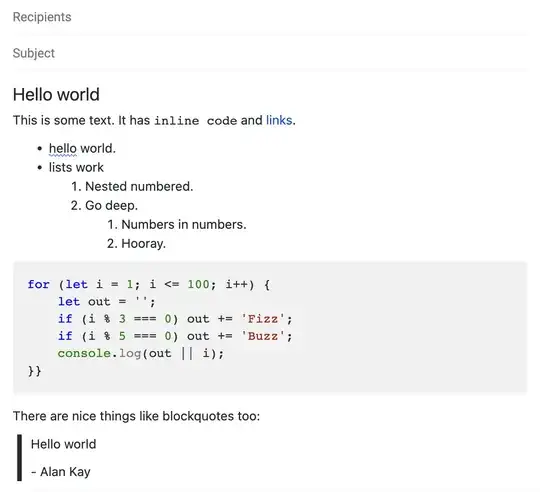

I was thinking of starting a new process per type and, for each version, spawning one thread which, in turn, starts N sub-threads to handle the requests. Does this make sense?

Is this over-engineered? Any help will be appreciated.

Thanks