I've been trying to build an endpoint using the Azure ML designer. The basic idea is to download a trained model from Blob Storage in my Azure subscription and use it to make predictions on new data. The problem is that whenever I download the pickle file in the script and try to load it, I get an error saying that the path or directory doesn't exist. Is there any way to solve this, or is it a limitation of the compute cluster attached to the pipeline?

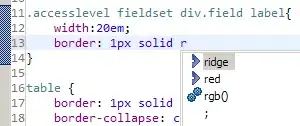

Here are some screenshots of the code snippet I'm trying to run and the error it returns.
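
In case the screenshots don't render, the pattern in question can be sketched roughly as follows. This is a simplified illustration and not the exact code from the screenshot: the function names, the `model_cache` folder, and the `model.pkl` filename are hypothetical placeholders, and the download helper assumes the `azure-storage-blob` package is available on the compute target.

```python
import os
import pickle
import tempfile


def download_blob_bytes(conn_str, container, blob_name):
    """Fetch a blob's raw bytes. All three arguments are placeholders."""
    # Imported lazily so the local save/load logic below can be run
    # on a machine that does not have the Azure SDK installed.
    from azure.storage.blob import BlobClient

    client = BlobClient.from_connection_string(
        conn_str, container_name=container, blob_name=blob_name
    )
    return client.download_blob().readall()


def save_and_load_pickle(payload, filename="model.pkl"):
    """Write the bytes to an absolute path under the temp dir, then unpickle."""
    # Hypothetical cache folder; created explicitly so the open() call
    # cannot fail because of a missing directory.
    target_dir = os.path.join(tempfile.gettempdir(), "model_cache")
    os.makedirs(target_dir, exist_ok=True)

    local_path = os.path.join(target_dir, filename)
    with open(local_path, "wb") as fh:
        fh.write(payload)

    with open(local_path, "rb") as fh:
        model = pickle.load(fh)
    return model, local_path
```

Writing to an absolute path and creating the directory first should at least rule out a relative path or a missing folder as the cause, though I can't say for sure that this is what triggers the error on the cluster.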