Summary: Code and configuration that produce tables visible in NoSQL Workbench with DynamoDB Local don't behave the same way with LocalStack. The connection works, but the tables no longer show up in NoSQL Workbench (they still show up when using the aws-cli).

I created a table in DynamoDB Local (running in Docker), and it showed up in NoSQL Workbench. I then wrote code to seed that database; it all worked, and the data showed up in NoSQL Workbench too.

I then switched to LocalStack (so I can interact with other AWS services locally). I was able to create a table with Terraform and seed it with my code (using the configuration given here). Using the aws-cli, I can see the table, etc.

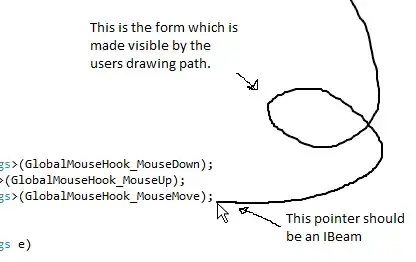
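
For reference, the same check can be sketched in Python with boto3. This is a minimal sketch, not my actual code: the endpoint `http://localhost:4566` (LocalStack's default edge port), the region `us-east-1`, and the dummy credentials are assumptions, and the helper names `client_kwargs` and `list_table_names` are hypothetical.

```python
"""List DynamoDB tables on a local endpoint (sketch; all values are assumptions)."""

# Assumed values: LocalStack's default edge port and a placeholder region.
ASSUMED_ENDPOINT = "http://localhost:4566"
ASSUMED_REGION = "us-east-1"


def client_kwargs(endpoint_url=ASSUMED_ENDPOINT, region_name=ASSUMED_REGION):
    """Build the keyword arguments for boto3.client("dynamodb", ...)."""
    return {
        "endpoint_url": endpoint_url,
        "region_name": region_name,
        # Dummy credentials; assumed to be acceptable to the local emulator.
        "aws_access_key_id": "test",
        "aws_secret_access_key": "test",
    }


def list_table_names(**overrides):
    """Return every table name visible with the given connection settings."""
    import boto3  # imported lazily so client_kwargs() works without boto3

    client = boto3.client("dynamodb", **client_kwargs(**overrides))
    names = []
    # list_tables is paginated, so let the paginator walk all pages.
    for page in client.get_paginator("list_tables").paginate():
        names.extend(page["TableNames"])
    return names
```

Calling `list_table_names()` should return the same table names as the aws-cli output above, provided these settings match the ones the CLI used.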

But inside NoSQL Workbench, I can't see the table I created and seeded when connecting as shown below. There are no connection errors; the table just isn't there. This doesn't seem related to the bugginess issue described here, since restarting the application didn't help. I haven't changed any AWS account settings such as region or keys.