I am trying to do 3D reconstruction using SfM (Structure from Motion). I am pretty new to computer vision and doing this as a hobby, so if you use acronyms, please also tell me what they stand for so I can look them up.

Learning-wise, I have been following these resources:

- https://www.youtube.com/watch?v=SyB7Wg1e62A&list=PLgnQpQtFTOGRYjqjdZxTEQPZuFHQa7O7Y&ab_channel=CyrillStachniss

- https://imkaywu.github.io/tutorials/sfm/#triangulation

- Plus the links in the quick question below.

My end goal is to use this on a person's face to create a 3D face reconstruction. If people have advice on this topic specifically, please let me know as well.

I follow these steps:

- Input: a video taken with a single camera, read with OpenCV.

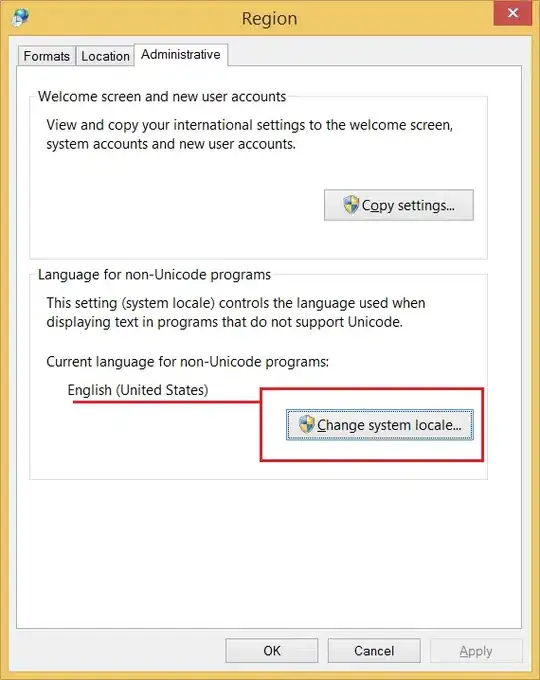

- Find the intrinsic parameters and distortion coefficients of the camera using Zhang's method.

- Use SIFT to find features in frame 1 and frame 2.

- Feature matching is done using `cv2.FlannBasedMatcher()`.

- Compute the essential matrix using `cv2.findEssentialMat()`.

- The projection matrix of frame 1 is set to `numpy.hstack((numpy.eye(3), numpy.zeros((3, 1))))`.

- Rotation and translation are obtained using `cv2.recoverPose()`.

- Using the rotation and translation, I get the projection matrix of frame 2: `curr_proj_matrix = cv2.hconcat([curr_rotation_matrix, curr_translation_matrix])`.

- I use `cv2.undistortPoints()` on the feature points of frames 1 and 2, using the information from step 2.

- Lastly, I do the triangulation: `points_4d = triangulation.triangulate(prev_projection_matrix, curr_proj_matrix, prev_pts_u, curr_pts_u)`.

- Then I reassign the prev values to the curr values and continue through the video.

- I use matplotlib to display the scatter plot.

Quick question:

- Why do some articles use $E = (K^{-1})^T F K$ and others $E = K^T F K$?

First way: what do I do with the fundamental matrix?

Second way: https://harish-vnkt.github.io/blog/sfm/
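A short derivation of how F and E relate, assuming the same calibrated camera (intrinsic matrix $K$) for both frames, pixel points $x_1, x_2$ written as homogeneous 3-vectors, and normalized points $\hat{x} = K^{-1} x$:

```latex
\begin{aligned}
\hat{x}_2^{T} E \,\hat{x}_1 &= 0 && \text{(epipolar constraint, normalized coordinates)}\\
(K^{-1}x_2)^{T} E \,(K^{-1}x_1) &= x_2^{T} K^{-T} E K^{-1} x_1 = 0 \\
x_2^{T} F x_1 &= 0 && \text{(epipolar constraint, pixel coordinates)}\\
\Rightarrow\quad F &\simeq K^{-T} E K^{-1} \quad\Longleftrightarrow\quad E \simeq K^{T} F K && (\simeq\ \text{means equal up to scale})
\end{aligned}
```

So with one camera the relation is $E = K^T F K$ (with two different cameras it becomes $E = K_2^T F K_1$). An expression with $(K^{-1})^T$ in front of $F$ is most likely a mix-up with the inverse relation $F = K^{-T} E K^{-1}$, where the inverse-transpose does appear.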

Issue:

As you can see, the scatter plot looks somewhat warped. I am unsure why, whether I am missing a step or doing something wrong, so I am looking for advice. Also, the Z values are all negative.
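One step worth checking in the loop described above: `cv2.recoverPose` returns the pose of the current frame relative to the frame it was matched against ($x_{curr} = R\,x_{prev} + t$), not relative to frame 1. If that `[R|t]` is used directly as `curr_proj_matrix` and then becomes `prev_projection_matrix` for the next pair, the two projection matrices used in a triangulation are no longer expressed in the same coordinate frame. A minimal sketch of chaining the relative poses so every projection matrix is expressed in frame 1's coordinates; `compose_pose` is a hypothetical name.

```python
import numpy as np


def compose_pose(R_prev, t_prev, R_rel, t_rel):
    """Hypothetical helper: chain a pair-wise pose onto a global one.

    R_prev, t_prev: world (frame 1) -> previous camera, x_prev = R_prev @ X + t_prev.
    R_rel, t_rel:   previous camera -> current camera, as from cv2.recoverPose.
    Returns R, t with x_curr = R @ X + t.
    """
    R = R_rel @ R_prev
    t = R_rel @ np.reshape(t_prev, (3, 1)) + np.reshape(t_rel, (3, 1))
    return R, t


# Usage in the frame loop (sketch): frame 1 defines the world frame.
# R_w, t_w = np.eye(3), np.zeros((3, 1))
# ... after cv2.recoverPose gives R_rel, t_rel for the next pair:
# R_w, t_w = compose_pose(R_w, t_w, R_rel, t_rel)
# curr_proj_matrix = np.hstack((R_w, t_w))
```

This alone does not fix scale: each `t` from `cv2.recoverPose` has unit length, so every pair has its own arbitrary scale. Incremental SfM pipelines usually register each new frame against already triangulated points (for example with `cv2.solvePnPRansac`) instead of chaining essential matrices.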

One of my guesses was that, since the video is 60 FPS, consecutive frames may not have enough rotation and translation between them for triangulation, even though I am moving the camera relatively quickly. However, skipping the frames in between did not make much difference.
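The baseline hypothesis can be checked directly by measuring the triangulation (parallax) angle: the angle at each 3D point between the rays from the two camera centres. A minimal sketch, assuming `R`, `t` follow the `cv2.recoverPose` convention ($x_2 = R\,x_1 + t$, so the second camera centre is $-R^T t$) and `X` is an (N, 3) array in frame-1 coordinates; `median_parallax_deg` is a hypothetical name. The angle does not depend on the unknown scale, since `X` and `t` come from the same reconstruction. A common heuristic is to treat points whose angle is only a degree or two as having poorly constrained depth.

```python
import numpy as np


def median_parallax_deg(X, R, t):
    """Hypothetical helper: median angle (degrees) between the two viewing rays.

    X: (N, 3) points in frame-1 coordinates.
    R, t: frame 1 -> frame 2 pose, x2 = R @ x1 + t (cv2.recoverPose convention).
    """
    C1 = np.zeros(3)                               # camera 1 centre is the origin
    C2 = (-R.T @ np.reshape(t, (3, 1))).ravel()    # camera 2 centre in frame-1 coordinates
    r1 = X - C1                                    # ray from each centre to each point
    r2 = X - C2
    cos = np.sum(r1 * r2, axis=1) / (
        np.linalg.norm(r1, axis=1) * np.linalg.norm(r2, axis=1))
    angles = np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))
    return float(np.median(angles))
```

For example, with `R = np.eye(3)` and `t = np.array([-1.0, 0.0, 0.0])` (a 1-unit sideways move), a point at `(0.5, 0, 0.5)` is seen at 90 degrees, while a point at `(0.5, 0, 1000)` is seen at well under 0.1 degrees.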

Please let me know if you would like me to provide some of the code.