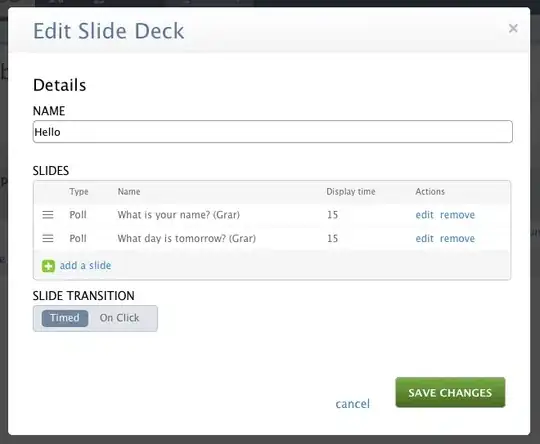

Update #2: I have checked the health status of my instances within the Auto Scaling group, and there the instances are marked as "healthy" (screenshot added).

I followed this troubleshooting tutorial from AWS, without success:

> Solution: Use the ELB health check for your Auto Scaling group. When you use the ELB health check, Auto Scaling determines the health status of your instances by checking the results of both the instance status check and the ELB health check. For more information, see Adding health checks to your Auto Scaling group in the Amazon EC2 Auto Scaling User Guide.
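The quoted rule can be made concrete with a minimal Python sketch. The function and argument names are hypothetical (this is not an AWS API); it only encodes the documented behavior that the default EC2 health check does not consult the load balancer, while the ELB health check requires both checks to pass:

```python
# Hypothetical helper illustrating the quoted rule; this is not an AWS API.


def asg_sees_healthy(ec2_status_ok: bool, elb_state: str, health_check_type: str = "EC2") -> bool:
    """Return True if the Auto Scaling group would treat the instance as healthy.

    ec2_status_ok     -- result of the EC2 instance status check
    elb_state         -- load balancer state, "InService" or "OutOfService"
    health_check_type -- "EC2" (the Auto Scaling default) or "ELB"
    """
    if not ec2_status_ok:
        return False  # a failed EC2 status check is unhealthy under either type
    if health_check_type == "EC2":
        return True  # the load balancer's verdict is not consulted
    if health_check_type == "ELB":
        return elb_state == "InService"  # both checks must pass
    raise ValueError(f"unknown health check type: {health_check_type!r}")


if __name__ == "__main__":
    print(asg_sees_healthy(True, "OutOfService", "EC2"))  # True
    print(asg_sees_healthy(True, "OutOfService", "ELB"))  # False
```

Under these assumptions, "healthy" in the Auto Scaling group and "OutOfService" at the load balancer are not contradictory. Switching to the ELB check changes how Auto Scaling reacts to a failing instance (it would replace it); it does not by itself make the load balancer's health check pass.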

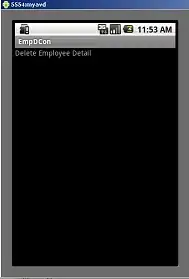

Update #1: I found out that the two node instances are "OutOfService" (as seen in the screenshots below) because they are failing the load balancer's health check. Could this be the problem? And how do I solve it?
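The "OutOfService" status is driven by runs of consecutive health check results. A minimal Python sketch, assuming Classic Load Balancer semantics (the InService/OutOfService terminology) and made-up threshold values; the real thresholds, interval and target are in the load balancer's health check settings:

```python
# Sketch of the threshold logic a Classic Load Balancer uses to move an
# instance between "InService" and "OutOfService". Threshold values are
# assumptions, not the values configured on any real load balancer.


class InstanceHealth:
    def __init__(self, healthy_threshold: int = 2, unhealthy_threshold: int = 2):
        self.healthy_threshold = healthy_threshold      # consecutive passes to become InService
        self.unhealthy_threshold = unhealthy_threshold  # consecutive failures to become OutOfService
        self.state = "OutOfService"  # assumed starting state for a newly registered instance
        self.streak = 0              # consecutive results that contradict the current state

    def record(self, passed: bool) -> str:
        """Feed one health check result and return the (possibly new) state."""
        agrees = (self.state == "InService") == passed
        if agrees:
            self.streak = 0  # any agreeing result breaks the run
            return self.state
        self.streak += 1
        needed = self.healthy_threshold if passed else self.unhealthy_threshold
        if self.streak >= needed:
            self.state = "InService" if passed else "OutOfService"
            self.streak = 0
        return self.state


if __name__ == "__main__":
    node = InstanceHealth()
    print([node.record(r) for r in [True, True, False, False]])
    # ['OutOfService', 'InService', 'InService', 'OutOfService']
```

In this model, a node whose health check target never answers fails every probe and never leaves "OutOfService", which is what the screenshots show for both nodes.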

Thanks!

I am on the home stretch of hosting my Shiny app on AWS.

To make the hosting scalable, I decided to use AWS, more precisely an EKS cluster.

For the creation I followed this tutorial: https://github.com/z0ph/ShinyProxyOnEKS

So far everything worked, except for the last step: "When accessing the load balancer address and port, the login interface of ShinyProxy can be displayed normally."

As soon as I call the load balancer with the corresponding port, the browser shows the following error: ERR_EMPTY_RESPONSE.
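ERR_EMPTY_RESPONSE means the TCP connection was accepted but closed before any HTTP response arrived. To tell that apart from a refused or timed-out connection without a browser, here is a minimal Python probe. The function name is hypothetical, and the host and port are whatever the load balancer listener uses:

```python
import socket


def probe(host: str, port: int, path: str = "/", timeout: float = 5.0) -> str:
    """Send one plain HTTP GET and classify the outcome.

    Returns "refused", "reset", "timeout", "empty" (connection closed without
    any bytes, which a browser reports as ERR_EMPTY_RESPONSE), "error: ..."
    for other socket errors such as DNS failures, or the HTTP status line.
    """
    request = f"GET {path} HTTP/1.1\r\nHost: {host}\r\nConnection: close\r\n\r\n"
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall(request.encode("ascii"))
            first = sock.recv(4096)  # the first chunk is enough to see the status line
    except ConnectionRefusedError:
        return "refused"  # nothing listening on that port
    except ConnectionResetError:
        return "reset"  # peer aborted the connection
    except socket.timeout:
        return "timeout"  # e.g. packets silently dropped by a security group
    except OSError as exc:
        return f"error: {exc.__class__.__name__}"  # DNS failure, broken pipe, ...
    if not first:
        return "empty"
    return first.split(b"\r\n", 1)[0].decode("latin-1")
```

Calling it as `probe("<load-balancer-dns-name>", <port>)` and, for comparison, against a node's IP and the Service's NodePort would show whether the empty response already happens on the node or only behind the load balancer.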

I have to admit that I am a bit lost and don't know where to start looking for the error.

I was already able to host the Shiny sample application in the cluster (step 3.2 in the tutorial), so the problem must lie somewhere in ShinyProxy, the Kubernetes proxy, or the load balancer itself.
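The elimination above amounts to checking each hop from the inside out and stopping at the first one that fails, since failures further out are likely consequences of it. A minimal Python sketch; the hop names and pass/fail values are made up for illustration, and how each hop is actually tested is left open:

```python
# Hypothetical helper: given pass/fail results for each hop, ordered from the
# innermost component outwards, report the first hop that breaks.

from typing import List, Optional, Tuple


def first_broken_hop(results: List[Tuple[str, bool]]) -> Optional[str]:
    """Return the name of the innermost failing hop, or None if all pass."""
    for hop, ok in results:
        if not ok:
            return hop
    return None


if __name__ == "__main__":
    observed = [
        ("sample Shiny app", True),  # step 3.2 of the tutorial worked
        ("ShinyProxy pod", False),   # hypothetical result
        ("load balancer", False),    # ERR_EMPTY_RESPONSE
    ]
    print(first_broken_hop(observed))  # ShinyProxy pod
```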

You can find the following information below:

- Overview EC2 Instances (Workspace + Cluster Nodes)

- Overview Loadbalancer

- Overview Repositories

- Dockerfile ShinyProxy

- Dockerfile Kubernetes Proxy

- Dockerfile ShinyApp (sample application)

I have painted over some of the information to be on the safe side; if anything important is hidden, please let me know.

If you need anything else I haven't thought of, just give me a hint!

And please excuse the confusing question and formatting; I just don't know how to word or present it better. Sorry!

Many thanks and best regards

Overview EC2 Instances (Workspace + Cluster Nodes)

Overview Loadbalancer

Overview Repositories

Dockerfile ShinyProxy (source: https://github.com/openanalytics/shinyproxy-config-examples/tree/master/03-containerized-kubernetes)

Dockerfile Kubernetes Proxy (forked from https://github.com/openanalytics/shinyproxy-config-examples/tree/master/03-containerized-kubernetes)

Dockerfile ShinyApp (sample application)

The following files are taken 1:1 from the tutorial:

- application.yaml (shinyproxy)

- sp-authorization.yaml

- sp-deployment.yaml

- sp-service.yaml
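Because application.yaml and sp-service.yaml are unchanged from the tutorial, one quick sanity check is whether the port ShinyProxy listens on (`proxy.port`, 8080 by default) matches the `targetPort` the Service forwards to; a mismatch is one way to get connections that reach the node but find nothing listening behind it. A minimal Python sketch, assuming both files have already been parsed into dicts; the function name and example values are hypothetical:

```python
# Sketch of a port consistency check between ShinyProxy's application.yaml and
# the Kubernetes Service in sp-service.yaml, both given as parsed dicts.


def port_mismatches(app_config: dict, service: dict) -> list:
    """List Service ports whose targetPort differs from ShinyProxy's listen port."""
    listen = app_config.get("proxy", {}).get("port", 8080)  # ShinyProxy's default port
    problems = []
    for entry in service.get("spec", {}).get("ports", []):
        # Kubernetes defaults targetPort to port when it is omitted.
        # Named target ports (strings) are not resolved here and would be
        # reported as a mismatch.
        target = entry.get("targetPort", entry.get("port"))
        if target != listen:
            problems.append(
                f"Service port {entry.get('port')} forwards to {target}, "
                f"but ShinyProxy listens on {listen}"
            )
    return problems


if __name__ == "__main__":
    app = {"proxy": {"port": 8080}}
    svc = {"spec": {"type": "LoadBalancer", "ports": [{"port": 80, "targetPort": 8080}]}}
    print(port_mismatches(app, svc))  # []
```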

Health status in the Auto Scaling group