After mounting the storage account, run this command to check whether you have data access permissions on the mount point you created:

```python
dbutils.fs.ls("/mnt/<mount-point>")
```

- If you have data access, you will see the files inside the storage account.

- If you don't have data access, you will get a 403 error: "This request is not authorized to perform this operation using this permission."
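If you want this check to give a clear yes/no answer in a notebook cell, here is a minimal sketch. It assumes the 403 surfaces as an exception whose message contains "403" or "not authorized" (a heuristic based on the error text above, not a documented contract). `check_mount_access` is a hypothetical helper; you pass `dbutils.fs.ls` in as the listing function, which also lets you try the logic outside Databricks with a fake.

```python
from typing import Any, Callable, List


def check_mount_access(ls: Callable[[str], List[Any]], mount_point: str) -> str:
    """Hypothetical helper: list the mount point and classify the outcome.

    Returns "ok" if the listing succeeds, "forbidden" if the error looks like
    a 403 authorization failure, and re-raises any other error.
    """
    try:
        entries = ls(mount_point)
    except Exception as exc:  # storage errors reach Python as generic exceptions
        message = str(exc)
        # Heuristic match on the 403 / "not authorized" text of the storage error.
        if "403" in message or "not authorized" in message:
            return "forbidden"
        # Anything else (e.g. a missing mount) is not a permissions problem.
        raise
    for entry in entries:
        # FileInfo objects from dbutils.fs.ls expose .path; fall back to the raw value.
        print(getattr(entry, "path", entry))
    return "ok"
```

In a Databricks notebook you would call it as `check_mount_access(dbutils.fs.ls, "/mnt/<mount-point>")`.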

If you can mount the storage but cannot access it, check whether the ADLS Gen2 account has the necessary roles assigned.
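To check the existing role assignments from the command line instead of the portal, the Azure CLI command `az role assignment list` can be scoped to the storage account. The sketch below only builds the command; the helper names and the `<...>` placeholders are hypothetical, and it assumes the Azure CLI is installed and you are signed in with `az login`.

```python
import shlex
from typing import List


def storage_account_scope(subscription_id: str, resource_group: str, account: str) -> str:
    # ARM resource ID of the storage account, used as the role-assignment scope.
    return (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Storage/storageAccounts/{account}"
    )


def list_role_assignments_cmd(app_id: str, scope: str) -> List[str]:
    # Lists the app's assignments at this scope, including ones inherited
    # from the resource group or subscription (--include-inherited).
    return [
        "az", "role", "assignment", "list",
        "--assignee", app_id,
        "--scope", scope,
        "--include-inherited",
        "--output", "table",
    ]


if __name__ == "__main__":
    scope = storage_account_scope("<subscription-id>", "<resource-group>", "<storage-account>")
    # Print a shell-ready version of the command for copy/paste.
    print(shlex.join(list_role_assignments_cmd("<application-client-id>", scope)))
```

Look for "Storage Blob Data Contributor" (or another Storage Blob Data role) in the output; a generic role such as Contributor on its own does not grant data access.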

I was able to reproduce the same issue. Since you are using an Azure Active Directory application, you also have to assign the "Storage Blob Data Contributor" role to that application.

Below are the steps for granting the Storage Blob Data Contributor role to the registered application:

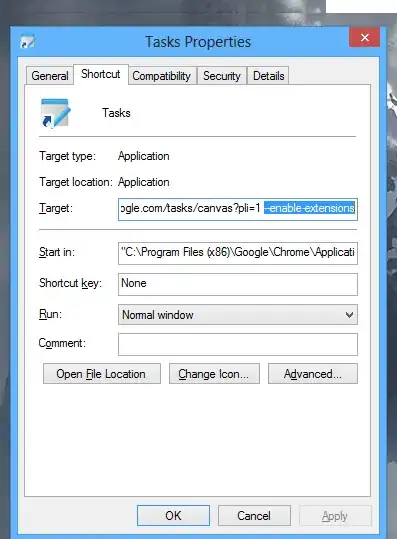

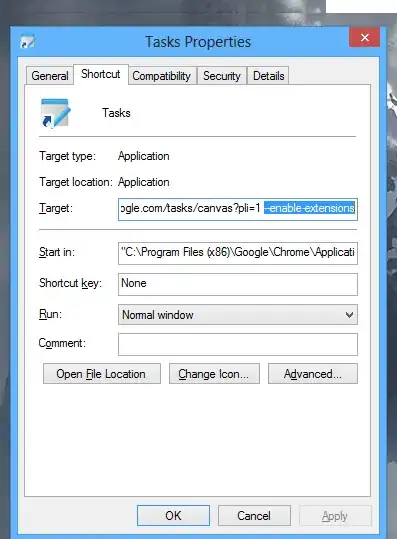

1. Select your ADLS Gen2 storage account, navigate to Access Control (IAM), and select Add role assignment.

2. Select the Storage Blob Data Contributor role, then search for and select your registered Azure Active Directory application, and assign the role.

   Back in the Access Control (IAM) tab, search for your AAD app and check its access.

3. Run `dbutils.fs.ls("/mnt/<mount-point>")` again to confirm access.
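As a command-line alternative to steps 1 and 2, the same role assignment can be made with `az role assignment create`. This is a sketch under the assumption that the Azure CLI is installed and you are signed in with an account allowed to assign roles on the storage account; the function name and `<...>` placeholders are hypothetical.

```python
import shlex
from typing import List

ROLE_NAME = "Storage Blob Data Contributor"


def assign_blob_contributor_cmd(
    app_id: str, subscription_id: str, resource_group: str, account: str
) -> List[str]:
    # Hypothetical helper: scope the assignment to the storage account's ARM resource ID.
    scope = (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Storage/storageAccounts/{account}"
    )
    # Returning an argument list (rather than a shell string) avoids quoting
    # problems with the space-separated role name when run via subprocess.
    return [
        "az", "role", "assignment", "create",
        "--assignee", app_id,
        "--role", ROLE_NAME,
        "--scope", scope,
    ]


if __name__ == "__main__":
    cmd = assign_blob_contributor_cmd(
        "<application-client-id>", "<subscription-id>", "<resource-group>", "<storage-account>"
    )
    # Print a shell-ready version of the command for copy/paste.
    print(shlex.join(cmd))
```

To execute it directly, pass the list to `subprocess.run(cmd, check=True)`. Role assignments can take several minutes to take effect, so if step 3 still returns a 403 right after assigning the role, wait and retry.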