I am following this book and trying to visualize the network it builds. This part seems tricky to me, so I am trying to get my head around it by drawing it:

    import numpy as np
    import nnfs
    from nnfs.datasets import spiral_data

    nnfs.init()


    class Layer_Dense:

        # Layer initialization
        def __init__(self, n_inputs, n_neurons):
            # Initialize weights and biases
            self.weights = 0.01 * np.random.randn(n_inputs, n_neurons)
            self.biases = np.zeros((1, n_neurons))

        # Forward pass
        def forward(self, inputs):
            # Calculate output values from inputs, weights and biases
            self.output = np.dot(inputs, self.weights) + self.biases


    # ReLU activation
    class Activation_Relu:

        # Forward pass
        def forward(self, inputs):
            # Calculate output values from inputs
            self.output = np.maximum(0, inputs)


    # Create dense layer with 2 input features and 3 output values
    dense1 = Layer_Dense(2, 3)

    # Create ReLU activation which will be used with the dense layer
    activation1 = Activation_Relu()

    # Create second dense layer with 3 input features from the previous layer and 3 output values
    dense2 = Layer_Dense(3, 3)

    # Create dataset
    X, y = spiral_data(samples=100, classes=3)

    dense1.forward(X)
    activation1.forward(dense1.output)
    dense2.forward(activation1.output)

My input data X is an array of 300 rows and 2 columns, meaning each of my 300 inputs has 2 values that describe it.

The Layer_Dense class is initialized with parameters (2, 3) meaning that there are 2 inputs and 3 neurons.
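To check the shapes without depending on nnfs, here is a minimal sketch of the same forward pass. It assumes random stand-in data in place of the spiral dataset (`X_demo` and `rng` are names made up for this illustration), so only the shapes are meaningful, not the values.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for X: 300 samples with 2 features each (random values, NOT the spiral data)
X_demo = rng.standard_normal((300, 2))

# Same shapes as Layer_Dense(2, 3): one row per input feature, one column per neuron
weights = 0.01 * rng.standard_normal((2, 3))
biases = np.zeros((1, 3))

# (300, 2) dot (2, 3) gives (300, 3); the (1, 3) biases broadcast over all 300 rows
output = np.dot(X_demo, weights) + biases

print(X_demo.shape, weights.shape, output.shape)
```

The inner dimension of the product is the number of features (2), which seems to be why the weight matrix has 2 rows regardless of how many samples are passed in.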

At the moment my variables look like this:

    X.shape  # (300, 2)

    X[:5]
    # [[ 0.        ,  0.        ],
    #  [ 0.00279763,  0.00970586],
    #  [-0.01122664,  0.01679536],
    #  [ 0.02998079,  0.0044075 ],
    #  [-0.01222386,  0.03851056]]

    dense1.weights.shape
    # (2, 3)

    dense1.weights
    # [[0.00862166, 0.00508044, 0.00461094],
    #  [0.00965116, 0.00796512, 0.00558731]]

    dense1.biases
    # [[0., 0., 0.]]

    dense1.output.shape
    # (300, 3)

    print(dense1.output[:5])
    # [[0.0000000e+00 0.0000000e+00 0.0000000e+00]
    #  [8.0659374e-05 4.3710388e-05 6.5012209e-05]
    #  [1.5923499e-04 6.9124777e-05 1.0470775e-04]
    #  [2.3033096e-04 1.9152602e-04 2.7749798e-04]
    #  [1.9318146e-04 3.1980115e-04 4.5189835e-04]]

Does this configuration make my network look like so:

Where each of 300 inputs has 2 features

Or like so:

Do I understand this correctly:

- There are 300 inputs with 2 features each

- Each input is connected to 3 neurons in the first layer; since it's connected to 3 neurons, there are 3 weights

- Why is the shape of the weights (2, 3) instead of (300, 3), given that there are 300 inputs with 2 features each and each feature is connected to 3 neurons?
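One way to probe the last point is to push the samples through the layer one at a time and compare with the batched result. This is only a sketch under the assumption of random stand-in data (`X_demo`, `batched` and `one_by_one` are hypothetical names, not from the book), but it suggests that the same 6 weights are reused for every sample rather than each sample getting its own set.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical stand-in for the spiral data: 300 samples, 2 features each
X_demo = rng.standard_normal((300, 2))
weights = 0.01 * rng.standard_normal((2, 3))
biases = np.zeros((1, 3))

# Batched forward pass, written the same way as in Layer_Dense.forward
batched = np.dot(X_demo, weights) + biases

# Same computation one sample at a time, reusing the same weights for each sample
one_by_one = np.array([np.dot(sample, weights) + biases[0] for sample in X_demo])

print(np.allclose(batched, one_by_one))  # True
print(weights.size)                      # 6
```

If that reading is right, the 300 rows of X are 300 separate passes through a network with 2 input nodes and 3 neurons, not 300 input nodes.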

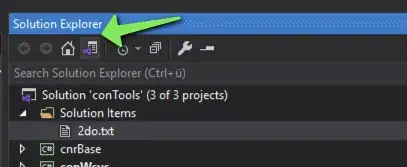

I have used this to draw networks.