Direct Answer

- Yes, the server can send any kind of data (everything is just a sequence of binary numbers, even text and characters). It is the client that tries to make sense of the content, usually by following certain standards.

- If you don't need to manipulate the image, I suggest you avoid making any request yourself, since the browser can handle it for you. Note that fetch is strictly subject to CORS and, by default, does not send cross-origin cookies (see MDN - using fetch). If you need more control you can use XMLHttpRequest. The use of Blob is inherited from older web approaches, from the time before ArrayBuffer existed, when it was the only wrapper for binary data in web environments. It is still supported for many reasons.

- Actually fewer than you think (just 1)! See the explanation below...

- Image resources depend heavily on what kind of processing you need... Just displaying an image? MDN and CSS-tricks are full of tips, you just need to search for them. If you want to process an image instead, you need to take a closer look at canvas elements; the usual resources are scarce or mostly about game making (for obvious reasons), so MDN and a few CSS-tricks articles are the main references.

Explanation

What is an image?

I think you have a bias toward the concept of a browser displaying an image.

So what is an image, really? Binary data.

Practically, there is only one way for your browser (or your computer in general) to display an image: to have a byte array that is a flattened view of the pixels of your image. So at the end of the day you will ALWAYS need to feed your browser binary data that can be interpreted as a raw image (usually a set of rgb(a) pixels).

Yes, there is only "ONE" way to display an image on a computer. But there are different ways we can represent that image.

Encoding

At a low level, different computers represent numbers in different ways, so the web standards settled on representing images in the so-called RGB8 or RGBA8 encoding (Red-Green-Blue(-Alpha)-NumBits, 8 bits = 1 Byte). This means that, in RGBA8, each pixel is represented by 4 bytes (numbers), each ranging from 0 to 255. This array is the only thing your browser sees as an image.

In the end your image is something like this:

// using color(x, y) to describe the image pixels

[ red(0, 0), green(0, 0), blue(0, 0), alpha(0, 0), red(1, 0), ... ] =

[ 124, 12, 123, 255, 122, ... ]
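As a small illustration of this layout, the sketch below computes where a pixel lives in the flat array. The helper names `pixelOffset` and `readPixel` and the 2×1 sample image are assumptions made for this example, not part of any browser API.

```javascript
// Each pixel takes 4 consecutive bytes: R, G, B, A.
const BYTES_PER_PIXEL = 4;

// Index of the red byte of pixel (x, y) in a row-major RGBA array.
function pixelOffset(x, y, width) {
  return (y * width + x) * BYTES_PER_PIXEL;
}

// Read one pixel back as a plain object.
function readPixel(data, x, y, width) {
  const i = pixelOffset(x, y, width);
  return { r: data[i], g: data[i + 1], b: data[i + 2], a: data[i + 3] };
}

// A 2x1 sample image (two pixels, values chosen for the demo).
const samplePixels = new Uint8ClampedArray([124, 12, 123, 255, 122, 12, 56, 255]);
const secondPixel = readPixel(samplePixels, 1, 0, 2);
console.log(secondPixel); // { r: 122, g: 12, b: 56, a: 255 }
```

The same offset formula is what you would use on the pixel data of a canvas, since it is stored row by row in exactly this order.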

Now that you can see an image as a linear array of pixels, we can decide how to write it down on a piece of paper (our "code"). The browser (usually and historically) parses everything sent over the web in an HTML file as plain text, so we must use characters to describe our image; the standard is to use ONLY UTF-8 (a character encoding, superset of ASCII). We can write it in JS as an array of numbers, for example.

But take a look at the number 255. Each time you send that number over the network you are sending 3 characters: '2', '5', '5'. The web communicates only with characters so... is there a way to make a compact representation of that number, in order to send as few bytes as possible (saving those who have a slow connection!)?

Base64 is the best-known encoding used to write that linear array compactly. Instead of writing each byte as a decimal number, it uses an alphabet of 64 characters (A-Z, a-z, 0-9, '+' and '/'), so every character carries 6 bits and every group of 3 bytes becomes exactly 4 characters. A byte such as 255 therefore costs about 1.33 characters instead of 3 digits plus a separator. When the number of bytes is not a multiple of 3, the output is padded with '=' (that's why you will sometimes see one or two '=' at the end).
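In concrete terms, Base64 reads 3 bytes (24 bits) at a time and writes them as 4 characters of 6 bits each. The following is a minimal sketch of that idea; `toBase64` and `ALPHABET` are names chosen for this example, and in real code you would normally rely on the built-in `btoa` instead.

```javascript
// The standard Base64 alphabet: index 0..63 -> character.
const ALPHABET =
  "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

// Encode an array (or typed array) of bytes as a Base64 string.
function toBase64(bytes) {
  let out = "";
  for (let i = 0; i < bytes.length; i += 3) {
    const hasSecond = i + 1 < bytes.length;
    const hasThird = i + 2 < bytes.length;
    // Pack up to 3 bytes into one 24-bit number (missing bytes count as 0).
    const chunk =
      (bytes[i] << 16) |
      ((hasSecond ? bytes[i + 1] : 0) << 8) |
      (hasThird ? bytes[i + 2] : 0);
    // Cut the 24 bits into four 6-bit indexes; pad with '=' when bytes are missing.
    out += ALPHABET[(chunk >> 18) & 63];
    out += ALPHABET[(chunk >> 12) & 63];
    out += hasSecond ? ALPHABET[(chunk >> 6) & 63] : "=";
    out += hasThird ? ALPHABET[chunk & 63] : "=";
  }
  return out;
}

console.log(toBase64([255]));          // "/w=="
console.log(toBase64([124, 12, 123])); // "fAx7"
```

The single byte 255 needs two characters plus two '=' of padding, while a full group of three bytes needs exactly four characters and no padding.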

Take a look at different representations of the same image:

// JavaScript Array
[ /* pixel 1 */ 124, 12, 123, 255, /* pixel 2 */ 122, 12, 56, 255 ] // 67 characters
// (30 without spaces and comments)

// Base64
fAx7/3oMOP8= // 12 characters

// Base32
PQGHX732BQ4P6=== // 16 characters (always >= Base64)

It's easy to see why Base64 was chosen as the common algorithm (and this example covers just 2 pixels).

(Base32 image example)

Formats

Now imagine sending a 4K image over the web, with dimensions of 3'656 × 2'664. This means sending 9'739'584 pixels over the internet, at 4 bytes each, for a grand total of 38'958'336 bytes (~39MB). Imagine, too, what a waste that is if the image is completely black (we could describe the whole image with just one pixel)... That's too much (especially for slow connections), which is why algorithms were invented to describe an image in a more compact way; we call them image formats. PNG and JPEG/JPG are examples of formats that compress the image (a 4K JPG is ~8MB, while a 4K PNG can vary from ~2MB to ~22MB depending on certain parameters and on the image itself).

Some take compression a step further by applying gzip, a generic compression format, on top of the already encoded image (or any other kind of file).
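The arithmetic above is easy to reproduce. This is only a sketch of the calculation; `rawImageBytes` is a hypothetical helper name, and the default of 4 bytes per pixel assumes RGBA8.

```javascript
// Size in bytes of an uncompressed image (RGBA8 by default).
function rawImageBytes(width, height, bytesPerPixel = 4) {
  return width * height * bytesPerPixel;
}

const rawSize = rawImageBytes(3656, 2664);
console.log(rawSize);                            // 38958336
console.log((rawSize / 1e6).toFixed(1) + " MB"); // "39.0 MB"
```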

Drawing on the Browser

At the end of this journey you have just two different ways in which browsers allow you to draw content: URI and ArrayBuffer.

- URI: you can use it with <img> and CSS, by setting the src property of the element or by setting any style property that accepts an image URL as input.

- ArrayBuffer: by manipulating the <canvas>.context buffer (which is just the linear array we discussed above).

Obviously, browsers also allow you to convert or switch between the two.

URI

URIs are the way we identify a certain content, which can be a stored resource (URL - all protocols but data, for example http(s)://, ws(s):// or file://) or a buffer described directly by a string (the data protocol).

When you ask for an image by setting the src property, your browser parses the URL and, if it points to remote content, makes a request to retrieve it and parses the content in the proper way (format & encoding). In the same way, when you make a fetch call you are asking the browser to request the remote content; the fetch response can then be read in different ways:

- textual, just a bunch of characters (usually used to parse JSON/DOM/XML)

- binary data, divided into:

  - ArrayBuffer, which is a representation of the linear array of bytes we discussed above

  - Blob, which is an abstract representation of a generic file-like object (which also encapsulates an internal ArrayBuffer). The Blob is something like a pointer to a file-like entity in the browser cache, so you don't need to download/request the same file multiple times.

// ArrayBuffer from fetch:

fetch(myRequest).then(function(response) {

return response.arrayBuffer();

})

// Blob from fetch

fetch(myRequest).then(function(response) {

return response.blob();

})

// ArrayBuffer from Blob

blob.arrayBuffer();
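To see the relationship between a Blob and its bytes without any network request, here is a minimal sketch that builds a Blob by hand. The eight sample bytes and the variable names are assumptions for this demo; a real response would carry an encoded image file instead.

```javascript
// Eight bytes standing in for a real file's content (assumption for the demo).
const fileBytes = new Uint8Array([124, 12, 123, 255, 122, 12, 56, 255]);

// A Blob wraps the bytes and tags them with a MIME type.
const demoBlob = new Blob([fileBytes], { type: "application/octet-stream" });
console.log(demoBlob.size); // 8
console.log(demoBlob.type); // "application/octet-stream"

// slice() returns a new Blob covering only a byte range of the original.
const firstFourBytes = demoBlob.slice(0, 4);
console.log(firstFourBytes.size); // 4

// Reading the bytes back is asynchronous: arrayBuffer() returns a Promise.
demoBlob.arrayBuffer().then(function (buf) {
  console.log(new Uint8Array(buf)[3]); // 255
});
```

Size and type are available immediately, while the actual content is only handed over through a Promise, which is why Blob works well as a lightweight handle to file-like data.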

So now you have to tell the browser how to make sense of the content you get back from the response. You need to convert the data to a parsable URL:

// for base64 encoding (defined first, because it is used right below)
var encodeBuffer = function(buffer) { return window.btoa(String.fromCharCode.apply(null, buffer)); };

// format is a MIME type such as 'image/png', encoding is 'base64'
var encodedURI = `data:${format};${encoding},` + encodeBuffer(new Uint8Array(arrayBuffer));
image.src = encodedURI;

// for blobs
image.src = (window.URL || window.webkitURL).createObjectURL(blob);

Note that data URLs support only two encodings: Base64 (marked with ;base64) and percent-encoded text. Base32 is not supported and, as we saw above, it would not be convenient to use anyway; there is also no built-in function like btoa to encode a buffer in Base32.

Note also that the format value can be any kind of MIME type, such as image/png, image/jpeg, image/gif, image/svg+xml, text/plain, text/html, text/xml, application/octet-stream, etc. Obviously, only image types are then shown as images.
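One practical caveat of the encodeBuffer helper above: String.fromCharCode.apply passes every byte as a separate argument, which can exceed the engine's argument limit for large buffers. A possible workaround, sketched here under the assumption that `btoa` is available, is to convert the bytes in chunks. The names `bytesToBinaryString` and `bytesToDataUrl` and the chunk size are choices made for this example.

```javascript
// Convert bytes to a "binary string" in chunks, so that
// String.fromCharCode.apply never receives too many arguments at once.
function bytesToBinaryString(bytes, chunkSize = 0x8000) {
  let result = "";
  for (let i = 0; i < bytes.length; i += chunkSize) {
    result += String.fromCharCode.apply(null, bytes.subarray(i, i + chunkSize));
  }
  return result;
}

// Build a Base64 data URL from a Uint8Array and a MIME type.
function bytesToDataUrl(bytes, mimeType) {
  return "data:" + mimeType + ";base64," + btoa(bytesToBinaryString(bytes));
}

const sampleBytes = new Uint8Array([124, 12, 123]);
console.log(bytesToDataUrl(sampleBytes, "application/octet-stream"));
// data:application/octet-stream;base64,fAx7
```

For real images, the bytes passed in should be the encoded file (PNG, JPEG, ...) and the MIME type should match it, otherwise the browser will not be able to display the result.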

When the resource is not requested from a remote server (with a file:// or data protocol) the image is loaded locally, so as soon as you set the URL, the browser will read it, decode it and put the buffer on display in your image. This has two consequences:

- The data is managed locally (no internet connection required)

- The data has to be processed in one go, so if the image is big your computer will be stuck processing it until the end (see, in the special section at the end, why it is bad practice to use the data protocol for videos or huge data)

URL vs URI

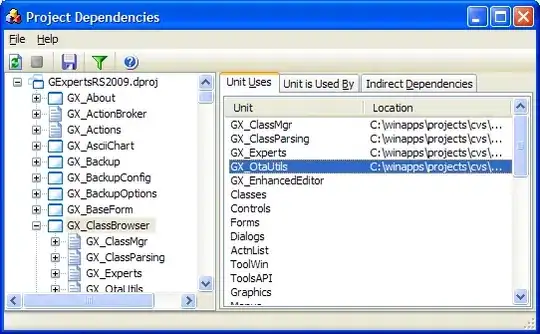

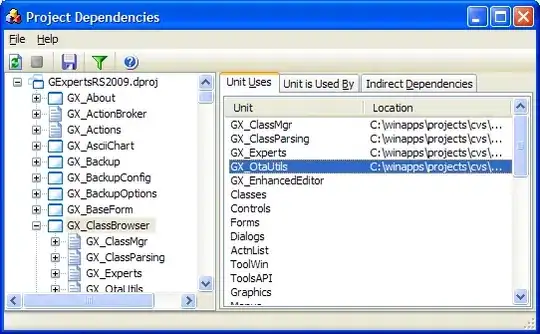

URI is a generic identifier for a resource, URL is an identifier for a location where to retrieve the resource. Usually in a browser context are almost an overlapped concept, I found this image explain better than thousand words:

The data is pratically an URI, every request with a protocol is actually an URL
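If you want to tell the two cases apart in code, the standard URL parser exposes the protocol of any of these strings. This is just an illustrative sketch; `protocolOf` is a hypothetical helper and the example addresses are made up.

```javascript
// Return the protocol part of a URI, as seen by the standard URL parser.
function protocolOf(uri) {
  return new URL(uri).protocol;
}

console.log(protocolOf("https://example.com/cat.png")); // "https:"
console.log(protocolOf("file:///tmp/cat.png"));         // "file:"
console.log(protocolOf("data:image/png;base64,fAx7"));  // "data:"
```

Only the last one carries its content inside the string itself; the others tell the browser where to go and fetch it.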

Side Note

In your question you write this as an alternative method by "setting the image element url":

fetch(imageUrl)

.then((response)=>response.blob())

.then((blob)=>{

const objectUrl = URL.createObjectURL(blob)

document.querySelector("#myImage").src = objectUrl; // <-- setting url!

})

But watch out: you are actually setting the image element's source URL here too!

Canvas

The <canvas> element gives you full control over an image buffer, including the ability to process it further. You can literally draw your array into it:

var canvas = document.getElementById('mycanvas');
// or an offscreen canvas (not attached to the page):
// var canvas = document.createElement('canvas');
canvas.width = myWidth;
canvas.height = myHeight;
var context = canvas.getContext('2d');

// example from an array buffer (from fetch, Blob.arrayBuffer() or new ArrayBuffer(size));
// it must hold raw RGBA bytes (not an encoded PNG/JPEG file),
// so its length must be myWidth * myHeight * 4
var buffer = new Uint8ClampedArray(arrayBuffer);
var imageData = new ImageData(buffer, myWidth, myHeight);
context.putImageData(imageData, 0, 0);

// example image from an array (2 pixels)
var data = [
  // R    G    B    A
  255, 255, 255, 255, // white pixel
  255,   0,   0, 255  // red pixel
];
var pixelBuffer = new Uint8ClampedArray(data);
var pixelImageData = new ImageData(pixelBuffer, 2, 1);
context.putImageData(pixelImageData, 0, 0);

(Note that ImageData expects an RGBA array)
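Since the buffer is plain data, you can also generate it programmatically before handing it to ImageData. The sketch below fills an RGBA buffer with a single color; `solidImage` is a hypothetical helper name, and the final putImageData call is shown only as a comment because it needs a browser canvas.

```javascript
// Build a width x height RGBA buffer filled with one color.
function solidImage(width, height, color) {
  const data = new Uint8ClampedArray(width * height * 4);
  for (let i = 0; i < data.length; i += 4) {
    data[i] = color[0];     // red
    data[i + 1] = color[1]; // green
    data[i + 2] = color[2]; // blue
    data[i + 3] = color[3]; // alpha
  }
  return data;
}

const redSquare = solidImage(2, 2, [255, 0, 0, 255]);
console.log(redSquare.length); // 16 (2 * 2 pixels * 4 bytes)

// Uint8ClampedArray clamps out-of-range values instead of wrapping them.
const clamped = new Uint8ClampedArray([300, -20]);
console.log(clamped[0], clamped[1]); // 255 0

// In a browser:
// context.putImageData(new ImageData(redSquare, 2, 2), 0, 0);
```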

To get back the ArrayBuffer of raw pixels you can do the following (to plug the result back into image.src afterwards, use canvas.toDataURL() or canvas.toBlob(), which encode it as an image file):

var imageData = context.getImageData(0, 0, canvas.width, canvas.height);

var buffer = imageData.data; // Uint8ClampedArray

var arrayBuffer = buffer.buffer; // ArrayBuffer
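One detail worth knowing here: the .buffer property does not copy anything, it exposes the same memory the typed array is already using. A minimal sketch with made-up pixel values (all variable names are assumptions for this demo):

```javascript
// Two RGBA pixels: white and red.
const rgba = new Uint8ClampedArray([255, 255, 255, 255, 255, 0, 0, 255]);

// .buffer is the underlying ArrayBuffer, shared with the typed array.
const sharedBuffer = rgba.buffer;
const sameMemory = new Uint8Array(sharedBuffer);

sameMemory[1] = 0;    // change the green byte of the first pixel
console.log(rgba[1]); // 0 (the change is visible through both views)

// slice() makes an independent copy of the bytes.
const copiedBuffer = sharedBuffer.slice(0);
new Uint8Array(copiedBuffer)[0] = 7;
console.log(rgba[0]); // 255 (the original is untouched)
```

So if you need to keep the original pixels around while you process them, copy the buffer first.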

This is an example of how to process an image:

// reading image
var image = document.getElementById('myimage');
image.onload = function() {
  // match the canvas size to the image, then load the image into the canvas
  canvas.width = image.naturalWidth;
  canvas.height = image.naturalHeight;
  context.drawImage(image, 0, 0);
  // process your image
  context.fillRect(20, 20, 150, 100);
  // (the image must be same-origin or CORS-enabled, otherwise the canvas is tainted)
  var imageData = context.getImageData(0, 0, canvas.width, canvas.height);
  imageData.data[0] = 255;
  // write the modified pixels back to the canvas
  context.putImageData(imageData, 0, 0);
  // converting back to a base64 data URL (encoded as PNG)
  var resultUrl = canvas.toDataURL('image/png');
  // disabling onload, then setting the image url
  image.onload = null;
  image.src = resultUrl;
};
// note: src is set after onload has been assigned
image.src = 'ANY-URL';

For this part I suggest you take a look at the Canvas Tutorial on MDN.
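As a slightly more realistic processing step than changing a single byte, here is a sketch of a grayscale filter that works directly on the RGBA array (for example on imageData.data). The function name `toGrayscale` is hypothetical, and the weights are the common luma coefficients, picked here as an assumption; other weightings are possible.

```javascript
// Convert RGBA pixel data to grayscale in place; alpha is left untouched.
function toGrayscale(data) {
  for (let i = 0; i < data.length; i += 4) {
    const luma = Math.round(
      0.299 * data[i] + 0.587 * data[i + 1] + 0.114 * data[i + 2]
    );
    data[i] = luma;     // red
    data[i + 1] = luma; // green
    data[i + 2] = luma; // blue
  }
  return data;
}

// Two pixels: white and red.
const grayDemo = new Uint8ClampedArray([255, 255, 255, 255, 255, 0, 0, 255]);
toGrayscale(grayDemo);
console.log(Array.from(grayDemo)); // [ 255, 255, 255, 255, 76, 76, 76, 255 ]

// In a browser:
// var imageData = context.getImageData(0, 0, canvas.width, canvas.height);
// toGrayscale(imageData.data);
// context.putImageData(imageData, 0, 0);
```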

SPECIAL

Audio and video are treated in the same way, but you must also encode and format the time and sound dimensions in some way. You can load an audio/video from a base64 string (not such a good idea for videos) or display a video on a canvas.