I set the Docker memory limit to 50 GB, but the container only uses 12.64 GB (isolation: process)

Where did I make a mistake?

daemon.json

    {
      "registry-mirrors": [],
      "insecure-registries": [],
      "debug": true,
      "experimental": false,
      "storage-opt": [ "dm.basesize=40G" ],
      "hosts": ["tcp://10.0.0.32:2376", "npipe://"]
    }

kill moment

Usings:

    using System.Collections.Generic;
    using System.Globalization;
    using Docker.DotNet;
    using Docker.DotNet.Models;

Setting the memory:

    return await client.Containers.CreateContainerAsync(
        new CreateContainerParameters
        {
            Env = environmentVariables,
            Name = containerName,
            Image = imageName,
            ExposedPorts = new Dictionary<string, EmptyStruct>
            {
                { "80", default(EmptyStruct) }
            },
            HostConfig = new HostConfig
            {
                // Memory limit for the container, in bytes
                Memory = containerMemory,
                // Windows process isolation (as opposed to Hyper-V isolation)
                Isolation = "process",
                CPUCount = numberOfCores,
                // Bind container port 80 to the chosen host port
                PortBindings = new Dictionary<string, IList<PortBinding>>
                {
                    {
                        "80",
                        new List<PortBinding>
                        {
                            new PortBinding { HostPort = port.ToString(CultureInfo.InvariantCulture) }
                        }
                    }
                },
                PublishAllPorts = true
            }
        }).ConfigureAwait(false);
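
For reference, `HostConfig.Memory` takes the limit in bytes. Here is a minimal sketch of how a value like `containerMemory` could be derived for a 50 GB limit, assuming binary gigabytes. The `MemoryLimits` class and the `GigabytesToBytes` helper are hypothetical names used only for illustration; they are not part of Docker.DotNet.

```csharp
using System;

// Hypothetical helper for illustration only; not part of Docker.DotNet.
public static class MemoryLimits
{
    // Assumption: "GB" means binary gigabytes (1024^3 bytes).
    private const long BytesPerGigabyte = 1024L * 1024L * 1024L;

    // Converts a whole number of gigabytes to bytes.
    // Checked arithmetic throws OverflowException instead of wrapping silently.
    public static long GigabytesToBytes(long gigabytes)
    {
        if (gigabytes < 0)
        {
            throw new ArgumentOutOfRangeException(nameof(gigabytes));
        }

        return checked(gigabytes * BytesPerGigabyte);
    }
}
```

With this sketch, `MemoryLimits.GigabytesToBytes(50)` gives 53687091200, which is the kind of value that would be assigned to `HostConfig.Memory`.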

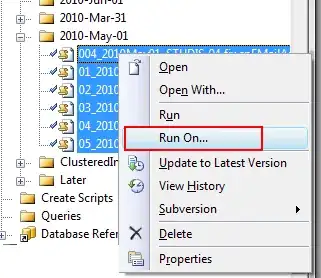

In the current Docker version, I cannot set a RAM limit for the machine. I think the resources linked in the comments are much older than the current Docker version.