I am using a pre-trained BERT sentence transformer model, as described here https://www.sbert.net/docs/training/overview.html , to get embeddings for sentences.

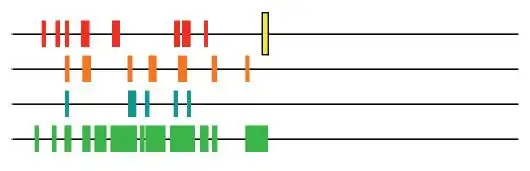

I want to fine-tune these pre-trained embeddings, and I am following the instructions in the tutorial i have linked above. According to the tutorial, you fine-tune the pre-trained model by feeding it sentence pairs and a label score that indicates the similarity score between two sentences in a pair. I understand this fine-tuning happens using the architecture shown in the image below:

Each sentence in a pair is first encoded using the BERT model, and then the "pooling" layer aggregates (usually by taking the average) the word embeddings produced by the BERT layer into a single embedding for each sentence. In the final step, the cosine similarity of the two sentence embeddings is computed and compared against the label score.
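To make sure I am reading the architecture correctly, here is a minimal sketch of my understanding in plain Python. The token embeddings are made-up numbers standing in for the BERT output, the function names are my own (not from the library), and using squared error to compare the cosine similarity against the label is my assumption about what "compared" means here.

```python
import math

def mean_pool(token_embeddings):
    """Average the token vectors (one list of floats per token) into one sentence vector."""
    n_tokens = len(token_embeddings)
    dim = len(token_embeddings[0])
    return [sum(tok[d] for tok in token_embeddings) / n_tokens for d in range(dim)]

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def similarity_loss(tokens_a, tokens_b, label):
    """Squared error between the predicted cosine similarity and the label (assumed loss)."""
    emb_a = mean_pool(tokens_a)
    emb_b = mean_pool(tokens_b)
    return (cosine_similarity(emb_a, emb_b) - label) ** 2

# Toy token embeddings standing in for the BERT output of each sentence (hypothetical values).
tokens_a = [[1.0, 0.0], [0.0, 1.0]]  # pools to [0.5, 0.5]
tokens_b = [[2.0, 2.0], [4.0, 4.0]]  # pools to [3.0, 3.0]

similarity = cosine_similarity(mean_pool(tokens_a), mean_pool(tokens_b))
loss = similarity_loss(tokens_a, tokens_b, label=0.8)
```

In this sketch the pooling and cosine steps have no trainable weights of their own, so any gradient of the loss would have to flow back into whatever produced the token embeddings, which is the part I am unsure about.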

My question is: which parameters are optimized when fine-tuning the model with this architecture? Are only the parameters of the last layer of the BERT model fine-tuned? This is not clear to me from the code example shown in the tutorial.