I found this fast script here in Stack Overflow for perform PCA with a given numpy array.

I don't know how to plot this in 3D, and also plot in 3D the Cumulative Explained Variances and the Number of Components. This fast script was perform with covariance method, and not with singular value decomposition, maybe that's the reason why I can't get my Cumulative Variances?

I tried to plotting with this, but it doesn't work.

This is the code and my output:

from numpy import array, dot, mean, std, empty, argsort

from numpy.linalg import eigh, solve

from numpy.random import randn

from matplotlib.pyplot import subplots, show

def cov(X):

"""

Covariance matrix

note: specifically for mean-centered data

note: numpy's `cov` uses N-1 as normalization

"""

return dot(X.T, X) / X.shape[0]

# N = data.shape[1]

# C = empty((N, N))

# for j in range(N):

# C[j, j] = mean(data[:, j] * data[:, j])

# for k in range(j + 1, N):

# C[j, k] = C[k, j] = mean(data[:, j] * data[:, k])

# return C

def pca(data, pc_count = None):

"""

Principal component analysis using eigenvalues

note: this mean-centers and auto-scales the data (in-place)

"""

data -= mean(data, 0)

data /= std(data, 0)

C = cov(data)

E, V = eigh(C)

key = argsort(E)[::-1][:pc_count]

E, V = E[key], V[:, key]

U = dot(data, V)

print(f'Eigen Values: {E}')

print(f'Eigen Vectors: {V}')

print(f'Key: {key}')

print(f'U: {U}')

print(f'shape: {U.shape}')

return U, E, V

data = dftransformed.transpose() # df tranpose and convert to numpy

trans = pca(data, 3)[0]

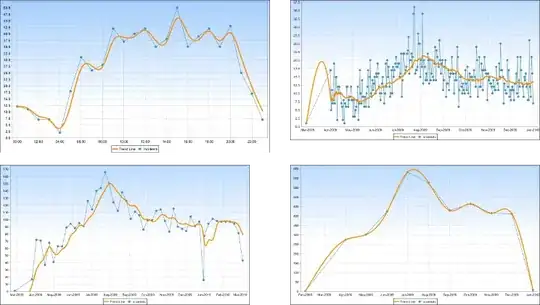

fig, (ax1, ax2) = subplots(1, 2)

ax1.scatter(data[:50, 0], data[:50, 1], c = 'r')

ax1.scatter(data[50:, 0], data[50:, 1], c = 'b')

ax2.scatter(trans[:50, 0], trans[:50, 1], c = 'r')

ax2.scatter(trans[50:, 0], trans[50:, 1], c = 'b')

show()

I understand the eigen values & eigen vectors, but I can't understand this key value, the user didn't comment this section of code in the answer, anyone knows what means each variable printed?

output:

Eigen Values: [126.30390621 68.48966957 26.03124927]

Eigen Vectors: [[-0.05998409 0.05852607 -0.03437937]

[ 0.00807487 0.00157143 -0.12352761]

[-0.00341751 0.03819162 0.08697668]

...

[-0.0210582 0.06601974 -0.04013712]

[-0.03558994 0.02953385 0.01885872]

[-0.06728424 -0.04162485 -0.01508154]]

Key: [439 438 437]

U: [[-12.70954048 8.97405411 -2.79812235]

[ -4.90853527 4.36517107 0.54129243]

[ -2.49370123 0.48341147 7.26682759]

[-16.07860635 6.16100749 5.81777637]

[ -1.81893291 6.48443689 -5.8655646 ]

[ 9.03939039 2.64196391 4.22056618]

[-14.71731064 9.19532016 -2.79275543]

[ 1.60998654 8.37866823 0.86207034]

[ -4.4503797 10.12688097 -5.12453656]

[ 12.16293556 2.2594413 -2.11730311]

[-15.76505125 9.48537581 -2.73906772]

[ -2.54289959 9.86768111 -4.84802992]

[ -5.78214902 9.21901651 -8.13594627]

[ -1.35428398 5.85550586 6.30553987]

[ 12.87261987 0.96283606 -3.26982121]

[ 24.57767477 -4.28214631 6.29510659]

[ 4.13941679 3.3688288 3.01194055]

[ -2.98318764 1.32775227 7.62610929]

[ -4.44461549 -1.49258339 1.39080386]

[ -0.10590795 -0.3313904 8.46363066]

[ 6.05960739 1.03091753 5.10875657]

[-21.27737352 -3.44453629 3.25115921]

[ -1.1183025 0.55238687 10.75611405]

[-10.6359291 7.58630341 -0.55088259]

[ 4.52557492 -8.05670864 2.23113833]

[-11.07822559 1.50970501 4.66555889]

[ -6.89542628 -19.24672805 -3.71322812]

[ -0.57831362 -17.84956249 -5.52002876]

[-12.70262277 -14.05542691 -2.72417438]

[ -7.50263129 -15.83723295 -3.2635125 ]

[ -7.52780216 -17.60790567 -2.00134852]

[ -5.34422731 -17.29394266 -2.69261597]

[ 9.40597893 0.21140292 2.05522806]

[ 12.12423431 -2.80281266 7.81182024]

[ 19.51224195 4.7624575 -11.20523383]

[ 22.38102384 0.82486072 -1.64716468]

[ -8.60947699 4.12597477 -6.01885407]

[ 9.56268414 1.18190655 -5.44074124]

[ 14.97675455 3.31666971 -3.30012109]

[ 20.47530869 -1.95896058 -1.91238615]]

shape: (40, 3)