A lot goes on under the hood to make Java and Python work together within Spark.

This is primarily a version compatibility issue between the different jars. Ensuring that the components are consistent with each other is a good starting point for tackling issues like this one.

Hadoop Version

Navigate to the location where Spark is installed. Making sure that all the *hadoop* jars share the same version is the first step.

[ vaebhav@localhost:/usr/local/Cellar/apache-spark/3.1.2/libexec/jars - 10:39 PM ]$ ls -lthr *hadoop-*

-rw-r--r-- 1 vaebhav root 79K May 24 10:15 hadoop-yarn-server-web-proxy-3.2.0.jar

-rw-r--r-- 1 vaebhav root 1.3M May 24 10:15 hadoop-yarn-server-common-3.2.0.jar

-rw-r--r-- 1 vaebhav root 221K May 24 10:15 hadoop-yarn-registry-3.2.0.jar

-rw-r--r-- 1 vaebhav root 2.8M May 24 10:15 hadoop-yarn-common-3.2.0.jar

-rw-r--r-- 1 vaebhav root 310K May 24 10:15 hadoop-yarn-client-3.2.0.jar

-rw-r--r-- 1 vaebhav root 3.1M May 24 10:15 hadoop-yarn-api-3.2.0.jar

-rw-r--r-- 1 vaebhav root 84K May 24 10:15 hadoop-mapreduce-client-jobclient-3.2.0.jar

-rw-r--r-- 1 vaebhav root 1.6M May 24 10:15 hadoop-mapreduce-client-core-3.2.0.jar

-rw-r--r-- 1 vaebhav root 787K May 24 10:15 hadoop-mapreduce-client-common-3.2.0.jar

-rw-r--r-- 1 vaebhav root 4.8M May 24 10:15 hadoop-hdfs-client-3.2.0.jar

-rw-r--r-- 1 vaebhav root 3.9M May 24 10:15 hadoop-common-3.2.0.jar

-rw-r--r-- 1 vaebhav root 43K May 24 10:15 hadoop-client-3.2.0.jar

-rw-r--r-- 1 vaebhav root 136K May 24 10:15 hadoop-auth-3.2.0.jar

-rw-r--r-- 1 vaebhav root 59K May 24 10:15 hadoop-annotations-3.2.0.jar

-rw-r--r--@ 1 vaebhav root 469K Oct 9 00:30 hadoop-aws-3.2.0.jar

[ vaebhav@localhost:/usr/local/Cellar/apache-spark/3.1.2/libexec/jars - 10:39 PM ]$
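The same check can be scripted. The following is a minimal sketch, not part of the original setup: it groups the `hadoop-*` jars in a directory by the version embedded in their file names, so a stray jar such as `hadoop-aws-2.7.3.jar` sitting next to `3.2.0` jars stands out. The helper names `group_hadoop_jars` and `hadoop_versions_in` are made up for illustration, and the regex assumes plain `hadoop-<name>-<version>.jar` file names like the ones in the listing above.

```python
import re
from collections import defaultdict
from pathlib import Path

# Matches names such as "hadoop-common-3.2.0.jar" and captures the version.
# Assumption: versions are purely numeric (no "-SNAPSHOT" style suffixes).
HADOOP_JAR = re.compile(r"^hadoop-[a-z-]+-(\d+(?:\.\d+)*)\.jar$")


def group_hadoop_jars(jar_names):
    """Map each version string to the sorted hadoop-* jar names carrying it."""
    versions = defaultdict(list)
    for name in jar_names:
        match = HADOOP_JAR.match(name)
        if match:
            versions[match.group(1)].append(name)
    return {version: sorted(names) for version, names in versions.items()}


def hadoop_versions_in(jars_dir):
    """Group the hadoop-* jars found directly inside jars_dir by version."""
    return group_hadoop_jars(p.name for p in Path(jars_dir).glob("hadoop-*.jar"))


if __name__ == "__main__":
    # Path taken from the listing above; substitute your own Spark jars directory.
    found = hadoop_versions_in("/usr/local/Cellar/apache-spark/3.1.2/libexec/jars")
    for version, names in sorted(found.items()):
        print(version, len(names))
    if len(found) > 1:
        print("Mixed Hadoop versions detected")
```

More than one version in the result is the signal to look closer. Some Spark distributions also ship helper jars whose names start with `hadoop-` but follow their own versioning, so treat a mismatch as a hint rather than proof.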

For further third-party connectivity such as S3, check the corresponding compile dependency on MVN Repository by searching for the respective jar, in your case hadoop-aws-2.7.3.jar

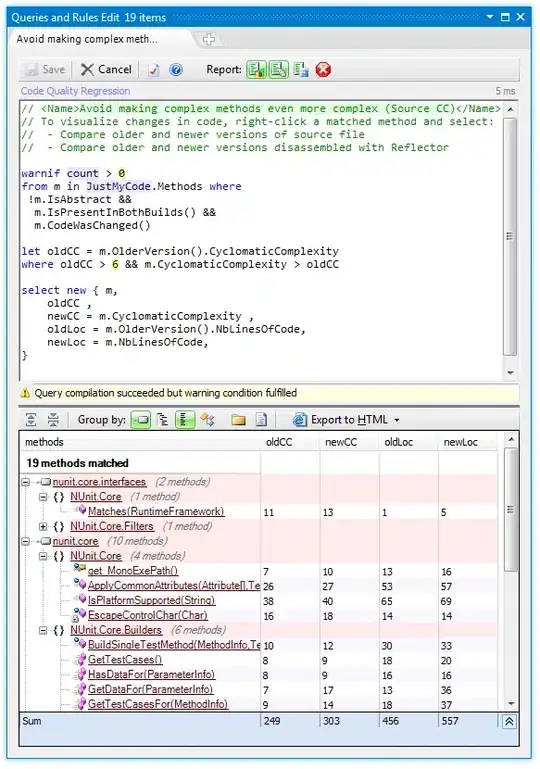

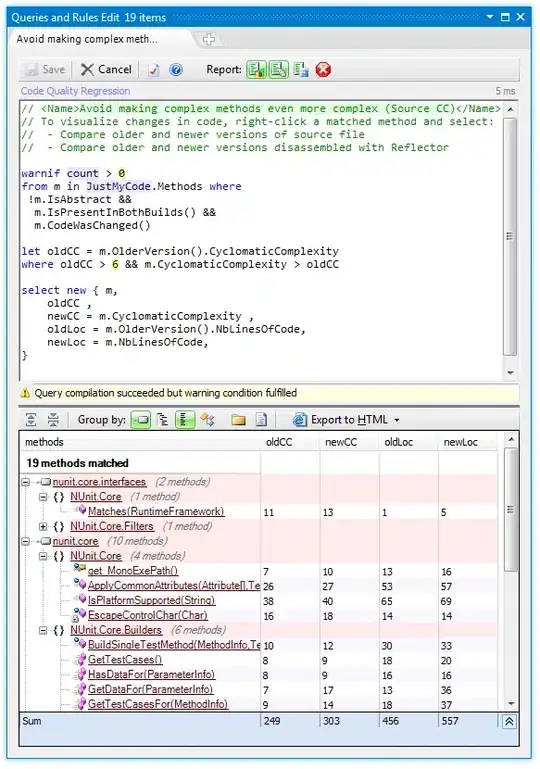

MVN Compile Dependency

Search for the respective artifact on MVN Repository and check which AWS SDK jar it lists under its compile dependencies.

These checks are a good entry point for making sure the correct dependencies are in place.
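An alternative to copying jars by hand is to let Spark resolve the artifact, together with its compile dependencies, from Maven. The sketch below only builds the coordinate and the command line; it assumes the Hadoop version is already known (for example `3.2.0` from the jar listing), and `job.py` as well as both helper names are hypothetical.

```python
def hadoop_aws_package(hadoop_version):
    """Return the Maven coordinate of hadoop-aws for the given Hadoop version."""
    return f"org.apache.hadoop:hadoop-aws:{hadoop_version}"


def spark_submit_args(hadoop_version, script):
    """Build a spark-submit command that pulls a matching hadoop-aws artifact."""
    return ["spark-submit", "--packages", hadoop_aws_package(hadoop_version), script]


# "job.py" is a placeholder script name used only for illustration.
print(" ".join(spark_submit_args("3.2.0", "job.py")))
```

The same coordinate can be supplied through the `spark.jars.packages` configuration property instead of the `--packages` flag. Either way, the point is that the hadoop-aws version should match the Hadoop jars Spark already ships with.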

After the dependencies are sorted, there are additional steps for S3 connectivity

PySpark S3 Example

S3 can currently be accessed through the s3a or s3n connectors. I have demonstrated how to set up s3a; the latter is fairly easy to implement as well

The differences between the implementations are explained in this brilliant answer

from pyspark import SparkContext

from pyspark.sql import SQLContext

import configparser

import os

sc = SparkContext.getOrCreate()

sql = SQLContext(sc)

hadoop_conf = sc._jsc.hadoopConfiguration()

config = configparser.ConfigParser()

config.read(os.path.expanduser("~/.aws/credentials"))

access_key = config.get("<aws-account>", "aws_access_key_id")

secret_key = config.get("<aws-account>", "aws_secret_access_key")

session_key = config.get("<aws-account>", "aws_session_token")

hadoop_conf.set("fs.s3a.endpoint", "s3.amazonaws.com")

hadoop_conf.set("fs.s3a.connection.ssl.enabled", "true")

hadoop_conf.set("fs.s3a.impl", "org.apache.hadoop.fs.s3a.S3AFileSystem")

hadoop_conf.set("fs.s3a.aws.credentials.provider", "org.apache.hadoop.fs.s3a.TemporaryAWSCredentialsProvider")

hadoop_conf.set("fs.s3a.access.key", access_key)

hadoop_conf.set("fs.s3a.secret.key", secret_key)

hadoop_conf.set("fs.s3a.session.token", session_key)

s3_path = "s3a://<s3-path>/"

sparkDF = sql.read.parquet(s3_path)
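The credential handling above can be pulled into a small helper that fails early when the profile is missing. This is a sketch under the same assumptions as the snippet above (temporary credentials with a session token stored in `~/.aws/credentials`); the function names `s3a_settings_from_profile` and `apply_settings` are made up for illustration.

```python
import configparser
import os


def s3a_settings_from_profile(profile, credentials_path="~/.aws/credentials"):
    """Read one profile from an AWS credentials file into fs.s3a.* settings."""
    config = configparser.ConfigParser()
    # config.read returns the list of files it managed to parse.
    if not config.read(os.path.expanduser(credentials_path)):
        raise FileNotFoundError(f"cannot read {credentials_path}")
    if not config.has_section(profile):
        raise KeyError(f"profile {profile!r} not found in {credentials_path}")
    section = config[profile]
    return {
        "fs.s3a.aws.credentials.provider":
            "org.apache.hadoop.fs.s3a.TemporaryAWSCredentialsProvider",
        "fs.s3a.access.key": section["aws_access_key_id"],
        "fs.s3a.secret.key": section["aws_secret_access_key"],
        "fs.s3a.session.token": section["aws_session_token"],
    }


def apply_settings(hadoop_conf, settings):
    """Copy each key/value pair onto an object exposing set(key, value)."""
    for key, value in settings.items():
        hadoop_conf.set(key, value)
```

With a running context this would be used as `apply_settings(sc._jsc.hadoopConfiguration(), s3a_settings_from_profile("<aws-account>"))`. A profile without an `aws_session_token` entry raises a `KeyError`, which is intended here because `TemporaryAWSCredentialsProvider` expects session credentials.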