I believe that when you launch Matillion ETL through Snowflake Partner Connect, you always get an AWS-hosted instance. That means you won't find the Azure Blob Storage Load component that you would have if you had launched an Azure-hosted instance of Matillion ETL directly from Matillion.

Instead, you will need two steps:

- Use a Data Transfer component to copy the file(s) from Azure Blob Storage into an AWS S3 bucket

- Use an S3 Load component to COPY the data from S3 into Snowflake

The Data Transfer component has to authenticate to Azure, so there is one prerequisite (which it looks like you have already started):

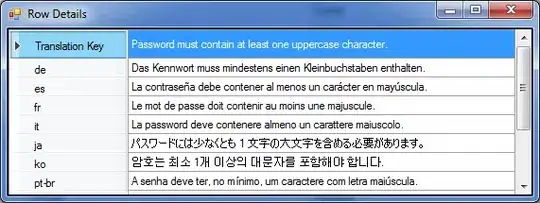

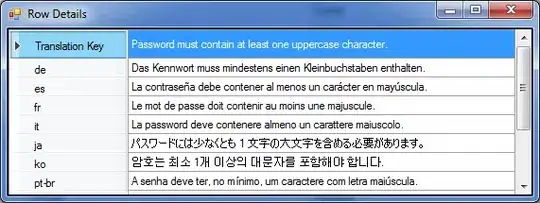

Go to the Project / Manage Credentials dialog and create a new Azure User-Defined Credential, setting:

- Tenant ID: find this in Azure Active Directory, under Basic Information

- Client ID: the Application (client) ID from your App Registration

- Secret Key: a client secret from "Certificates & secrets" inside your App Registration

- Encryption Type: how Matillion ETL stores the secret. You can use Encoded, or KMS if you have a Master Key.
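As far as I know, an App Registration with a client secret authenticates through the standard OAuth 2.0 client credentials flow, which is why those three values are all you need. The sketch below only builds (it does not send) the token request those values feed into. It assumes the Microsoft identity platform v2.0 token endpoint; the default `scope` is just one example (listing Storage Accounts would need the management scope `https://management.azure.com/.default` instead), and I am not claiming this is exactly what Matillion sends internally. The tenant, client and secret values are placeholders.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Standard Microsoft identity platform v2.0 token endpoint (tenant-specific).
TOKEN_URL = "https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"


def build_token_request(tenant_id: str, client_id: str, client_secret: str,
                        scope: str = "https://storage.azure.com/.default") -> Request:
    """Build, but do not send, a client-credentials token request."""
    body = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,          # Application (client) ID
        "client_secret": client_secret,  # value from "Certificates & secrets"
        "scope": scope,
    }).encode("ascii")
    return Request(
        TOKEN_URL.format(tenant_id=tenant_id),
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_token_request("my-tenant-id", "my-client-id", "my-secret")
    print(req.get_method(), req.full_url)
```

Passing the result to `urllib.request.urlopen` would actually request a token; if any of the three values is wrong, that request fails, which is roughly the kind of problem the Test button surfaces.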

Make sure you get a "Blob Storage: success" message when you press Test. It looks like that is the step where you got stuck.

The test will only pass if you have granted Contributor access on at least one Storage Account. In the Azure portal, go to Storage accounts / your storage account / Access Control (IAM) / Grant access to this resource / Add Role Assignment, and assign the Contributor role to the App Registration identified by the credentials above.

After setting up the new Azure User-Defined Credential, go to your Environment in the Matillion ETL UI (bottom left) and set its Azure Credentials to the new credential.

Once that is done, create a Data Transfer component:

- set the Source Type to Azure Blob Storage, then press the browse button on the Blob Location property. It should list every Storage Account the App Registration has been granted access to (there is only one in the screenshot below)

- find the blob you wish to load

- set the Target Type to S3, and choose a Target Object Name and a Target URL
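If it helps to see what the Data Transfer step amounts to, here is a rough standalone equivalent in Python, outside Matillion. It is a sketch under several assumptions: the `azure-identity`, `azure-storage-blob` and `boto3` packages are installed, AWS credentials are available through boto3's default lookup, and the account, container, blob, bucket and object names are placeholders. The way `build_s3_target` joins a URL prefix and an object name into an S3 key is my own hypothetical helper, only loosely mirroring the Target URL and Target Object Name properties. Also note that reading blob data with an Azure AD token like this needs a data-plane role such as Storage Blob Data Reader; Contributor alone does not grant that, so this script may need an extra role assignment that Matillion itself does not.

```python
from typing import Tuple


def build_s3_target(target_url: str, object_name: str) -> Tuple[str, str]:
    """Hypothetical helper: turn s3://bucket/prefix plus an object name into (bucket, key)."""
    if not target_url.startswith("s3://"):
        raise ValueError(f"expected an s3:// URL, got {target_url!r}")
    bucket, _, prefix = target_url[len("s3://"):].partition("/")
    if not bucket:
        raise ValueError(f"no bucket in {target_url!r}")
    prefix = prefix.rstrip("/")
    key = f"{prefix}/{object_name}" if prefix else object_name
    return bucket, key


def copy_blob_to_s3(tenant_id: str, client_id: str, client_secret: str,
                    account: str, container: str, blob_name: str,
                    target_url: str, object_name: str) -> Tuple[str, str]:
    """Download one blob using a service principal, then upload it to S3."""
    # Imported here so build_s3_target works even without these packages installed.
    import boto3
    from azure.identity import ClientSecretCredential
    from azure.storage.blob import BlobServiceClient

    # Same three values as the Azure User-Defined Credential in Matillion.
    credential = ClientSecretCredential(tenant_id, client_id, client_secret)
    service = BlobServiceClient(
        account_url=f"https://{account}.blob.core.windows.net",
        credential=credential,
    )
    # Reads the whole blob into memory, so this only suits modest file sizes.
    blob = service.get_blob_client(container=container, blob=blob_name)
    data = blob.download_blob().readall()

    bucket, key = build_s3_target(target_url, object_name)
    # boto3 finds AWS credentials through its default provider chain.
    boto3.client("s3").put_object(Bucket=bucket, Key=key, Body=data)
    return bucket, key
```

For example, `build_s3_target("s3://my-bucket/landing/", "data.csv")` gives `("my-bucket", "landing/data.csv")`.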

Run the Data Transfer component to copy the file from Azure Blob Storage into S3. After that, you can use an S3 Load component to copy the data from S3 into Snowflake.
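The S3 Load step ends up running a Snowflake `COPY INTO` statement. The helper below just renders a minimal statement of that shape, so you can see roughly what gets executed. It assumes CSV files with one header row and an existing storage integration; the table name, S3 URL and integration name are placeholders, and the SQL Matillion actually generates will have more options and will differ in detail. Table and integration names are interpolated as-is, so they must be trusted identifiers.

```python
def render_copy_into(table: str, s3_url: str, integration: str,
                     skip_header: int = 1) -> str:
    """Render a minimal Snowflake COPY INTO statement for CSV files in S3."""
    if not s3_url.startswith("s3://"):
        raise ValueError(f"expected an s3:// URL, got {s3_url!r}")
    # Escape single quotes so the URL stays a valid SQL string literal.
    url_literal = s3_url.replace("'", "''")
    return (
        f"COPY INTO {table}\n"
        f"  FROM '{url_literal}'\n"
        f"  STORAGE_INTEGRATION = {integration}\n"
        f"  FILE_FORMAT = (TYPE = CSV SKIP_HEADER = {skip_header})"
    )


if __name__ == "__main__":
    print(render_copy_into("my_table", "s3://my-bucket/landing/data.csv", "my_s3_integration"))
```

Using a storage integration rather than inline AWS keys keeps credentials out of the SQL text; this is a design choice for the sketch, not a statement of how the S3 Load component authenticates.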