After updating the environment from WildFly 13 to WildFly 18.0.1 we experienced the following error:

    A channel event listener threw an exception: java.lang.OutOfMemoryError: Direct buffer memory
        at java.base/java.nio.Bits.reserveMemory(Bits.java:175)
        at java.base/java.nio.DirectByteBuffer.<init>(DirectByteBuffer.java:118)
        at java.base/java.nio.ByteBuffer.allocateDirect(ByteBuffer.java:317)
        at org.jboss.xnio@3.7.3.Final//org.xnio.BufferAllocator$2.allocate(BufferAllocator.java:57)
        at org.jboss.xnio@3.7.3.Final//org.xnio.BufferAllocator$2.allocate(BufferAllocator.java:55)
        at org.jboss.xnio@3.7.3.Final//org.xnio.ByteBufferSlicePool.allocateSlices(ByteBufferSlicePool.java:162)
        at org.jboss.xnio@3.7.3.Final//org.xnio.ByteBufferSlicePool.allocate(ByteBufferSlicePool.java:149)
        at io.undertow.core@2.0.27.Final//io.undertow.server.XnioByteBufferPool.allocate(XnioByteBufferPool.java:53)
        at io.undertow.core@2.0.27.Final//io.undertow.server.protocol.http.HttpReadListener.handleEventWithNoRunningRequest(HttpReadListener.java:147)
        at io.undertow.core@2.0.27.Final//io.undertow.server.protocol.http.HttpReadListener.handleEvent(HttpReadListener.java:136)
        at io.undertow.core@2.0.27.Final//io.undertow.server.protocol.http.HttpReadListener.handleEvent(HttpReadListener.java:59)
        at org.jboss.xnio@3.7.3.Final//org.xnio.ChannelListeners.invokeChannelListener(ChannelListeners.java:92)
        at org.jboss.xnio@3.7.3.Final//org.xnio.conduits.ReadReadyHandler$ChannelListenerHandler.readReady(ReadReadyHandler.java:66)
        at org.jboss.xnio.nio@3.7.3.Final//org.xnio.nio.NioSocketConduit.handleReady(NioSocketConduit.java:89)
        at org.jboss.xnio.nio@3.7.3.Final//org.xnio.nio.WorkerThread.run(WorkerThread.java:591)

Nothing was changed on the application side. I looked at the buffer pools and it seems that some resources are not being freed. I triggered several manual GCs, but almost nothing happened. (Uptime 2h)

Before, with the old configuration, it looked like this (uptime >250h):

I did a lot of research, and the closest thing I could find is this post here on SO. However, that case involved websockets, and there are no websockets in use here. I read several (good) articles (1, 2, 3, 4, 5, 6) and watched this video about the topic. I tried the following things, but none of them had any effect:

- The OutOfMemoryError occurred at 5 GB, since the heap is 5 GB and the direct memory limit defaults to the maximum heap size. I reduced MaxDirectMemorySize to 512m and then to 64m, but then the OOM just occurs sooner.

- I set `-Djdk.nio.maxCachedBufferSize=262144`.

- I checked the number of IO workers: 96 (6 CPUs * 16), which seems reasonable. The system usually has short-lived threads (the largest pool size was 13), so I guess it cannot be the number of workers.

- I switched back to ParallelGC since it was the default in Java 8. Now a manual GC frees at least 10 MB, whereas with G1 nothing is freed at all. Still, neither GC is able to clean up the buffers.

- I removed the `<websockets>` element from the WildFly configuration just to be sure.

- I tried to reproduce the problem locally but failed.

- I analyzed the heap using Eclipse MAT and JXRay, but they just point to some internal WildFly classes.

- I reverted Java back to version 8 and the system still shows the same behavior, so WildFly is the most probable suspect.

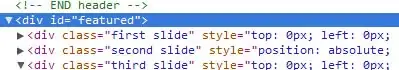

In Eclipse MAT one can also find these 1544 objects. They all have the same size.
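The buffer pool figures mentioned above can also be read from inside the JVM, which makes it easier to log them over time and compare the old and new setups. The following is only a minimal sketch using the standard `java.lang.management.BufferPoolMXBean`; the class name `DirectPoolStats` and its helper methods are made up for illustration and are not part of WildFly.

```java
import java.lang.management.BufferPoolMXBean;
import java.lang.management.ManagementFactory;
import java.util.List;

// Hypothetical helper class (illustration only).
class DirectPoolStats {

    /** Returns the JVM's "direct" buffer pool bean, or null if none is exposed. */
    static BufferPoolMXBean directPool() {
        List<BufferPoolMXBean> pools =
                ManagementFactory.getPlatformMXBeans(BufferPoolMXBean.class);
        for (BufferPoolMXBean pool : pools) {
            if ("direct".equals(pool.getName())) {
                return pool;
            }
        }
        return null;
    }

    /** Formats buffer count, used memory and total capacity of a pool. */
    static String describe(BufferPoolMXBean pool) {
        return String.format("%s: count=%d, used=%d bytes, capacity=%d bytes",
                pool.getName(), pool.getCount(),
                pool.getMemoryUsed(), pool.getTotalCapacity());
    }

    public static void main(String[] args) {
        BufferPoolMXBean pool = directPool();
        if (pool == null) {
            System.out.println("No direct buffer pool bean available");
        } else {
            System.out.println(describe(pool));
        }
    }
}
```

Calling `describe(directPool())` periodically, for example from a scheduled task inside the deployment, should show whether the count and capacity keep growing or level off.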

The only thing that did work was to disable the direct byte buffers in WildFly completely.

/subsystem=io/buffer-pool=default:write-attribute(name=direct-buffers,value=false)

However, from what I read, this has a performance drawback?

So does anyone know what the problem is? Any hints for additional settings / tweaks? Or is there a known WildFly or JVM bug related to this?
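To make the difference behind the `direct-buffers` switch concrete, here is a minimal plain-JDK sketch of the two allocation modes. It is not WildFly or Undertow code; the class name `HeapVsDirect` and the 16 KiB size are arbitrary choices for illustration. As far as I understand, heap buffers usually have to be copied into a temporary direct buffer before channel I/O, which would be the source of the performance drawback.

```java
import java.nio.ByteBuffer;

// Hypothetical demo class (illustration only).
class HeapVsDirect {

    /** Allocates either an off-heap (direct) or an on-heap buffer of the given size. */
    static ByteBuffer allocate(int size, boolean direct) {
        return direct ? ByteBuffer.allocateDirect(size) : ByteBuffer.allocate(size);
    }

    public static void main(String[] args) {
        int size = 16 * 1024; // arbitrary illustrative buffer size

        // Backed by a byte[] on the Java heap, reclaimed like any other object.
        ByteBuffer heap = allocate(size, false);

        // Backed by native memory, counted against -XX:MaxDirectMemorySize and
        // only released after the owning buffer object becomes unreachable.
        ByteBuffer direct = allocate(size, true);

        System.out.println("heap buffer   isDirect=" + heap.isDirect());
        System.out.println("direct buffer isDirect=" + direct.isDirect());
    }
}
```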

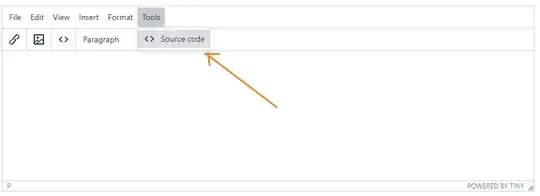

Update 1: Regarding the IO threads, maybe the concept is not 100% clear to me, because there is the ioThreads value

And there are the threads and thread pools.

From the definition one could think that ioThreads threads are created per worker thread (in my case 12)? Still, the number of threads / workers seems quite low in my case...
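For reference, the two numbers above (12 and 96) match what one gets if the IO worker defaults are derived from the CPU count. The sketch below only reproduces that arithmetic. The multipliers 2 and 16 are my assumption based on the observed values and the WildFly IO subsystem documentation, and the class `IoWorkerDefaults` is hypothetical.

```java
// Hypothetical helper (illustration only); the multipliers are assumed
// defaults, not values read from the running server.
class IoWorkerDefaults {

    /** Assumed default number of IO (selector) threads: cpuCount * 2. */
    static int ioThreads(int cpuCount) {
        return cpuCount * 2;
    }

    /** Assumed default upper bound of the worker task pool: cpuCount * 16. */
    static int taskMaxThreads(int cpuCount) {
        return cpuCount * 16;
    }

    public static void main(String[] args) {
        // 6 CPUs as in this setup; on a live system one could use
        // Runtime.getRuntime().availableProcessors() instead.
        int cpus = 6;
        System.out.println("io-threads=" + ioThreads(cpus)
                + ", task-max-threads=" + taskMaxThreads(cpus));
        // prints "io-threads=12, task-max-threads=96"
    }
}
```

If that reading is right, 12 would be the fixed number of IO threads and 96 only a maximum for the task pool, which would fit a largest observed pool size of 13.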

Update 2: I downgraded Java and it still shows the same behavior. Thus I suspect WildFly to be the cause of the problem.