I am trying to understand the SHAP values produced by SHAP's TreeExplainer for a simple classification problem (for example, the iris dataset).

I want to explain the importance of the features for each individual sample. From the shape of `shap_values`, I can see that an importance value is calculated for every sample.

Below, I train a `RandomForestClassifier` and explain the test set with SHAP. I use SHAP's `force_plot` method to visualize the feature importance for each class for a single sample.

My question is: how should I interpret these force plots? In particular, what does `expected_value` mean for a classification problem, and what is the meaning of the bold value in each plot (for a regression problem, this would be the predicted value)?
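To make the terms concrete, here is a minimal, self-contained sketch that computes exact Shapley values by brute-force enumeration for a hypothetical three-feature, three-class softmax model. The weights `W` and `b`, the background data and the sample `x` are all made up and stand in for the random forest. The value function averages the model output over background rows, which is the kind of quantity KernelExplainer approximates; TreeExplainer computes its values differently, but SHAP values from both satisfy the same additivity. In this sketch, the per-class base value (the analogue of `expected_value`) is the average predicted probability for that class, and the base value plus the sum of that class's feature contributions equals the predicted probability for the sample (the analogue of the bold number).

```python
import itertools
import math

import numpy as np


def softmax(z):
    # Row-wise softmax: raw class scores -> class probabilities.
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


# Hypothetical toy classifier (3 features x 3 classes), NOT the random forest.
W = np.array([[1.0, -0.5, 0.2],
              [0.3, 0.8, -1.0],
              [-0.7, 0.1, 0.9]])
b = np.array([0.1, -0.2, 0.0])


def predict_proba(X):
    # X: (n_rows, n_features) -> (n_rows, n_classes)
    return softmax(X @ W + b)


rng = np.random.default_rng(0)
background = rng.normal(size=(50, 3))  # hypothetical background data
x = np.array([0.5, -1.0, 2.0])         # hypothetical sample being explained
n_features = x.shape[0]


def value(subset):
    # v(S): mean predicted probabilities when the features in S are fixed to
    # their values in x and the other features come from the background rows.
    X = background.copy()
    cols = list(subset)
    X[:, cols] = x[cols]
    return predict_proba(X).mean(axis=0)  # one entry per class


def exact_shapley():
    # phi[j, k]: Shapley contribution of feature j to the probability of class k.
    phi = np.zeros((n_features, W.shape[1]))
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(len(others) + 1):
            for S in itertools.combinations(others, size):
                weight = (math.factorial(size)
                          * math.factorial(n_features - size - 1)
                          / math.factorial(n_features))
                phi[i] += weight * (value(S + (i,)) - value(S))
    return phi


phi = exact_shapley()
base_value = value(())             # analogue of explainer.expected_value
fx = predict_proba(x[None, :])[0]  # analogue of the bold value in each plot

print("base values:", base_value, "sum:", base_value.sum())
print("base + contributions:", base_value + phi.sum(axis=0))
print("predicted probabilities:", fx)
```

Because every row of probabilities sums to 1, the per-class base values also sum to 1, just like the three `expected_value` entries printed in the question.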

I searched the internet and found the thread below, but it didn't really answer my questions.

https://github.com/slundberg/shap/issues/367

```python
import shap
import matplotlib.pyplot as plt
import pandas as pd
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

iris = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, random_state=0
)
X_test = pd.DataFrame(X_test, columns=iris.feature_names)
y_test = pd.DataFrame(y_test)

# train a classifier
model = RandomForestClassifier(random_state=0).fit(X_train, y_train)

# calculate the SHAP values
explainer = shap.TreeExplainer(model)
shap_values = explainer.shap_values(X_test)

# Depending on the SHAP version, multiclass SHAP values are returned either as a
# list of per-class arrays of shape (n_samples, n_features) or as one array of
# shape (n_samples, n_features, n_classes); normalize to the list form used below.
if not isinstance(shap_values, list):
    shap_values = [shap_values[:, :, k] for k in range(shap_values.shape[2])]

# print the `expected_value`
print(explainer.expected_value)
# [0.32955357 0.30758929 0.36285714]

sample_index = 0
for label_index in range(3):
    # plot the importance for each label_index
    shap.force_plot(
        explainer.expected_value[label_index],
        shap_values[label_index][sample_index],
        X_test.iloc[sample_index, :],
        matplotlib=True,
        show=False,
    )
    # use matplotlib for plot formatting
    fig = plt.gcf()
    fig.set_figheight(3)
    fig.set_figwidth(7)
    plt.show()
```

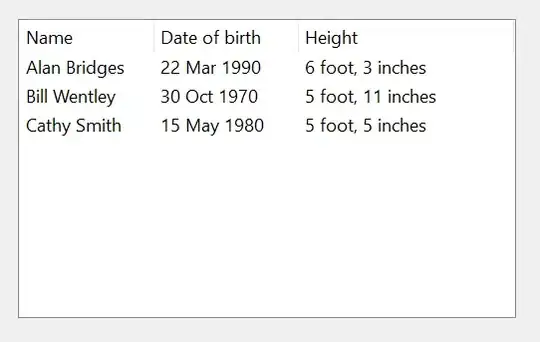

label_index: 0

label_index: 1

label_index: 2