I have a video layer (an `AVPlayerLayer`) that I want to render onto an `SCNPlane`. It works on the Simulator, but not on the device.

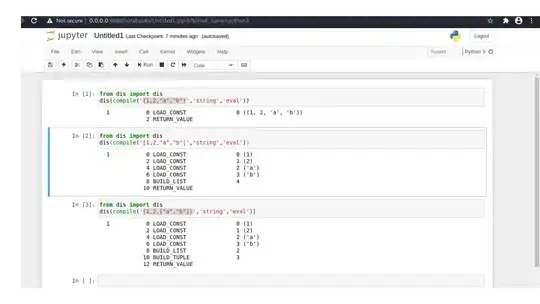

Here's a visual:

Here's the code:

```objc
// Display plane
SCNPlane *displayPlane = [SCNPlane planeWithWidth:displayWidth height:displayHeight];
displayPlane.cornerRadius = cornerRadius;
SCNNode *displayNode = [SCNNode nodeWithGeometry:displayPlane];
[scene.rootNode addChildNode:displayNode];

// Apply material
SCNMaterial *displayMaterial = [SCNMaterial material];
displayMaterial.diffuse.contents = [[UIColor greenColor] colorWithAlphaComponent:1.0f];
[displayNode.geometry setMaterials:@[displayMaterial]];

// Move to front + position for rim
displayNode.position = SCNVector3Make(0, rimTop - 0.08, /*0.2*/ 1);

// Create video item
NSBundle *bundle = [NSBundle mainBundle];
NSString *path = [bundle pathForResource:@"tv_preview" ofType:@"mp4"];
NSURL *url = [NSURL fileURLWithPath:path];
AVAsset *asset = [AVAsset assetWithURL:url];
AVPlayerItem *item = [AVPlayerItem playerItemWithAsset:asset];

queue = [AVQueuePlayer playerWithPlayerItem:item];
looper = [AVPlayerLooper playerLooperWithPlayer:queue templateItem:item];
queue.muted = YES;

layer = [AVPlayerLayer playerLayerWithPlayer:queue];
layer.frame = CGRectMake(0, 0, w, h);
layer.videoGravity = AVLayerVideoGravityResizeAspectFill;

displayMaterial.diffuse.contents = layer;
displayMaterial.doubleSided = YES;

[queue play];

//[self.view.layer addSublayer:layer];
```

I can confirm that the plane itself exists: when the `AVPlayerLayer` isn't applied to it, it shows up green (first image above). If the video layer is instead added directly to the parent view's layer (the commented-out last line above), the video plays fine (final image above). I wondered whether it was a file-system issue, but in that case I'd expect the video not to play in the final image either.

EDIT: Setting `queue` (the `AVPlayer`) directly as the material's contents works on the Simulator, albeit ugly as hell, but crashes on the device with the following error log:

```
Error: Could not get pixel buffer (CVPixelBufferRef)

validateFunctionArguments:3797: failed assertion `Fragment Function(commonprofile_frag): incorrect type of texture (MTLTextureType2D) bound at texture binding at index 4 (expect MTLTextureTypeCube) for u_radianceTexture[0].'
```
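For reference, the "set the player directly" variant from the edit amounts to replacing the `AVPlayerLayer` assignment with the player itself. The sketch below wraps that swap in a standalone helper; the name `ApplyPlayerToMaterial` is hypothetical, and it assumes SceneKit's support for an `AVPlayer` as material property contents (iOS 11 / macOS 10.13 and later).

```objc
#import <AVFoundation/AVFoundation.h>
#import <SceneKit/SceneKit.h>

// Hypothetical helper illustrating the EDIT variant: the AVPlayer (in the code
// above, the AVQueuePlayer `queue`) is assigned directly as the diffuse contents,
// with no AVPlayerLayer in between. Assumes iOS 11+ / macOS 10.13+, where
// SceneKit accepts an AVPlayer as material property contents.
static void ApplyPlayerToMaterial(SCNMaterial *material, AVPlayer *player) {
    material.diffuse.contents = player; // player instead of the AVPlayerLayer
    material.doubleSided = YES;         // same as in the original setup
    [player play];
}
```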